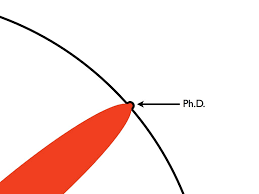

A few words about juggling

When I was a kid I learned to juggle.

Barely.

I could handle 3 balls for about 30 seconds.

I bluster, that if I practiced, I could handle 5 and go for minutes.

This Reddit thread estimates a week of practice to juggle 3, a month to juggle 4, and a year to juggle 5.

Wikipedia has a whole page on Juggling world records. No one can continuously juggle more than 7 balls. The time record for 7 is 16 minutes. Someone juggled 6 for 30 minutes. The record for 5 is 3 hours and 44 minutes. For 3 it's 13 hours.

The takeaway is this: some humans can juggle more than others but even the greatest max out at 7.

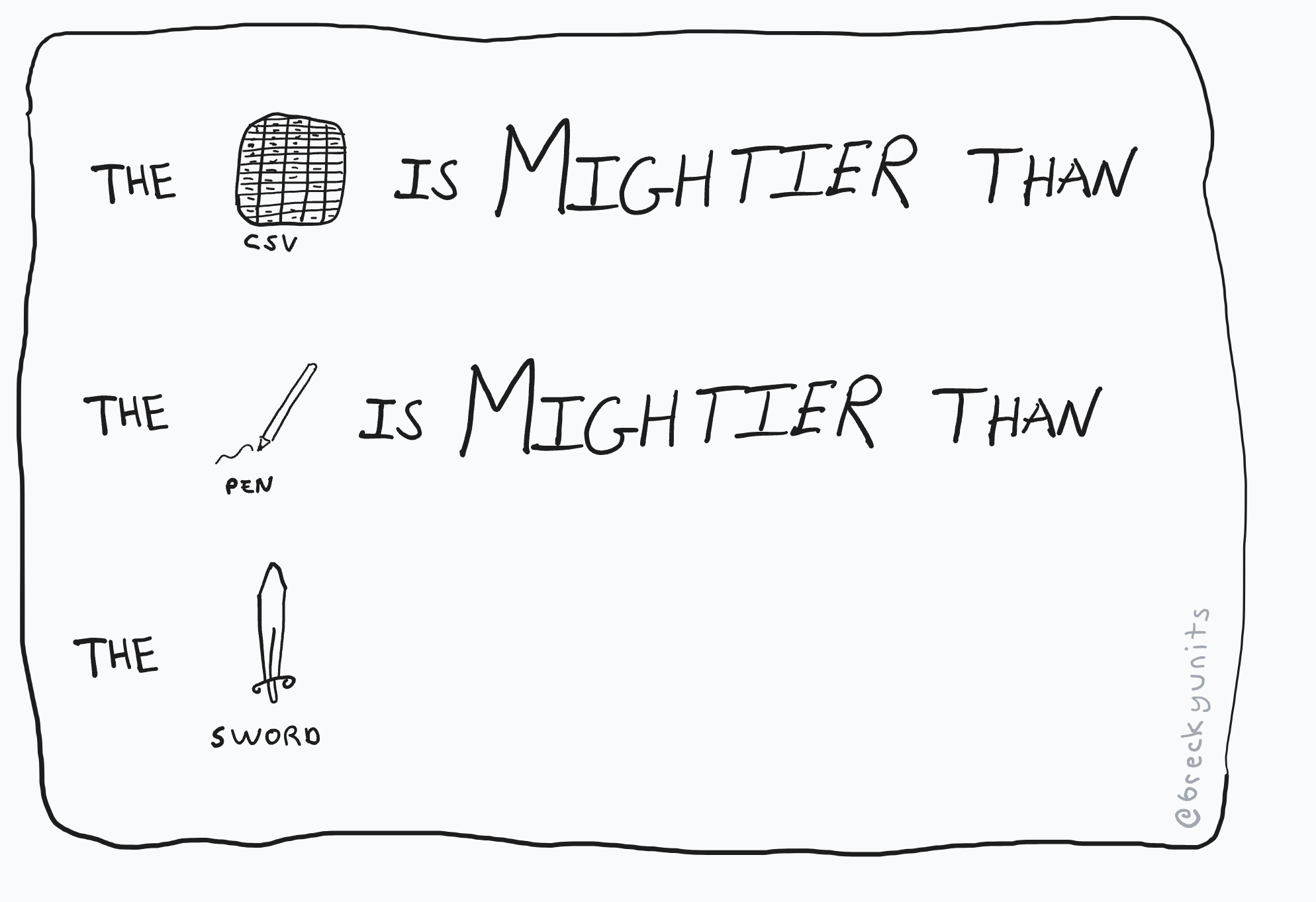

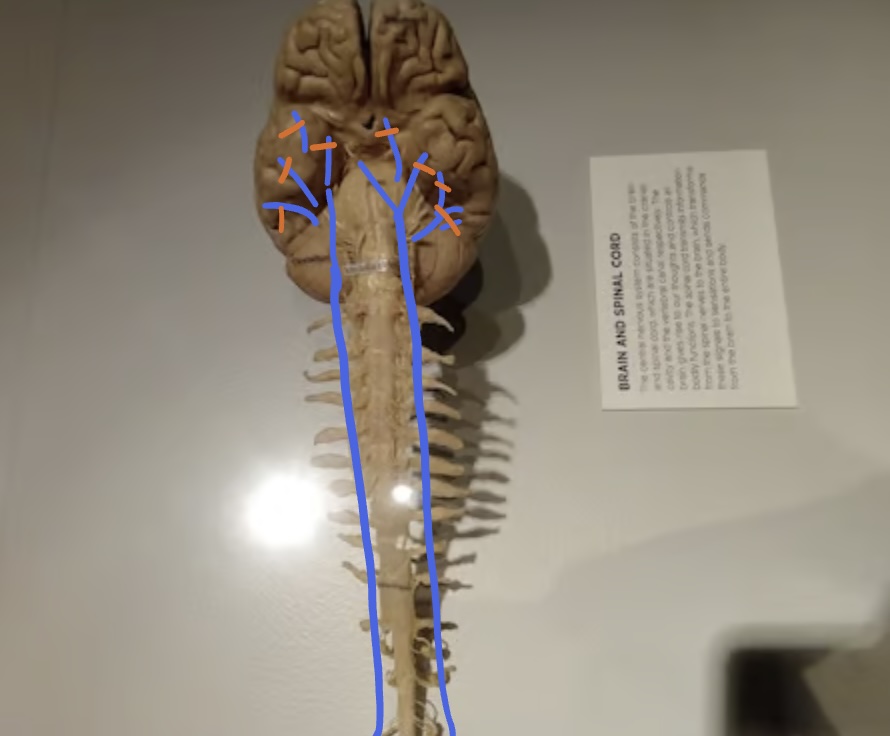

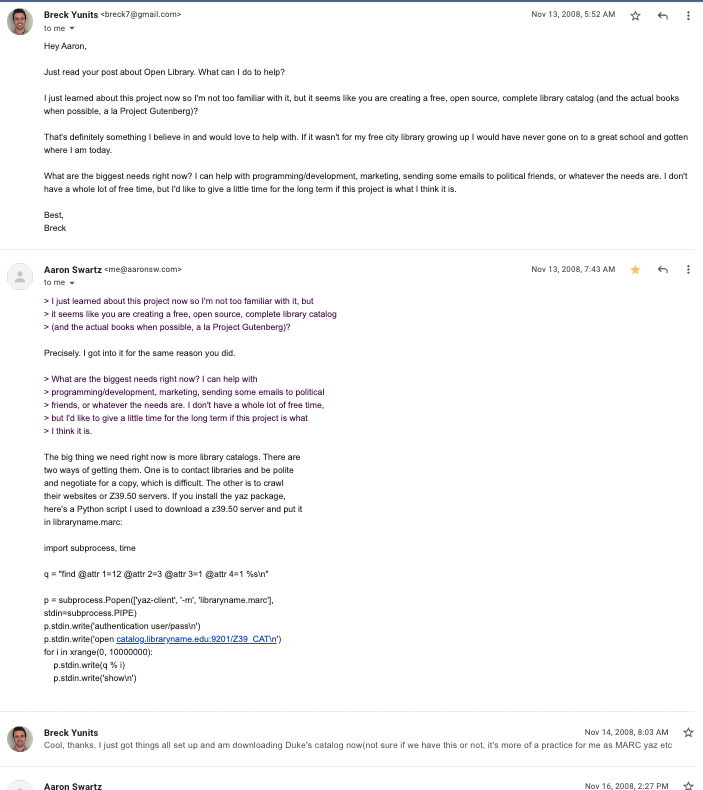

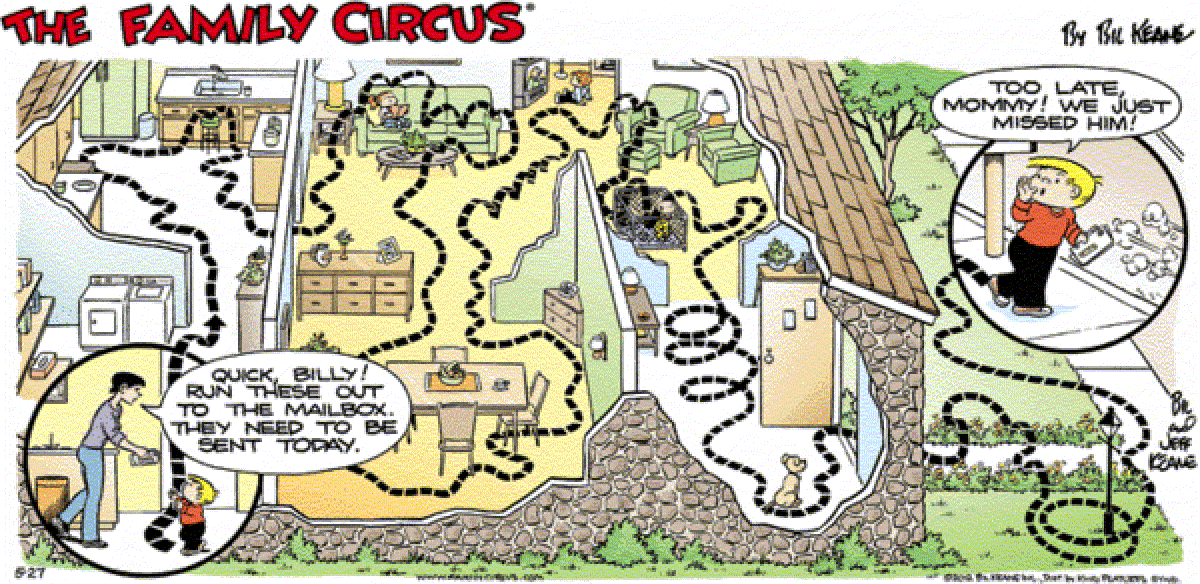

Mental juggling and JIQ

It is not physical juggling that I'm interested in.

I'm interested in mental juggling.

What if humans had the same mental juggling limits as physical?

We could compress the IQ scale to a JIQ scale of 1-7.

A person with a JIQ of 1 could handle only 1 mental ball at a time. Dogs would have a JIQ of 1.

A person with a JIQ of 6 gets overloaded by adding 1 more concept, and the whole thing comes tumbling down.

A person with a JIQ of 7, could handle 7 without becoming overloaded. These would be the geniuses of the world.

You can imagine a superlinear difference between the solutions from a 6 versus a 7.

Where do I rank?

On the JIQ scale, I think I'm a 5. Probably a 4 on a lot of days, and maybe a 6 on a really exceptional day.

I should probably be very grateful for this. Instead, I'm annoyed I'm not a 7. I've encountered a number of 7s in my life, and am in awe--and a bit jealous--of their mental capabilities.

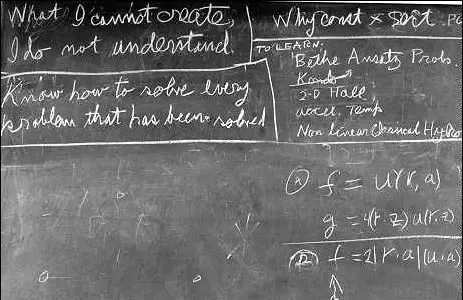

I'm constantly looking for/trying to invent new thinking tools to help me mentally juggle more, to be able to think, if even briefly, as a 7.

Concepts as Balls

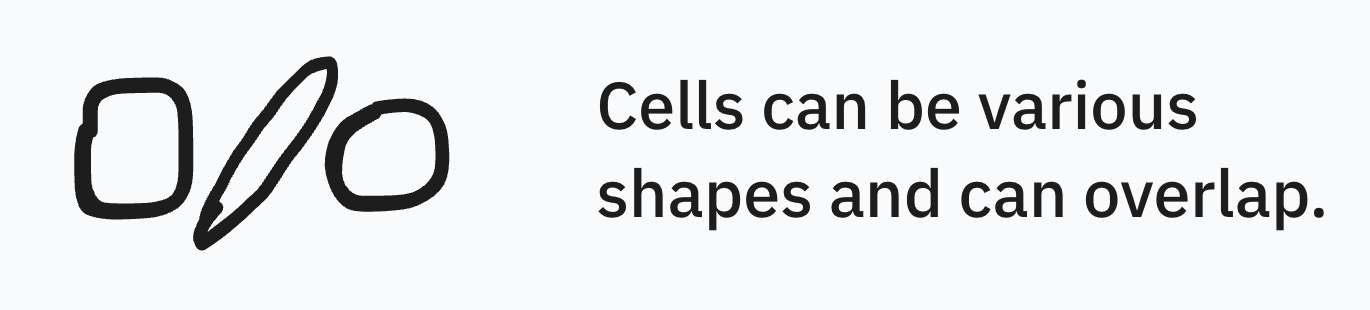

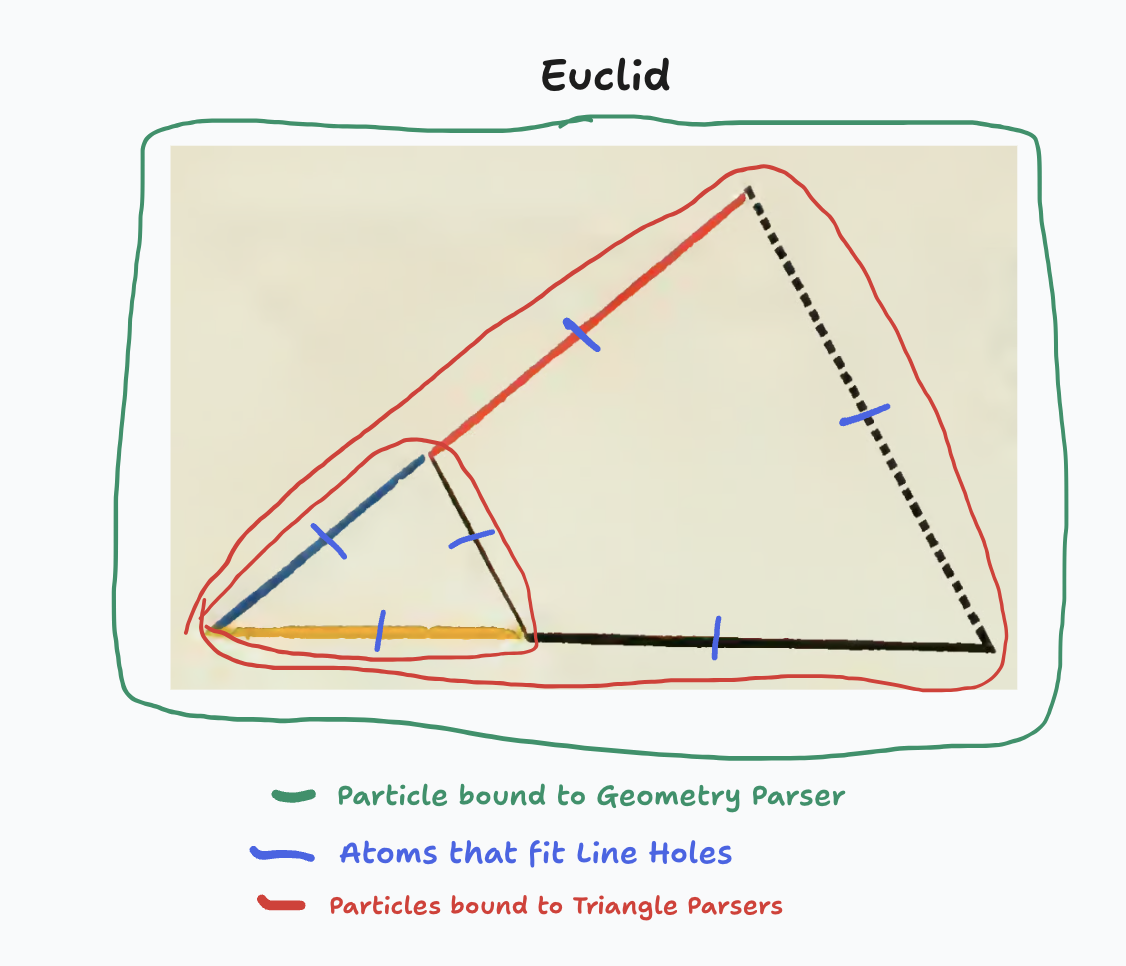

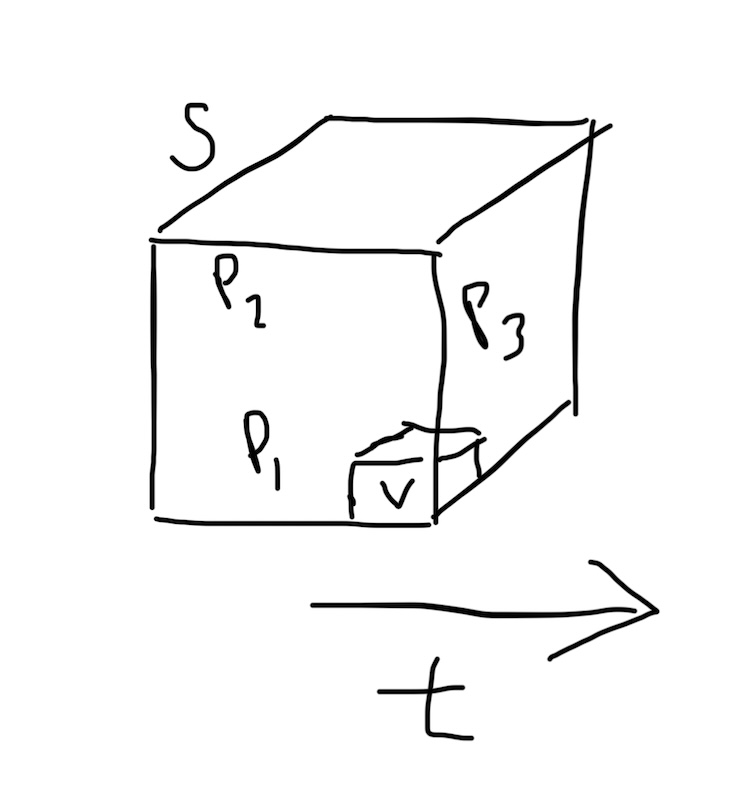

Lately I've been exploring a minimal universal 3D/4D language where all concepts are represented by balls.

A simple example is thinking of a water molecule as a ball containing 2 balls of hydrogen and 1 ball of oxygen.

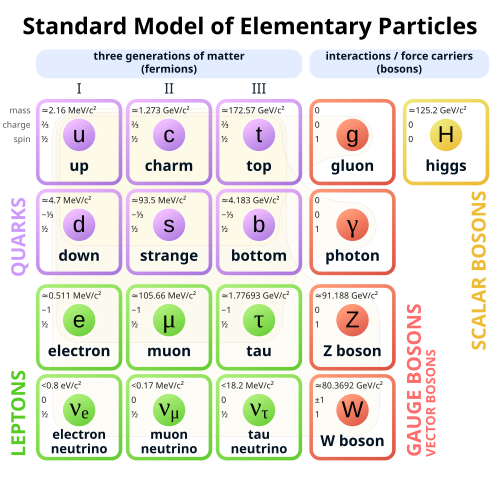

A larger example would be particle physics, where each particle type is a ball. Most people can juggle the simpler 3 ball particle set of electrons, protons and neutrons.

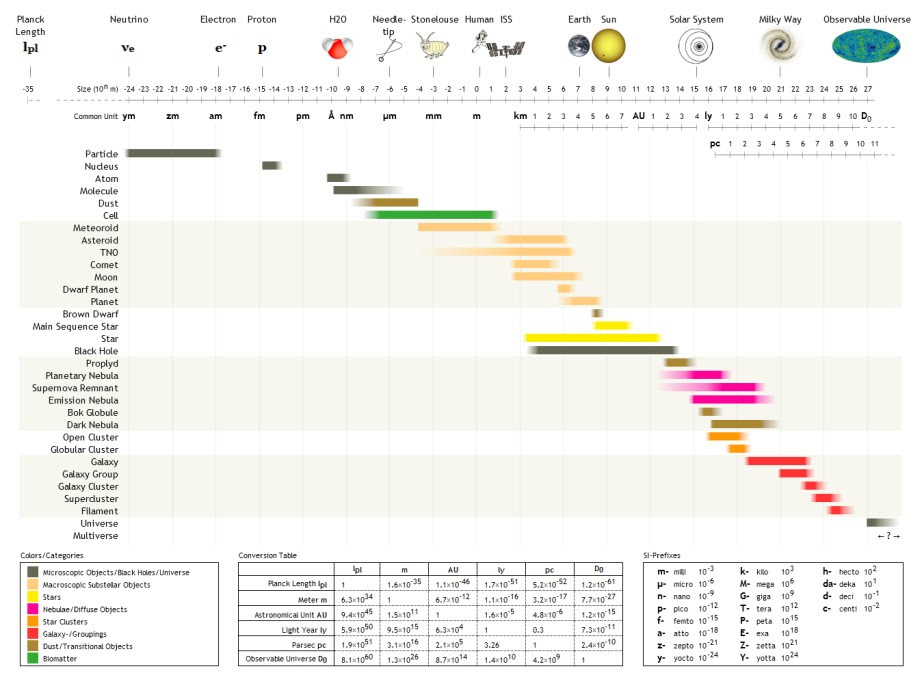

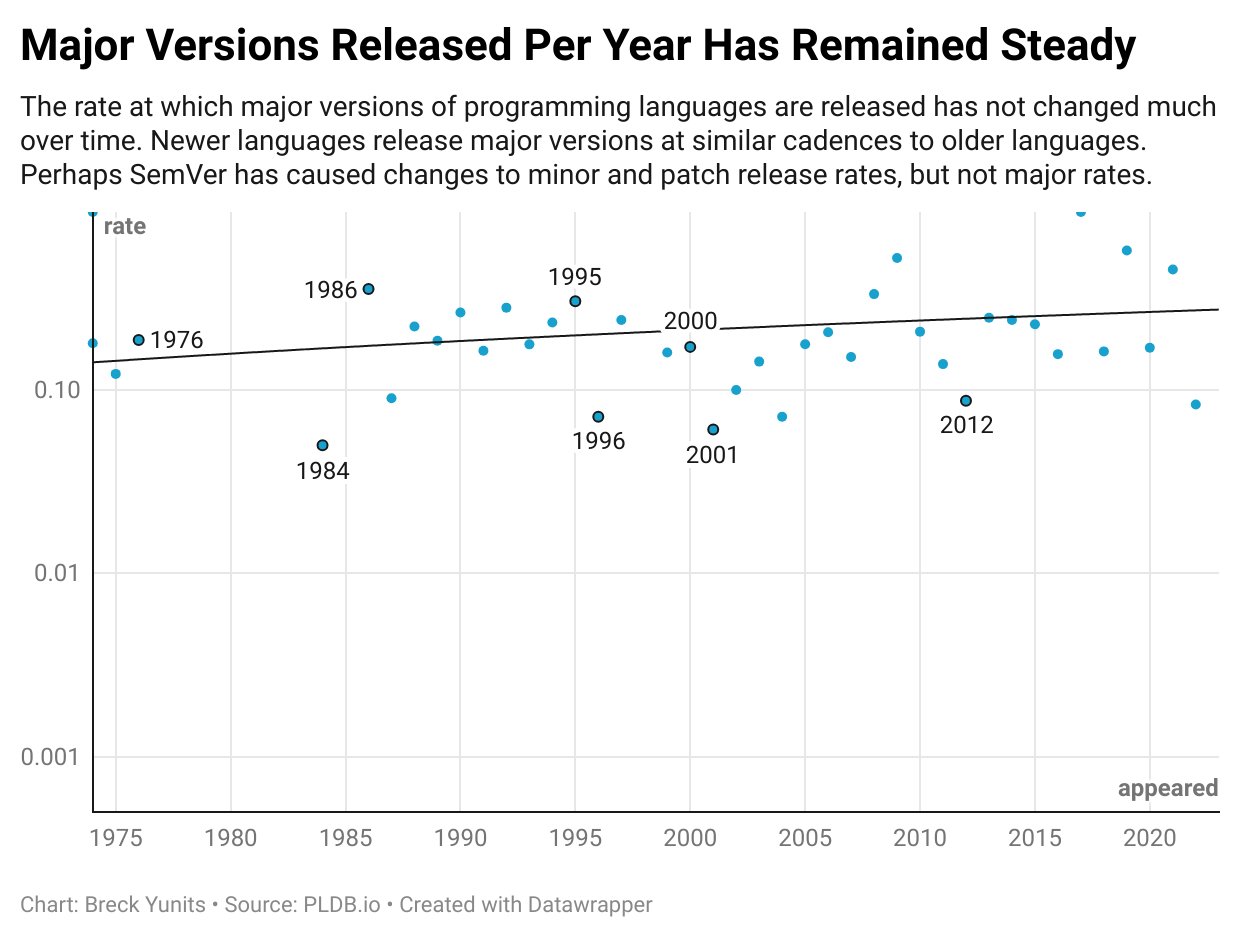

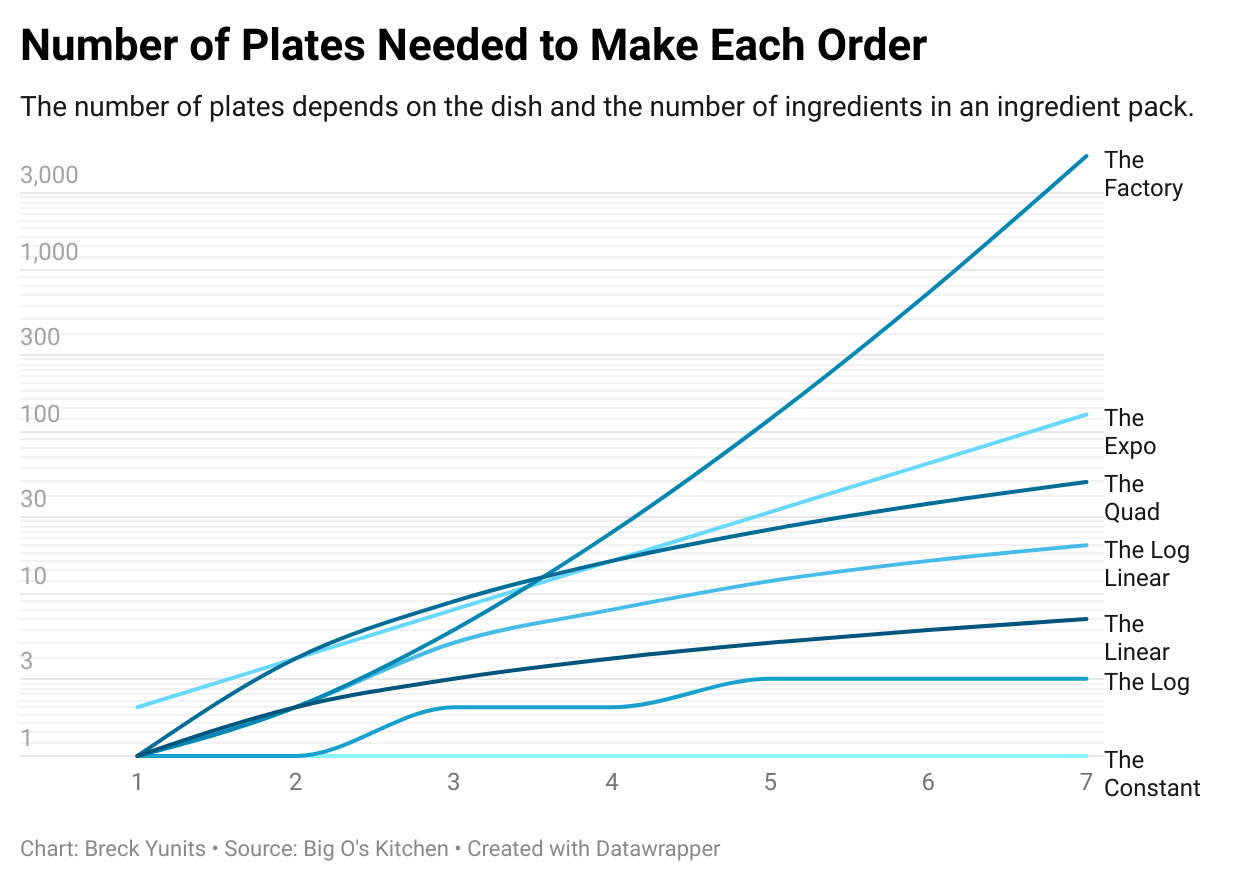

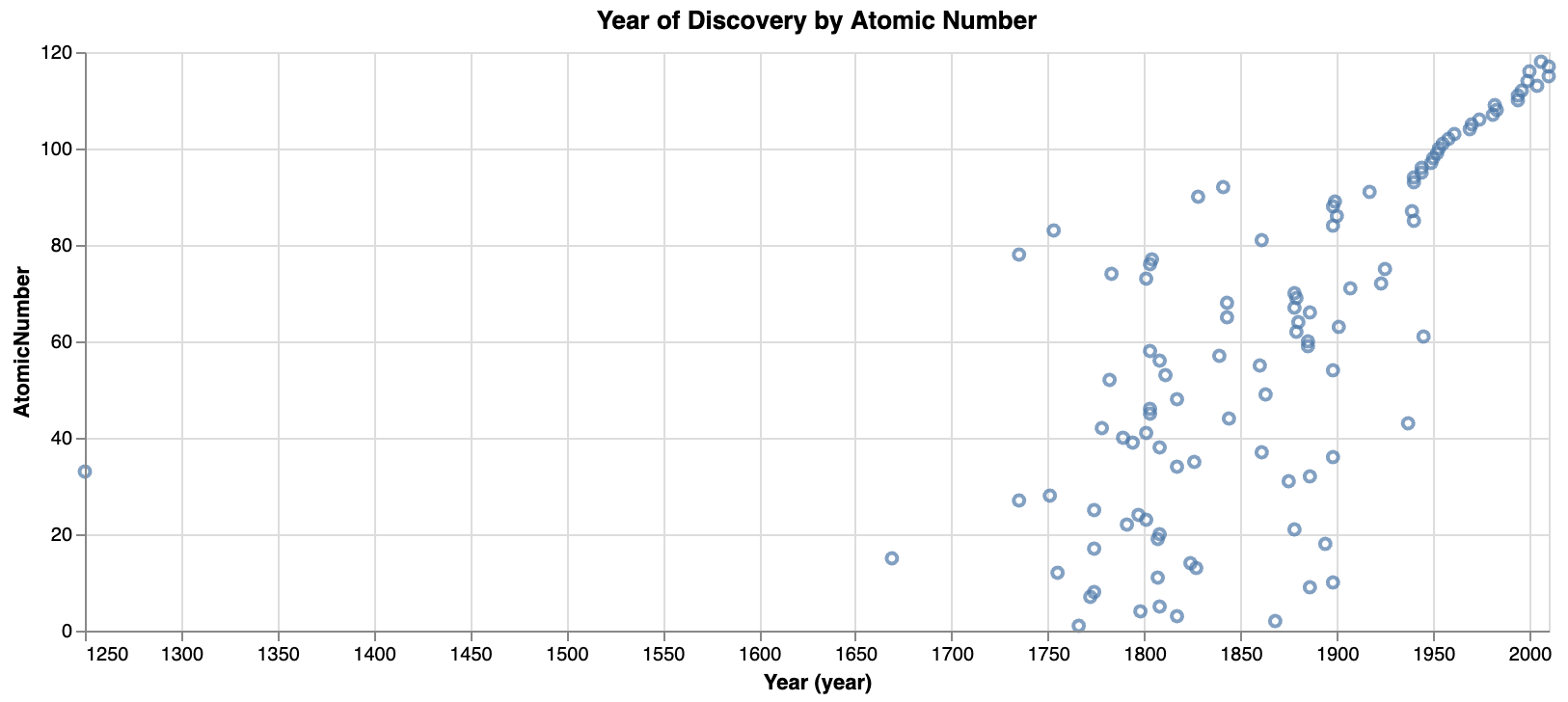

But the current full Standard Model set contains over a dozen balls, which already exceeds humanity's juggling limit:

A chart of the Standard Model of Particle Physics. I think each of these also has an anti-particle, but we're already way over the juggling limit.

Trying to Juggle Thousands of Balls

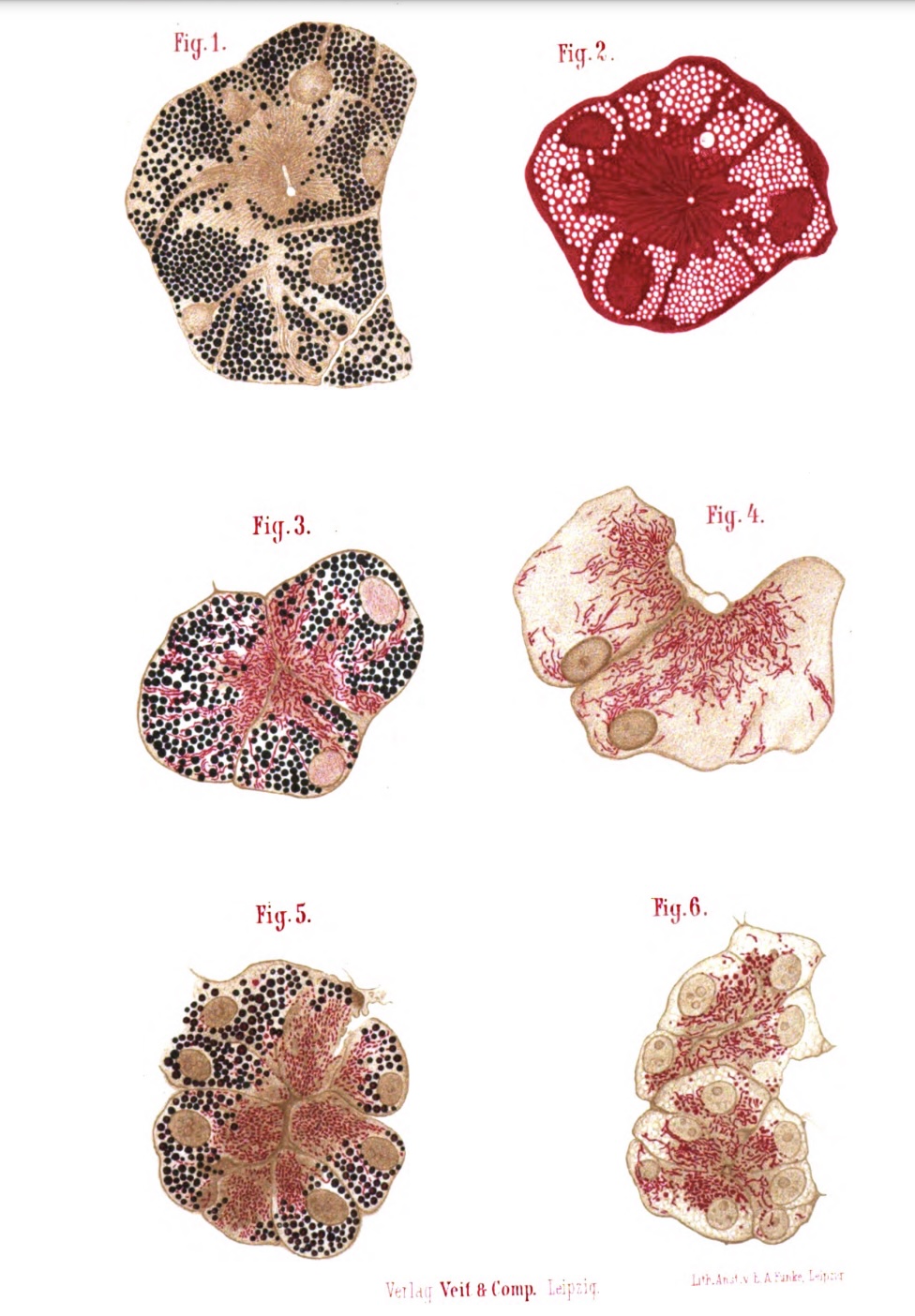

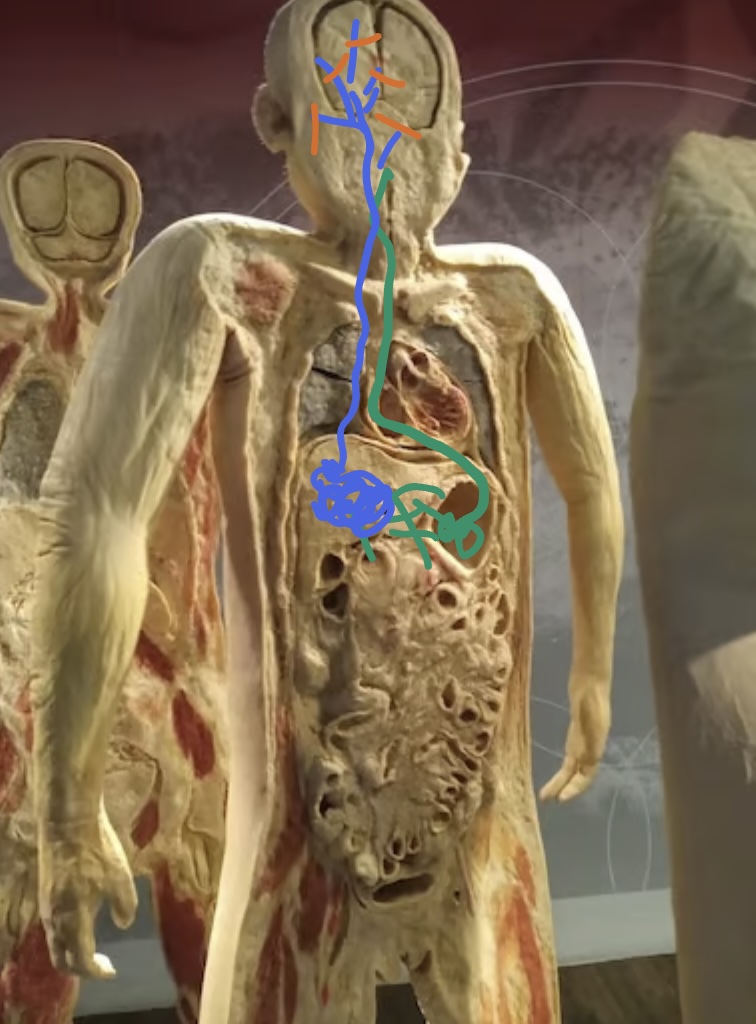

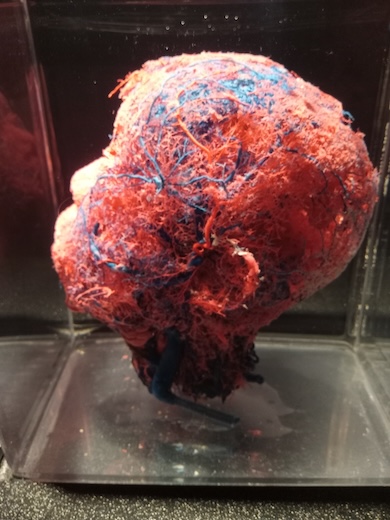

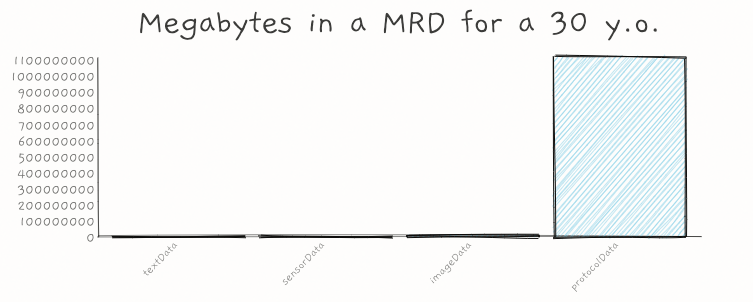

I've been reading microbiology papers and mentally turning each sentence into scenes of balls. Often there are many hundreds of concepts (balls) explicitly discussed in these papers. And not only that, but these concepts depend on other concepts, so there's another 10-100x dependency balls to juggle to fully grok a paper - tens of thousands of balls!

How does one mentally juggle that many?!

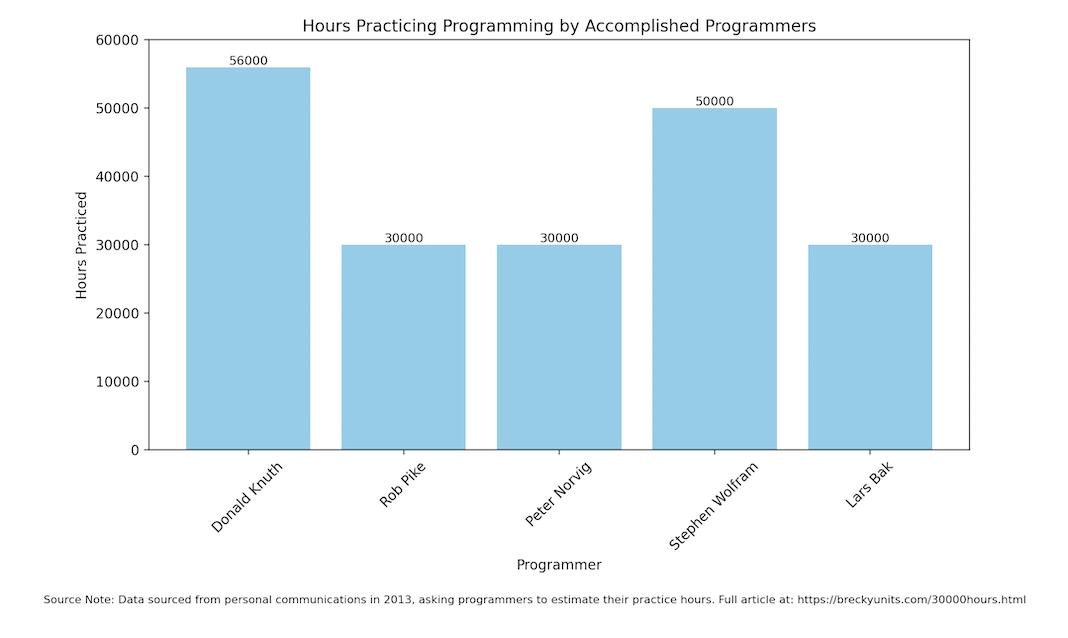

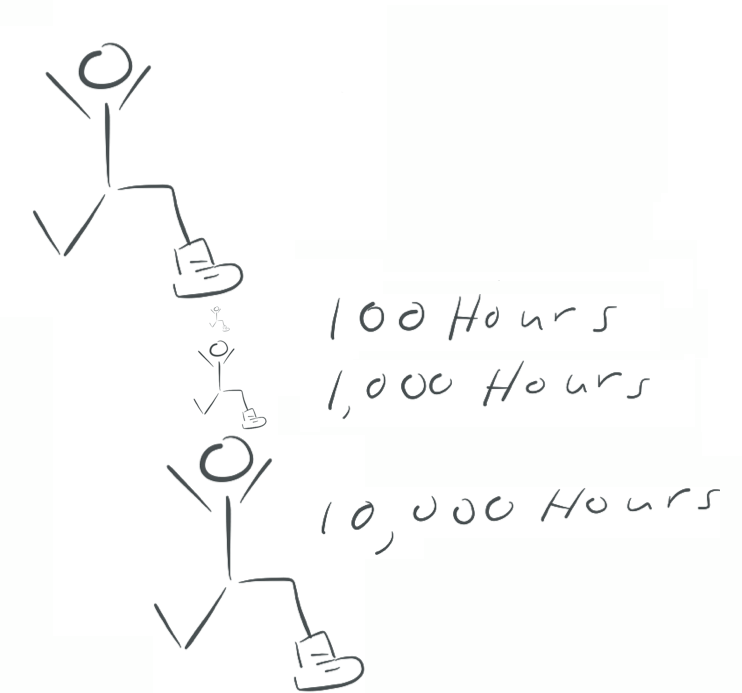

With practice you can probably get a little better.

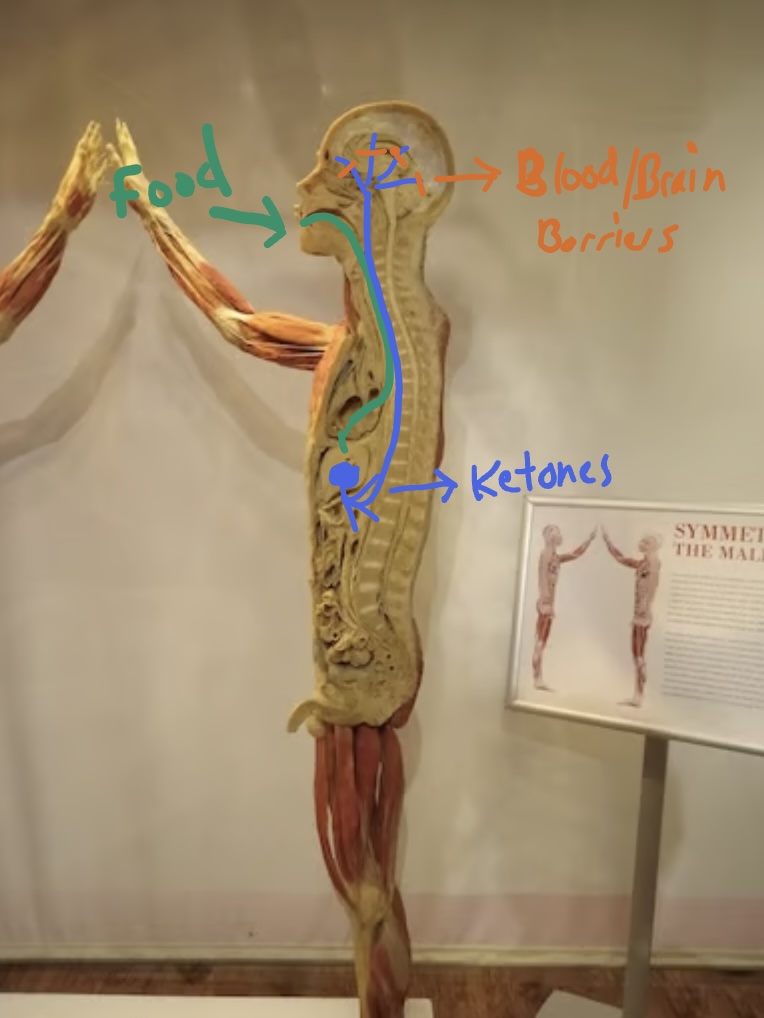

There are probably tricks, such as fasting, that might put your mind in a state that can handle a few more.

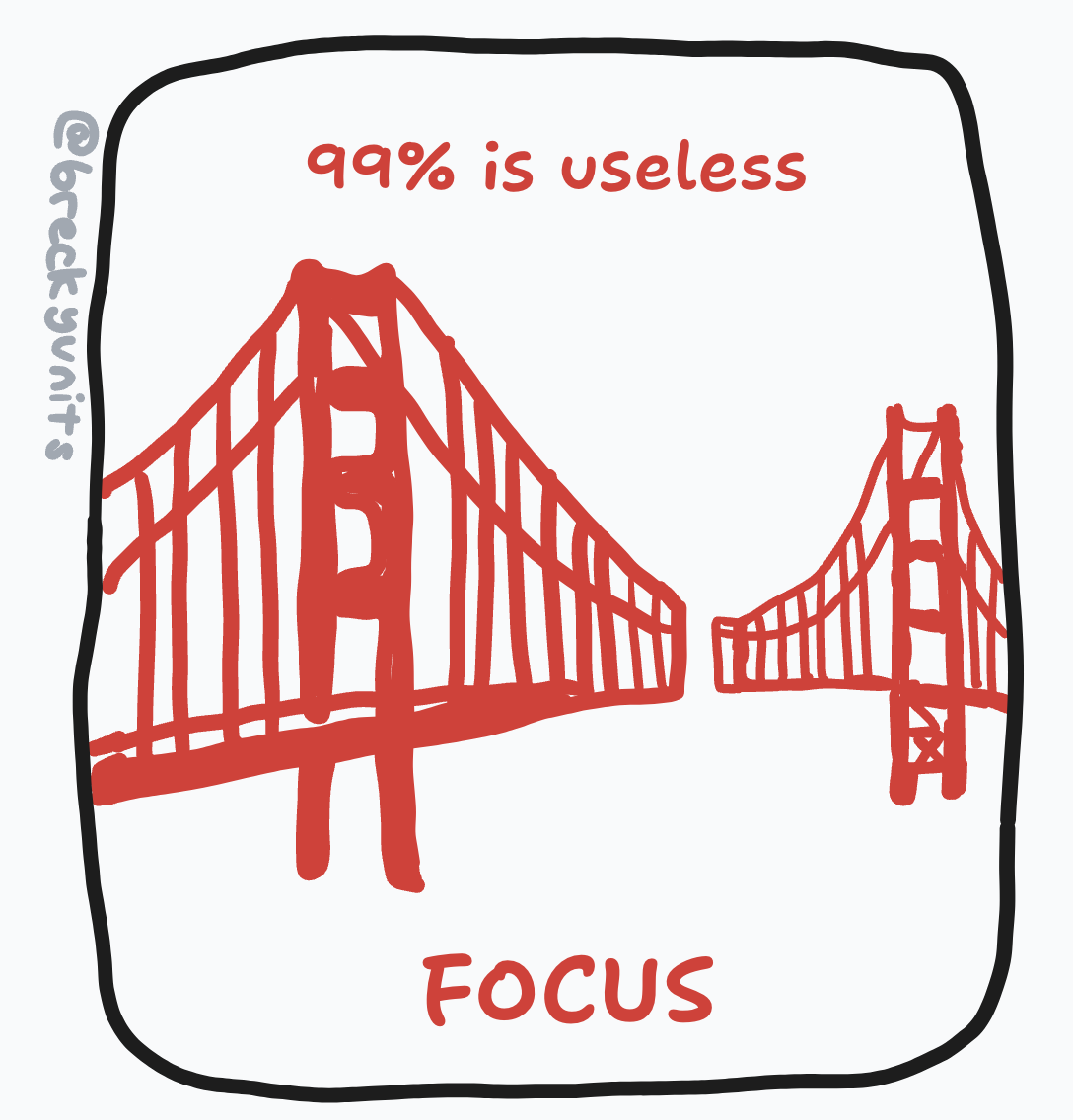

But even then, you are orders of magnitude away from being able to juggle everything all at once and so you must focus on one set at a time.

Focusing on Subsets

Humans divide and conquer. Different groups of humans have focused on different, small subsets of balls, mastering those and contributing their insights to the common body of knowledge.

Where should the divisions be? You want to find causally linked subsets. Juggling insulin, toenails, and hair follicles is not as useful as juggling insulin, glucose, and the pancreas.

Collective Juggling

Simple causal models can build upon each other to create things of tremendous complexity.

If you spherically modeled a modern CPU/GPU, you'd have billions of balls, with perhaps a million unique conceptual balls needed to describe it.

No human can juggle that many in their head all at once, but a million humans, each refining and assembling a subset of 5 - 7, can do a collective juggling act that dwarfs any individual performance.

Related posts

- The Spherical Object Model (2025)

- Dreaming of a 4D Wikipedia (2025)

- Does 3D exist? (2025)

- Ford (2025)

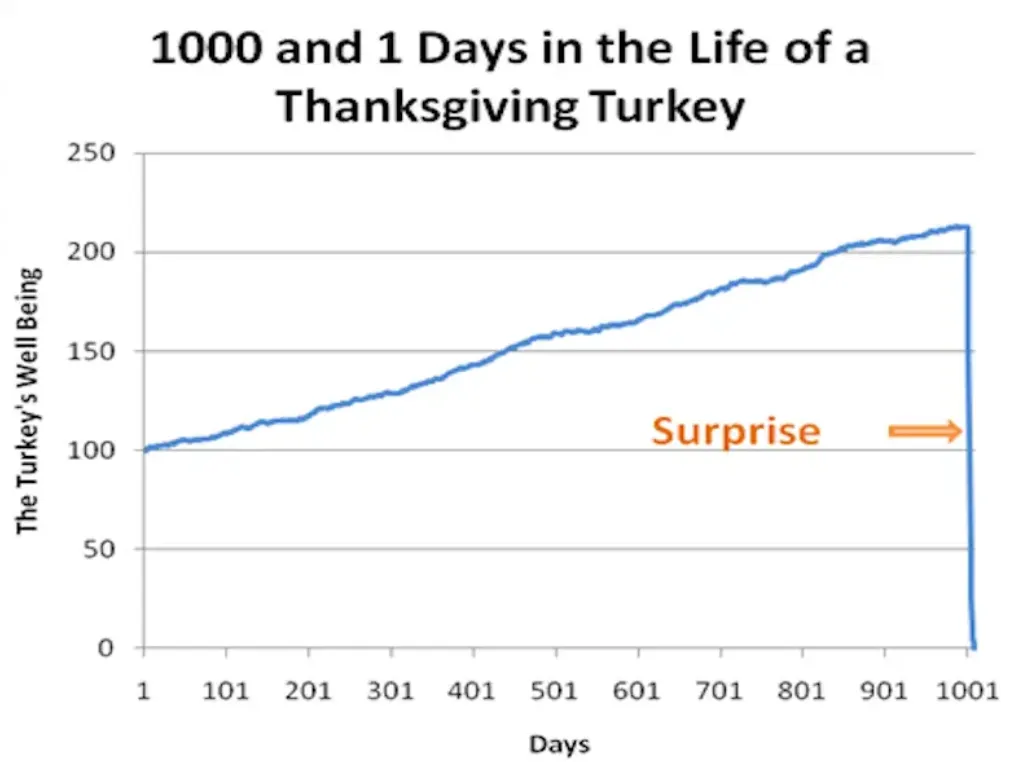

Every time I see a school of fish, I'm bewildered by the conformity. Every fish looks and swims the same.

Don't fish have a desire to be individuals? Where are all the contrarians?

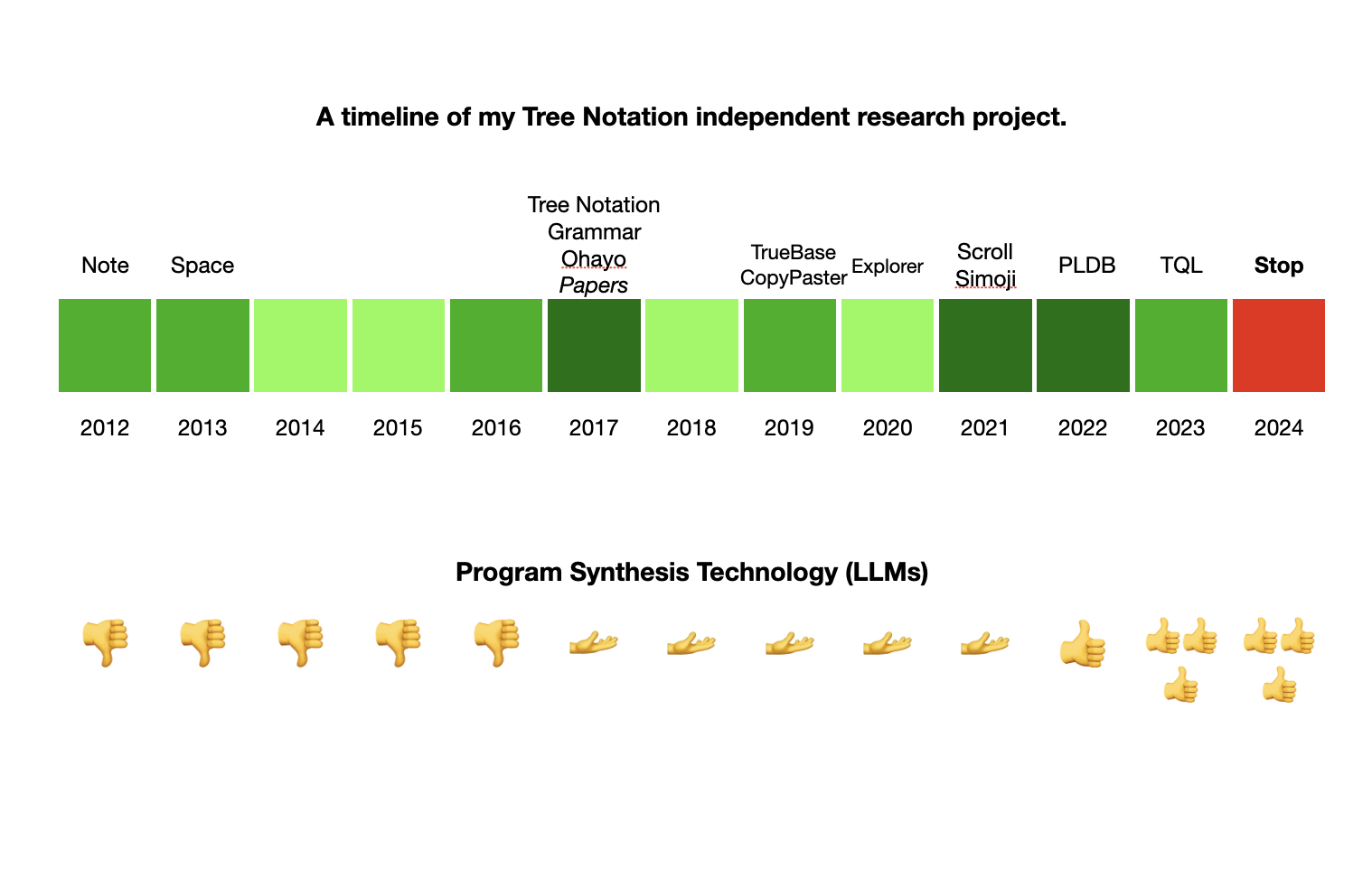

Today I coded a little simoji simulation and surprised myself-even well meaning contrarians can cause a lot of damage!

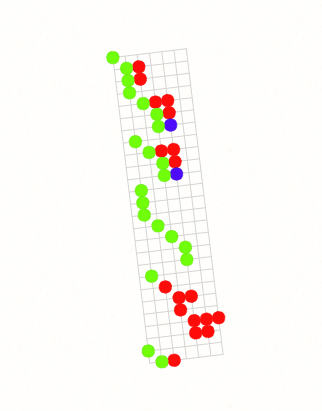

The Simulation

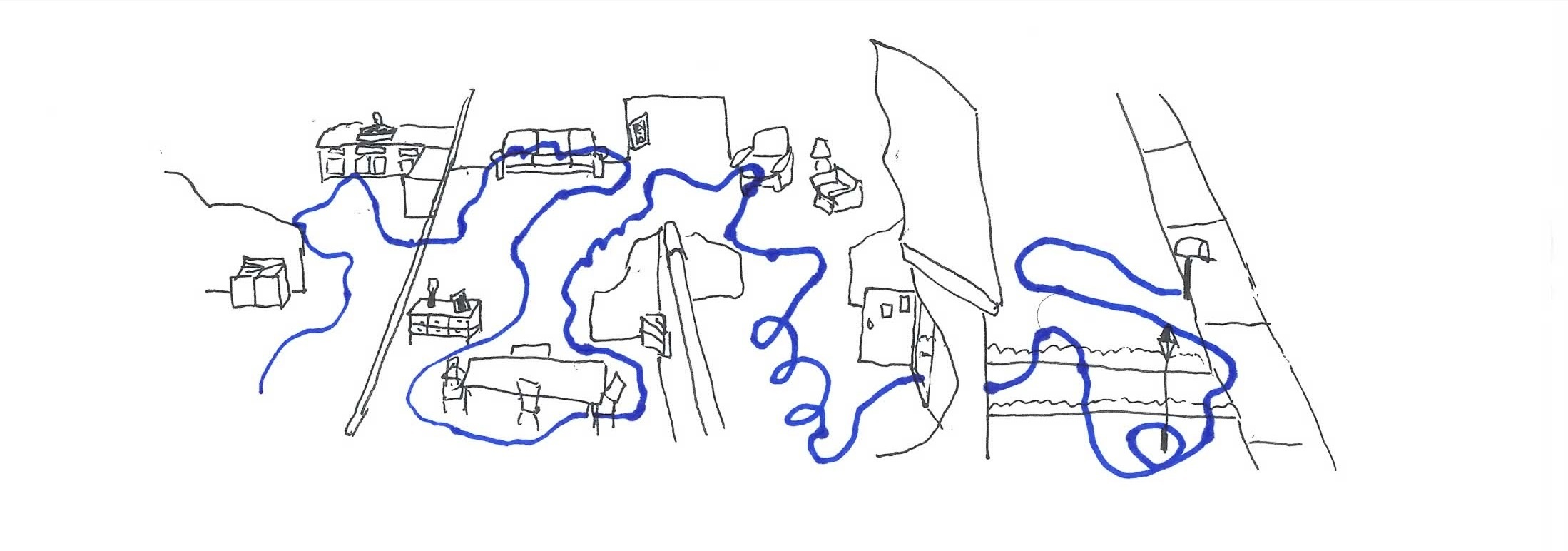

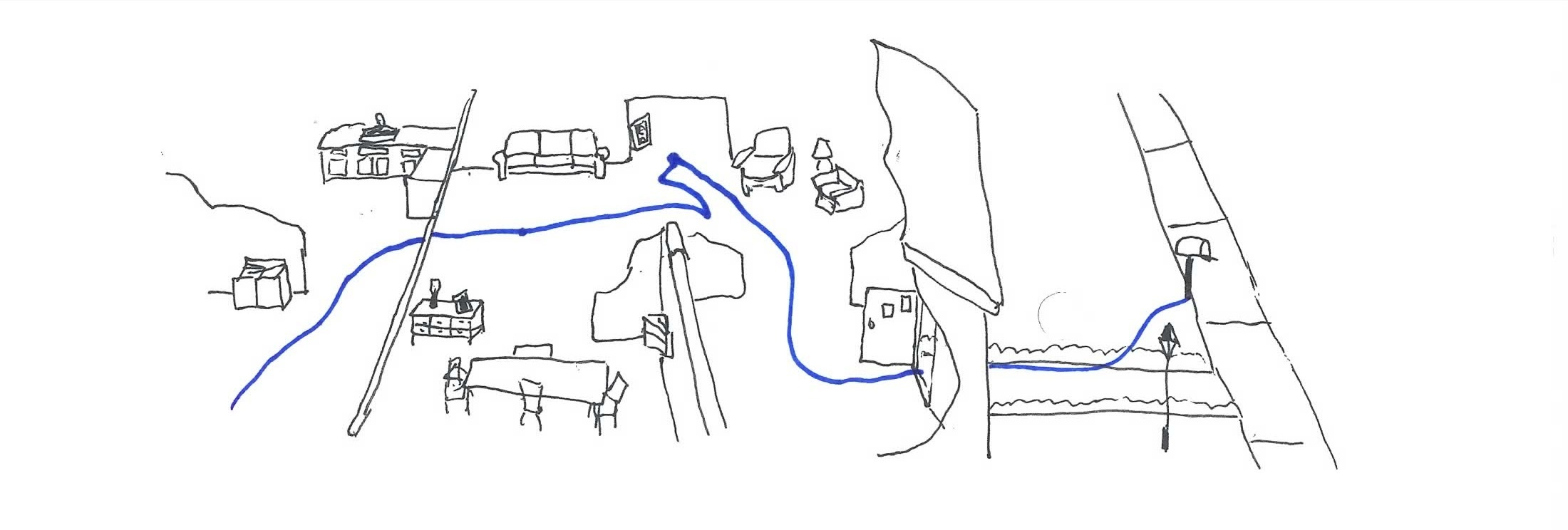

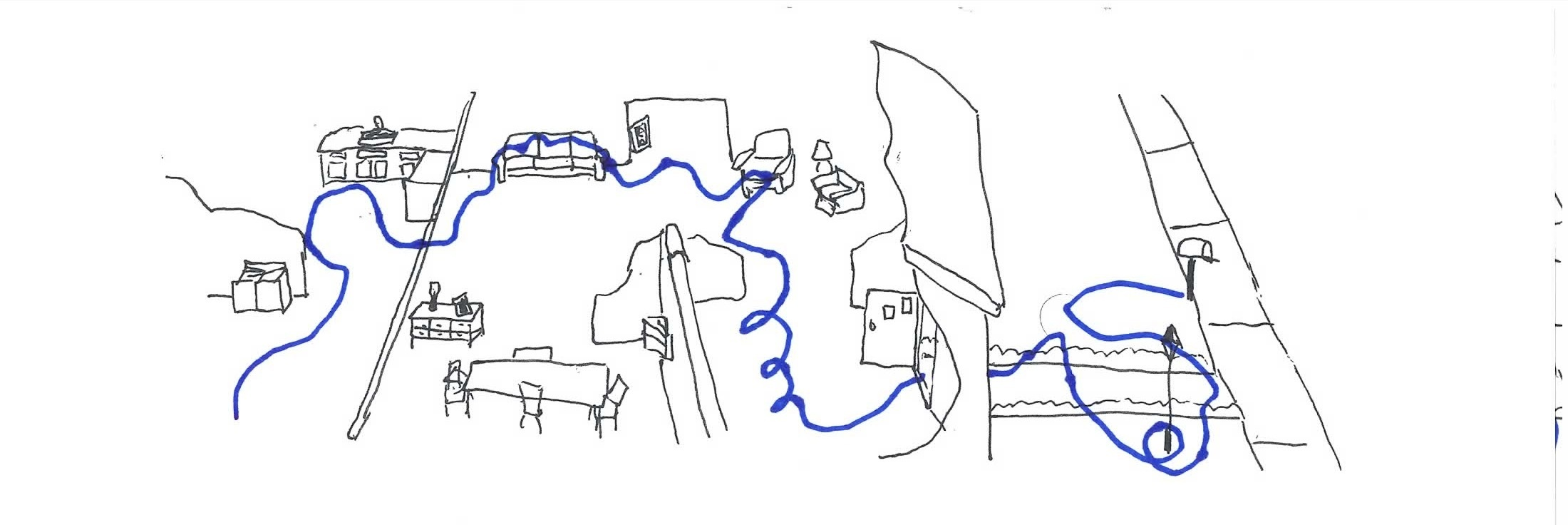

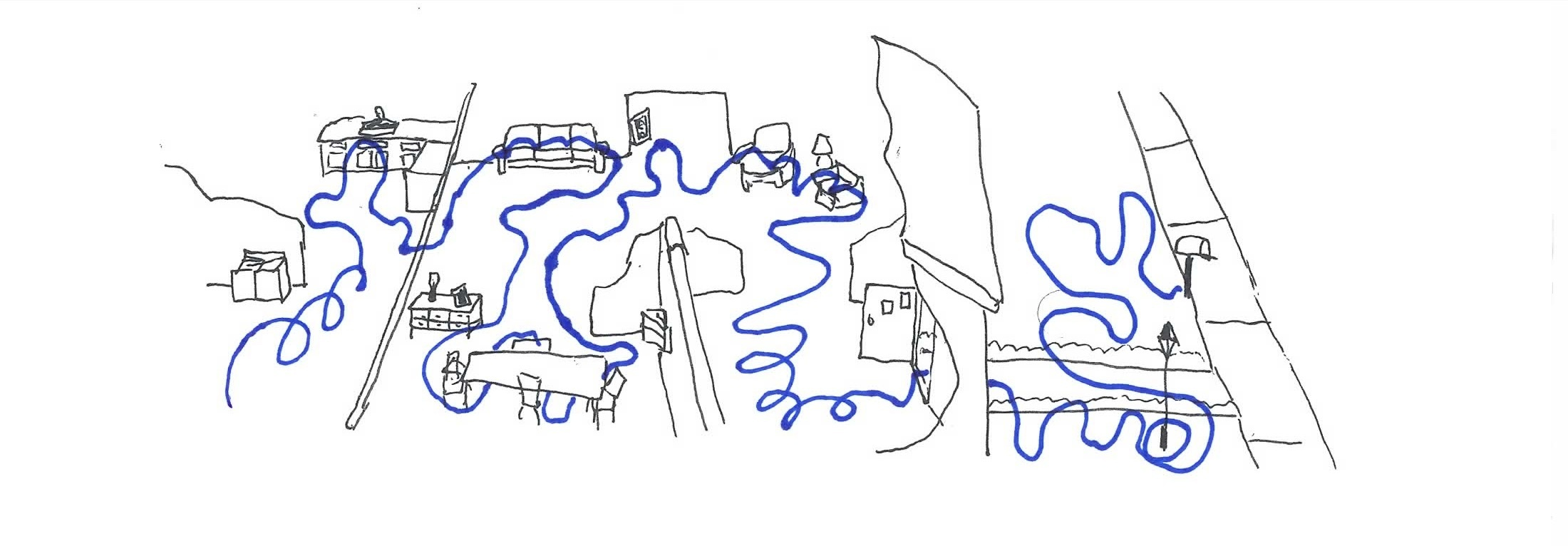

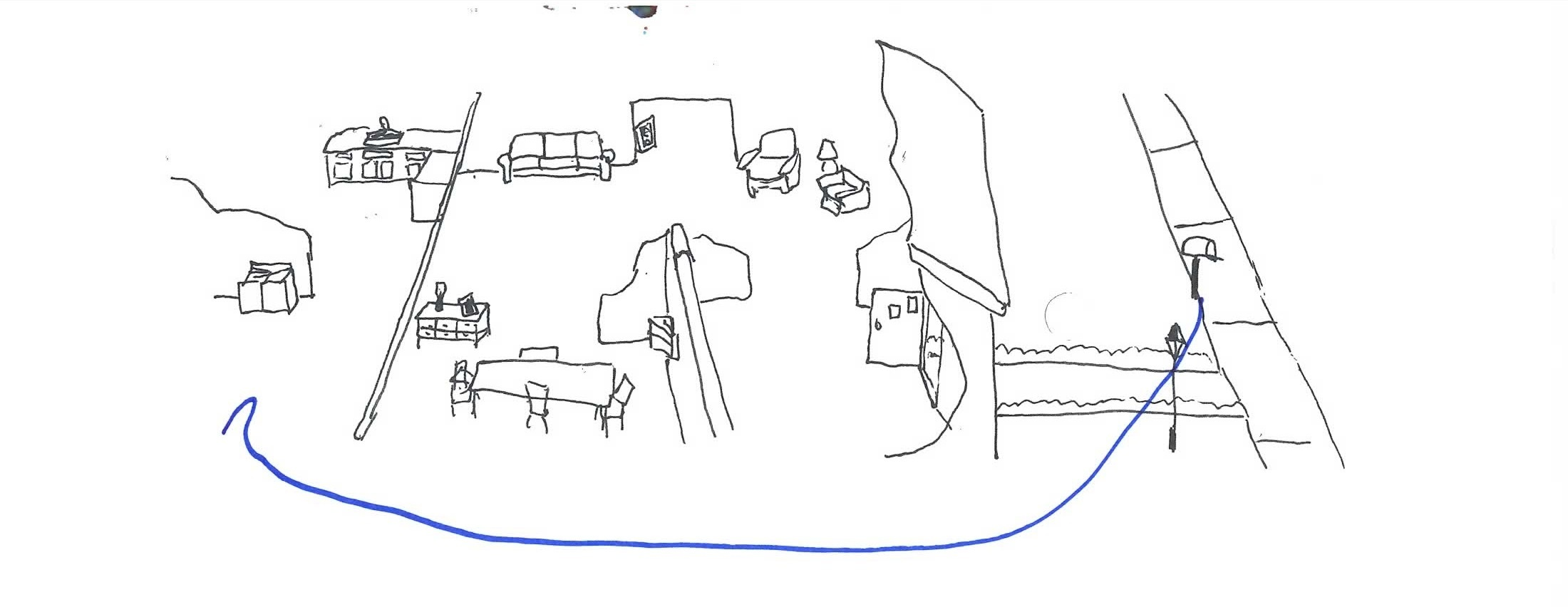

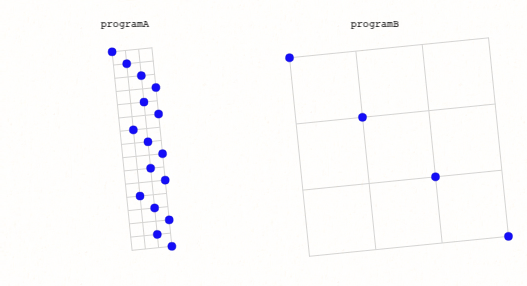

In this very simple sim every fish swims to the edge of the screen.

If every fish conforms every fish makes it safe:

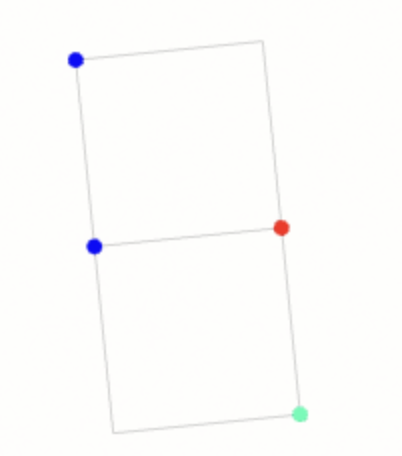

However, if even a single fish gets curious and goes in a contrarian direction, all hell breaks loose:

Takeaways

I have been a brash contrarian in my life. Perhaps too unsympathetic to those following the herd and/or attempting to guide the herd.

While I maintain that the payoffs in nature are extremely variable and warrant thorough exploration of ideaspace, I can see that perhaps I should strive to be a more courtesous contrarian.

To not be contrarian excessively just to practice being contrarian, but to only be contrarian for a few rare ideas, and courteously from the edges.

To explore contrarian ideas while respecting the schools who have gotten us this far.

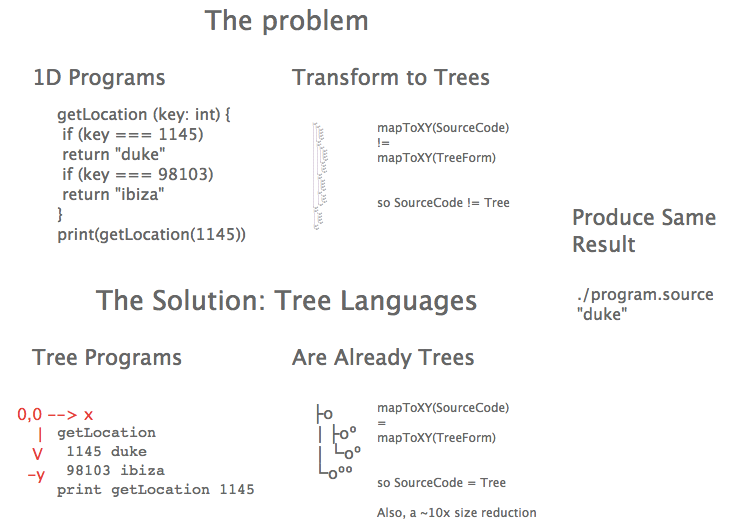

A language where all concepts are defined out of spheres with radii that accurately contain instances of the concept. In the simple prototype above, spheres are hyperlinked and clicking on one loads and zooms in on its contents.

Imagine a language where the only primitive is the sphere. Everything is composed of spheres. Spheres contain spheres and can be recursive. It's spheres all the way down.

Is this even possible?

So far it seems to me it might be, if you get the "Grammar of Spheres" correct.

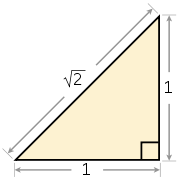

All spheres have a diameter. If you use Planck Lengths, base 2, you can use ~206 bits for the size property to cover every sphere up to the sphere of the observable universe.

Recursion is essential. A line sphere is a sphere containing two unit spheres, with a recursive line sphere in between.

Spin might be key (though it may be better to represent spin via an internal "spin state" sphere). A NAND gate could be 2 input spheres with a spin which touch an output sphere that only changes spin if both input spheres are going in the right direction. Numeral base systems could be represented with spin or internal state spheres.

Spheres would abut input and output spheres. A human sphere would have input sphere for oxygen, food, water, etc, and output spheres for carbon dioxide, waste, and so on.

Time spheres would contain spheres of a concept at different times. Ie., humans would have an embryo, infant, toddler, and adult sphere, among others.

Even ideas would be represented as spheres. Ideas would be spheres inside neuron spheres, perhaps.

I haven't yet been able to use only spheres to model electromagnetic fields accurately, but it feels doable.[2]

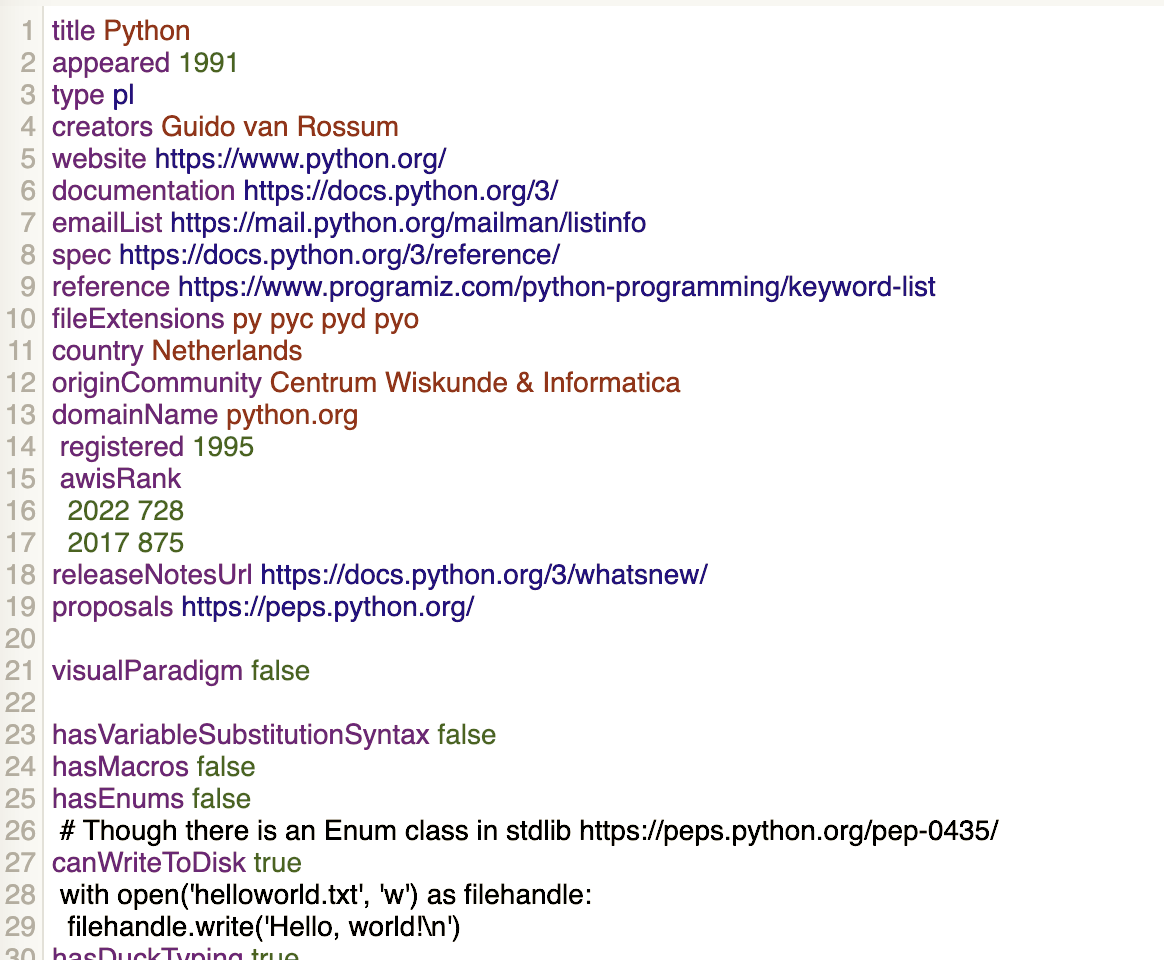

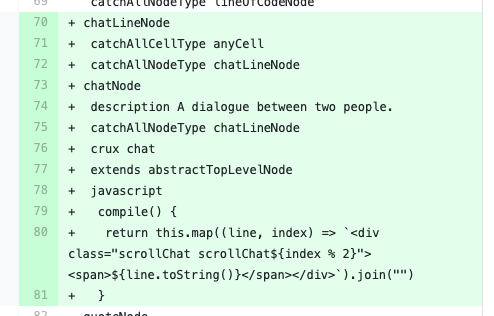

What would the source code for a Spherical Object Model look like?

It would be very similar to the DOM, with spheres instead of rectangular divs as the primitive.

The code for the water molecule above looks like:

waterMoleculeSphere

oxygenSphere

radius 1.5

x 0

y 0

z 0

leftHydrogenSphere

radius 1

x 1.5

y 1.5

z 0

rightHydrogenSphere

radius 1

x -1.5

y 1.5

z 0

Nailing the semantic details would be essential, but I think the standard language wouldn't look more complex than that.

What about the dozens of existing 3D languages[1]?

Existing 3D languages are generally domain specific (like in chemistry) or focused on representing the visuals of scenes, for film or video games, and not on the causal relationships between objects that this would be. The Spherical Object Model would be a domain-agnostic, general purpose language containing the spherical primitives that can model all the causal patterns in our universe.

Why only one primitive?

In my mind's eye I can instantly, and in parallel, draw spheres around the boundaries of all objects I see. It seems I can visualize hundreds, if not thousands or more, spheres at once. This parallelization capability goes away if I were to add even one more shape, such as the cube. I then need to consciously decide whether each object should be surrounded by a sphere or a cube, and it becomes more of a sequential process. It seems a single primitive allows for a massive parallelization speedup.

Of the various primitives, why the sphere, as opposed to the cube?

I think the sphere is the obvious choice, since it is easy to construct other primitives out of them, and in my experience spheres are far more common in nature than other 3D shapes.

Why not point clouds?

As far as I can figure, everything in this world needs to take up space. Spheres are points that take up space. As the bit is the primitive in one dimension, the sphere seems to be the primitive in 3 dimensions.

Why would we want this?

A universal spherical language would be grounded to reality in a way that no other language is. We could sort all concepts across all domains by their size and/or frequency in the real world.

It might take longer, at first, to build up a shared 4D encyclopedia, but the physical constraints from the 4D laws of nature would force it to contain a truth that can't be matched by lower dimensional languages. Just as a Mercator projection contains unavoidable bias, so does a flat encyclopedia relative to a spherical one.

It is extremely easy to build false models out of words, so switching to a well-designed sphere based language might be a radically better way to create and communicate true models.

Reader Comments

What do you think? Send feedback you'd like me to post to breck7@gmail.com, or add your comment by editing the source to this post.

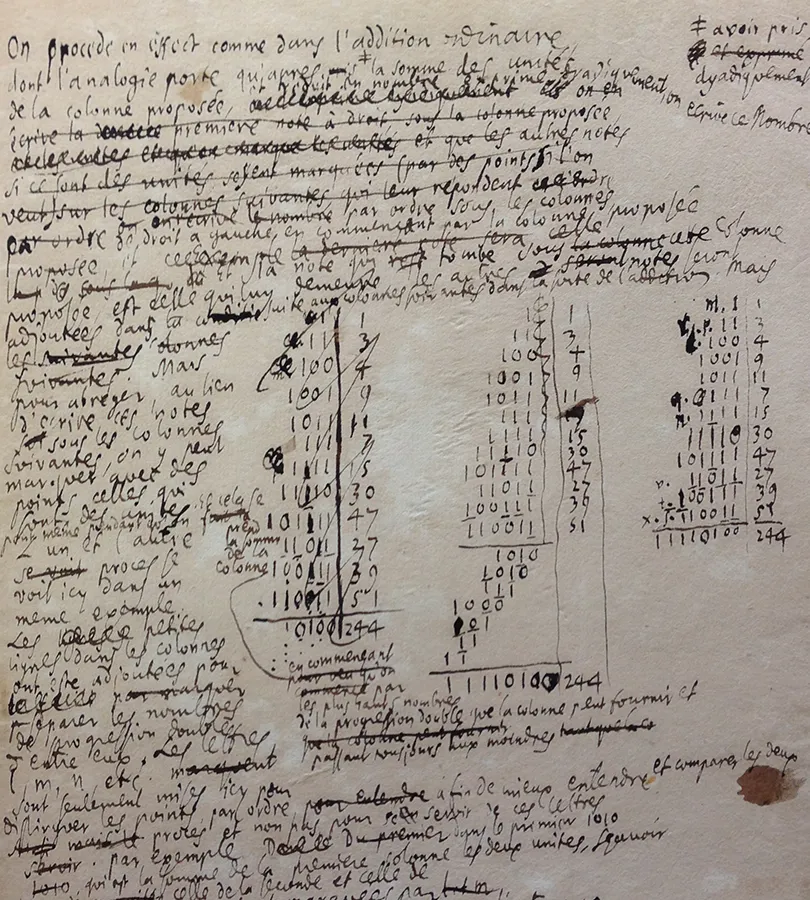

- A reader points out the relevance of Leibniz' La Monadologie (1714). I had overlooked that work by Leibniz before. I now have read this translation. Extremely interesting. The same reader points out potential connection to Tim Morton's hyperobjects.

- gwern points out the relevance of "epicycles/Fourier decomposition, Gaussian splatting". Playing with Gaussian splats in part encouraged me to try using spheres as the primitive. I particularly enjoyed this overview.

- A reader suggests the reason for spheres is they are "the most efficient container". Their perspective is its not spheres all the way down, it's pattern recursion, and spheres are just "the most elegant expression of those patterns". I'm not sure about the deeper idea, but I love the line about container efficiency. That is a good exercise to do: given a bunch of naturally observed patterns, see which primitive shape has the best efficiency as a container. Spheres seem like they would minimize the surface area of the containers and you also don't need to worry about orientation (just position and size).

- Stens points out the connections to BORO, an ontological method "grounded in physical reality ", which is based on the principle of extension ("taking up space").

- Bruce provided detailed feedback on all parts of the post, and pointed me to related topics like covering and tesselation and tiling. He pointed out the key question is where there is "a thinking advantage" to thinking in spheres.

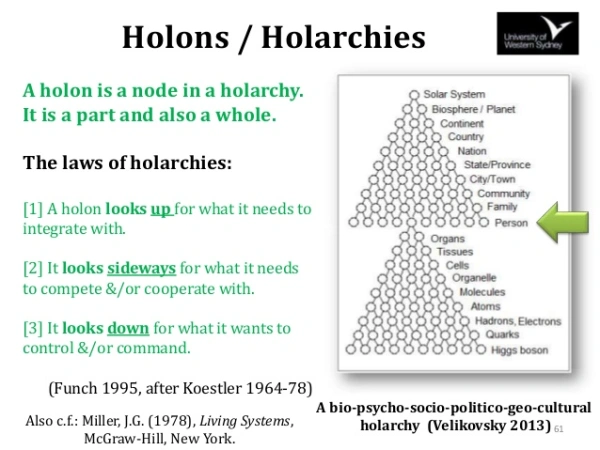

- Maciek pointed me to the highly relevant "holons" and "holarchies":

- A tangental note from Anton makes me wonder if angular diameter would be a useful primitive to keep in mind.

- Another way to frame this idea could be an attempt to come up with a simple grammar to allow the techniques of finite element method/discrete element method to be more widely applied. FEM is "the mesh discretization of a continuous domain into a set of discrete sub-domains, usually called elements."

[1] The list of 3D languages I've studied to date.

[2] It also seems like what I'm talking about is reviving Newton's corpuscular theory of light.

I just got back from the future where they had the most incredible device.

It was a large black tabletop that generated animated 3D holographic projections above it.

I interacted with it via voice. "Show me an apple", I said, and a floating red apple appeared.

Then I turned a knob left and the apple turned green and shrank and rose in the air and down came a branch and now the two were attached.

Then I turned the knob right and the apple went back to red and I kept turning and it turned brown and shrivelled and died.

I turned it back and then pinched my hands to zoom in and soon I could see cells and then I tapped on a cell with my finger and it zoomed in and I could see the organelles and I tapped further still until I was looking at the electrons and protons in the atoms of a strand of DNA of this appley apparition.

I then spread my hands and zoomed back out and got back to the branch and tapped it and now was looking at an apple tree and I turned the knob left and watched the tree fold all the way into a seed.

I had been so preoccupied looking at the center of the table that I had failed to notice a holographic human appearing to observe the seed.

I tapped the human and then the whole scene moved and he was now in the center.

I turned the knob left and watched as he shrunk down to a boy then a toddler than a fetus and embryo, the machine autozooming in as his size became microscopic. I turned back to the adult man.

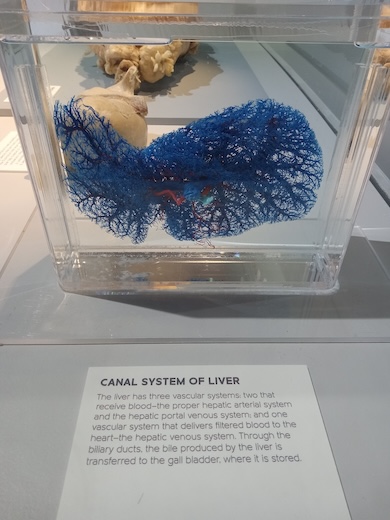

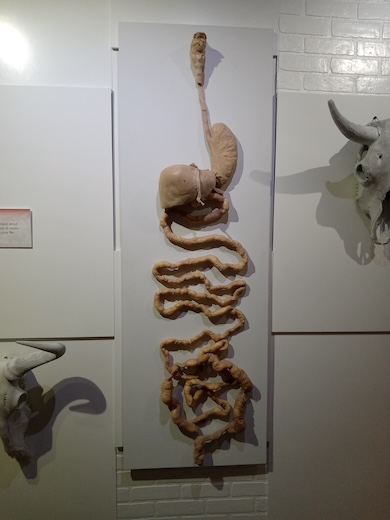

I then said "Show me the man eating an apple pie", and watched as a holographic apple pie appeared and the man used a fork to lift a piece to his mouth and then the simulated camera equipped with floodlights entered his mouth and we could see his teeth chomping the pie to bits and then the camera followed the crumbs down the esophagus and into his stomach and we could see acid breaking down the crumbs and transporting food molecules to the liver and the molecules entering the cells and then the mitochondria and then I finally said "PAUSE".

And then, "show me the apple pie being made from scratch".

And then I watched in awe as the scene rewound to the pie and then showed it coming out of an oven and then split into many scenes some showing the apples being gathered and others showing the oven being assembled and then others showing men sailing across the seas in wooden ships and then others showing tiny saplings spreading across the forest floor and then others showing clumps of cells joining together and then others showing cells eating mitochondria and then others showing asteroids pelting the earth and then fields of cosmic dust and then a beautiful bright flash.

Related posts

I just watched a great presentation from Jon Barron (of NeRF fame).

Deep neural networks can now generate video nearly indistinguishable from "real" world video and/or video generated from 3D engines.

Does this provide evidence that the 3D/4D world doesn't actually exist?

What is at the root of our conscious experience?

I can see at least 2 possible topological orderings:

4dSpaceTime

mind

4dSpaceTimeModelor

generator

mind

4dSpaceTimeModelIn the latter you are observing not a real 4D world but the generative output of some kind of network.

In (1) you have a 4d universe and light rays bounce around and hit observers who form a model of that 4d world; in (2) you don't actually have light rays or spacetime, you just generate signals to the observer's inputs and somehow we came up with the spacetime model.

When you turn your head, are you seeing new light rays or is the generator giving you simulated values?

These ideas are so far upstream they are probably unanswerable, so why think about it?

Well, I came across this talk while working on a new language for the 4D world.

If the 4D world is actually "fake", and the world is neural network generated, then that might be a waste of time.

For now I still strongly lean in favor of spacetime being real but at least I'm aware of another possibility.

- Juggling Ideas (2025)

- The Spherical Object Model (2025)

- Dreaming of a 4D Wikipedia (2025)

- Ford (2025)

People were surprised that AI has turned out to make information workers obsolete before laborers, but I'm not.

Information is the easiest job.

I laugh at the obliviousness of the photographer who snaps a photo of the Golden Gate Bridge and demands copyrights and royalties, as if that task was harder than the years of labor by thousands building it (some of whom lost their lives).

Information is the easiest job.

Who worked harder, the men that spent years leveling a path through the forest and mountains to build a road, or the guy who makes an SVG representation of the road for a digital map?

Information is the easiest job.

I enjoy thinking about information. I like to write. I like to find new ideas and digest them and rotate them and tear them apart and put them back together.

But not for a moment do I think information jobs are harder than the physical labor jobs I did in the past, or that others are doing all around me.

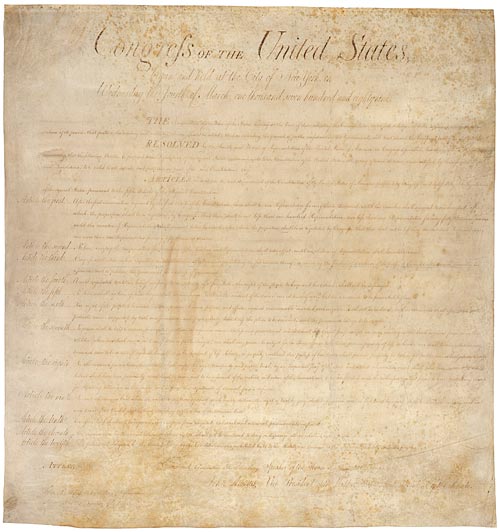

That's why I put out all of my work to the public domain. I wouldn't dare throw a "copyright" sign on my work, or a "license", and pretend like my job is so special that I deserve to restrict the freedoms of others.

The janitor does not demand royalties when I walk into a clean room; the plumber does not demand royalties when I flush the toilet; the electrician does not demand royalties when I turn on a switch; the furniture maker does not demand royalties when I sleep on a bed; the shoemaker does not demand royalties when I go for a walk.

Why on earth should I demand royalties when someone uses my outputs?

Especially since information is the easiest job.

I do want to get paid to produce solid information. I sell things of various sorts. I find information work that needs to be done and deliver. I push myself to always be improving my skills so I can make the best information I can.

But I don't expect the absurd salaries of the old days.

Why have information workers been paid so much, relative to other professions?

Corruption.

The people who make the laws (lawyers) are information workers, and so unsurprisingly they made unnatural laws to benefit themselves.

They made information jobs far harder than they should be. Instead of encouraging collaboration they encouraged silo'd work and unnatural monopolies.

The people who inform the public (medias) are also information workers, and so unsurprisingly they misled the public not to oppose these laws.

But now AI has come along, and has ignored these unnatural laws (and just trained on everything, ignoring the information laws us humans are shackled with), and shown what a farce these high salaries for the easiest jobs have been.

My advice to information workers is this: keep in mind that information is the easiest job.

If your job is information, do it to the best of your ability, like you would want anyone else to do their job.

Don't expect the monopoly salaries of old. It wasn't honest before and now the truth is harder to hide.

And please, do your best to publish your information in the most honest format: unencumbered by "licenses"; clean source code; auditable change history.

It's an easy job, but it's even easier if we all do it right, and work together.

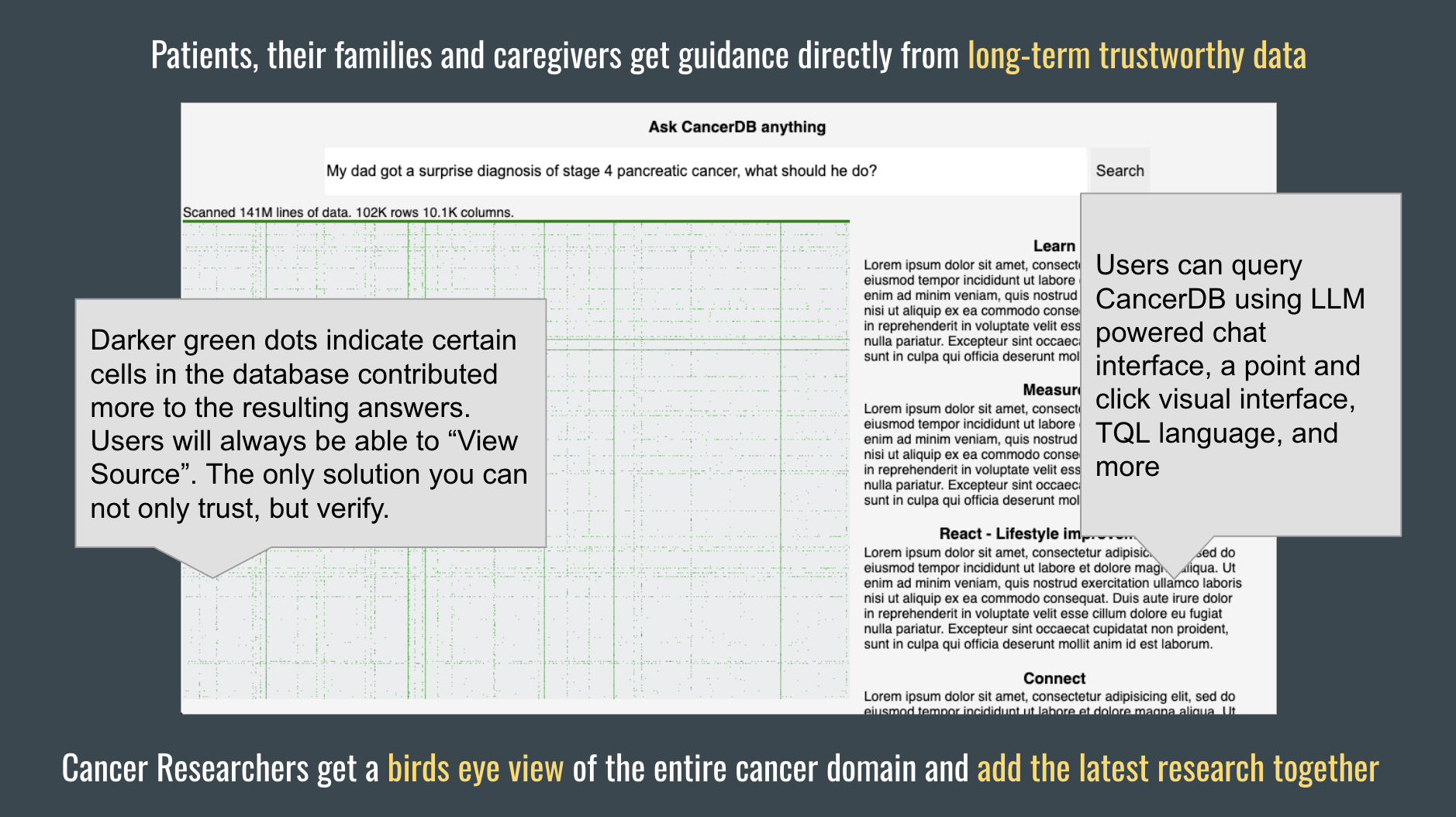

Deceptive intelligence is when an agent emits false signals for its own interests, contrary to the interests of its readers. Genuine Intelligence is one where an agent always emits honest symbols to the best of its ability, grounded in natural experiment, without any bias against its users.

Artificial intelligence is here and very powerful.

Will we have Deceptive Intelligences or Genuine Intelligences?

It seems to me closed source intelligences are bound to be Deceptive Intelligences.

Think about a closed system prompt or fine-tuning stage. It is extremely easy for a powerful entity (such as a government), to influence what happens in those stages, and thus quietly mislead the people downstream.

(It's interesting to realize that even before AI, we already had what served as a "system prompt", where the powers that be would apply hidden pressures to the major media organizations to generate news and media with specific slants.)

How is an individual to protect themselves against Deceptive Intelligences?

It seems to me like legalizing intellectual freedom and having powerful, fully open source, local AIs would help.

Would that be enough?

Sometimes I wonder if the inherent black-box nature of neural networks means there will always be a place for the DI to hide.

In that case the question is: is it possible to build a purely symbolic intelligence that could be competitive against neural nets?

A fully open and understandable (but massive), organization of symbols that provides the knowledge and expertise benefits of AIs but in a Genuine Intelligence form factor?

Symbolic AI was hot, then fell way behind neural networks, but neural networks have had a trillion dollars plowed into them.

Could symbolic AI make a comeback? If you plowed enough brain power and resources into symbolic AI (including using a lot of LLMs to help write it), could you make one competitive to neural networks and more of Genuine Intelligence?

Or is there something inherently worse about symbolic AI?

I'm genuinely not sure. :)

Why do I worry about this?

Simply because I'm a huge outlier in terms of amount of time I've put into studying and experimenting with symbolic technology, and feel some responsibility to help make it work, if it actually can work (rather than just go off and bow to our new neural network overlords :) ).

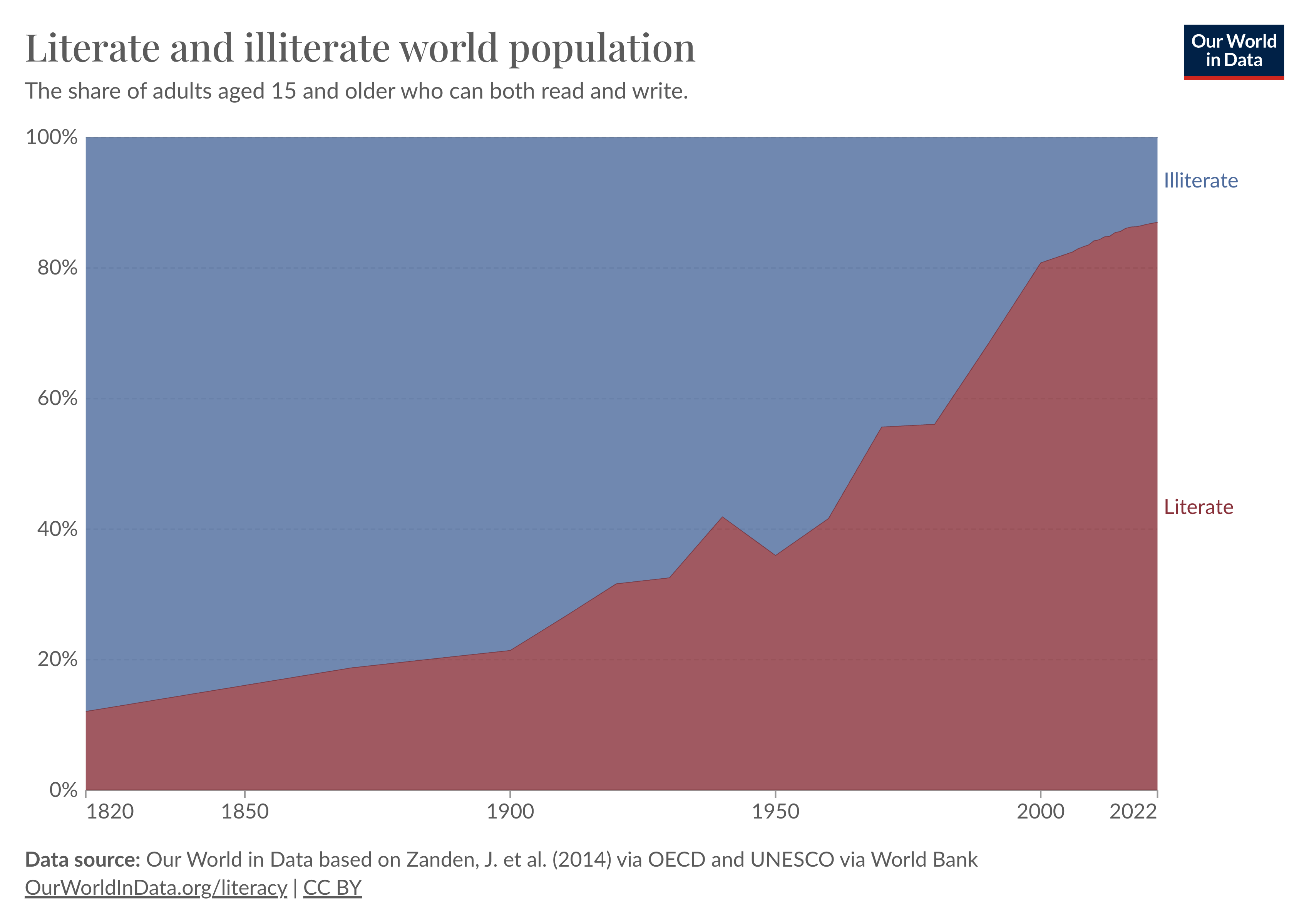

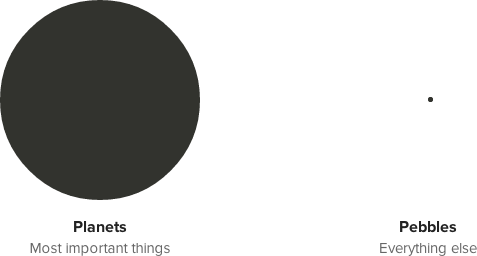

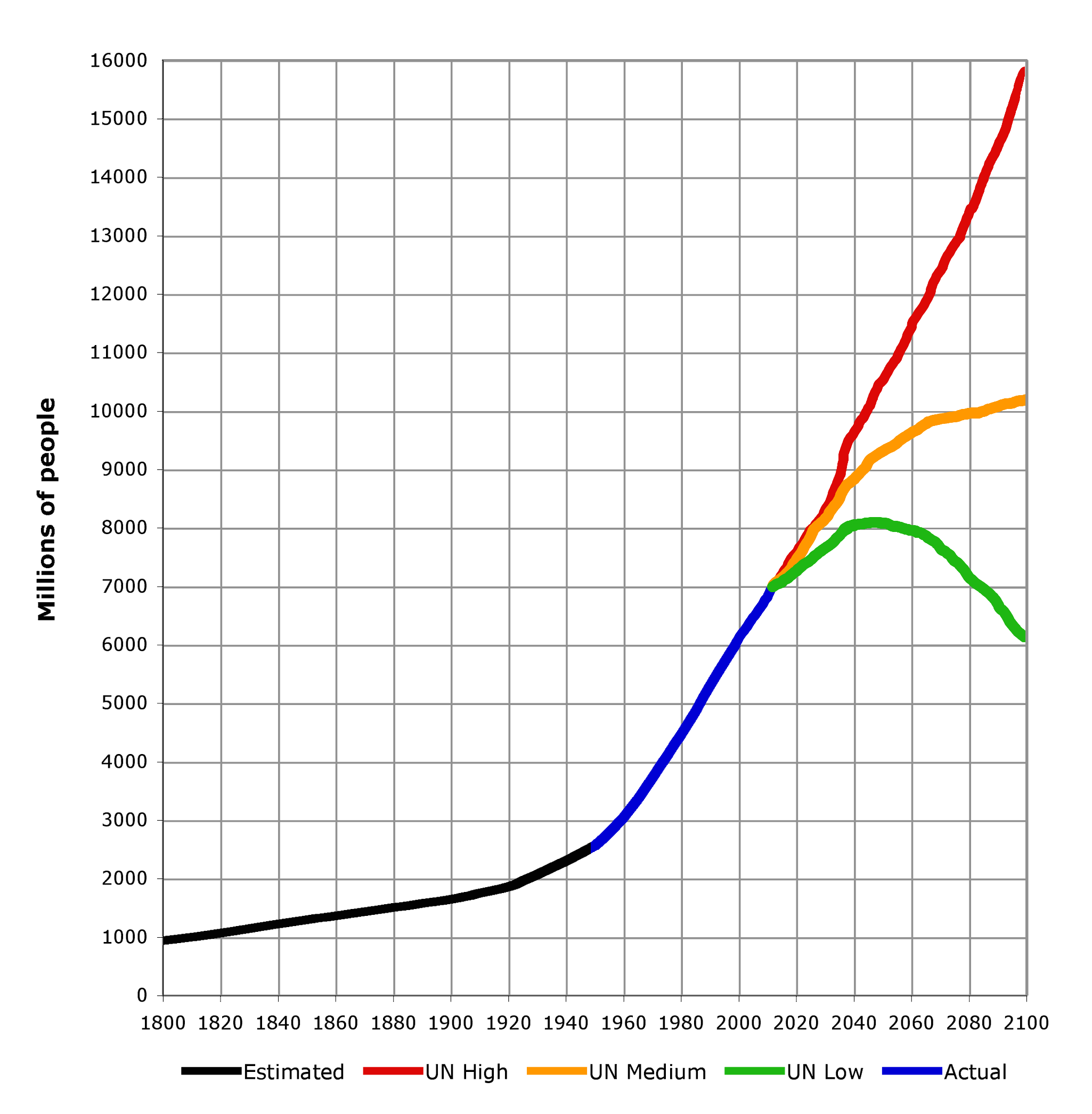

- Human population has grown exponentially.

- Words are 2D signals that can convey information about the 4D world.

- The maximum number of words generated per year is a constant times human population.

- Written words persist and so the maximum number of written words increases by maximum number of words generated.

- The maximum number of words a human can perceive per year is a constant.

- It follows from the above that humans perceive a decreasing percentage of the world's total words per year.

- Knowledge is words that make accurate predictions of many more words.

- Noise is words that don't predict many more words.

- Patterns in words are sometimes recognized and formed into knowledge words.

- The fundamental generators of the patterns in the 4D world seem to be static, but may not be.

- The absolute number of knowledge words increases over time.

- Knowledge seems to grow logarithmically.

- If one ruthlessly focuses on knowledge over noise, one may predict more of the 4D world than their ancestors.

Americans are guinea pigs in a huge experiment: we are exposed to more ads than any group in history.

It's not going well.

I say it's time for a new experiment: let's get rid of ads.

Here's how.

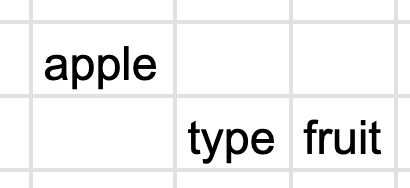

Information as apples

Imagine information as apples.

Ads are like those little round stickers stuck on the side.

Imagine it is illegal to remove these stickers.

Finally, imagine some stickers are poisoned.

This is information in America today.

Copyright makes it illegal for Americans to remove the stickers; to remove ads from information.

Get rid of copyright, get rid of ads.

(Or we can make content creators liable for any and all harm arising from their "content", including any harm coming from bundled ads.).

I was born in 1984. My daughters in 2019 and 2021. I would love their world to be better than the one I grew up in.

Information can nourish, or it can poison.

I would love my girls to live in a world with more sustenance, less poison.

Let's get rid of copyright and get rid of ads.

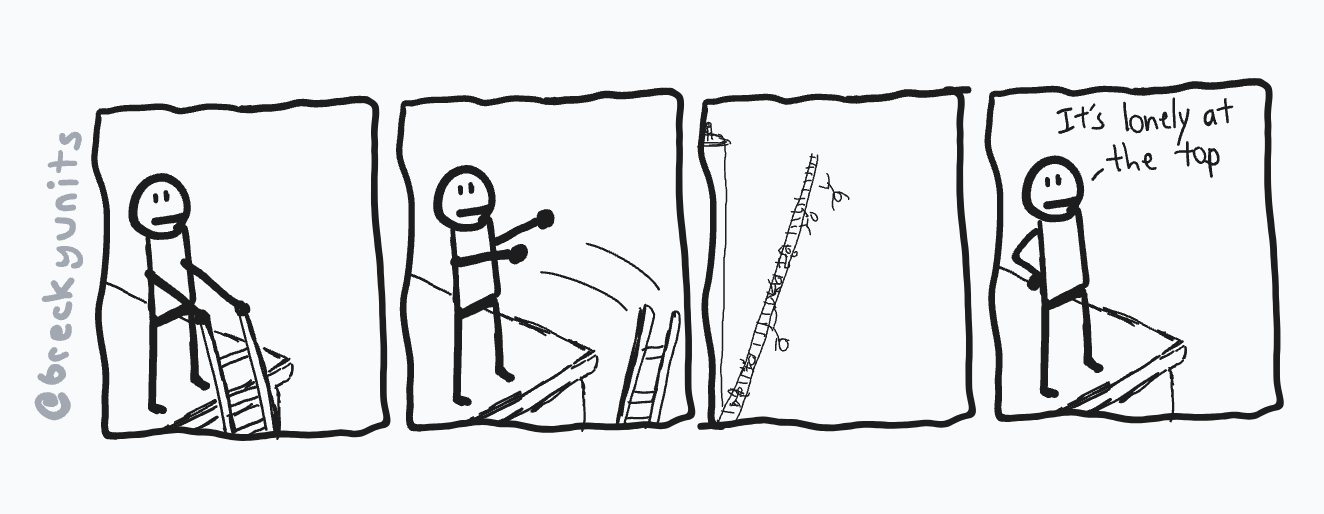

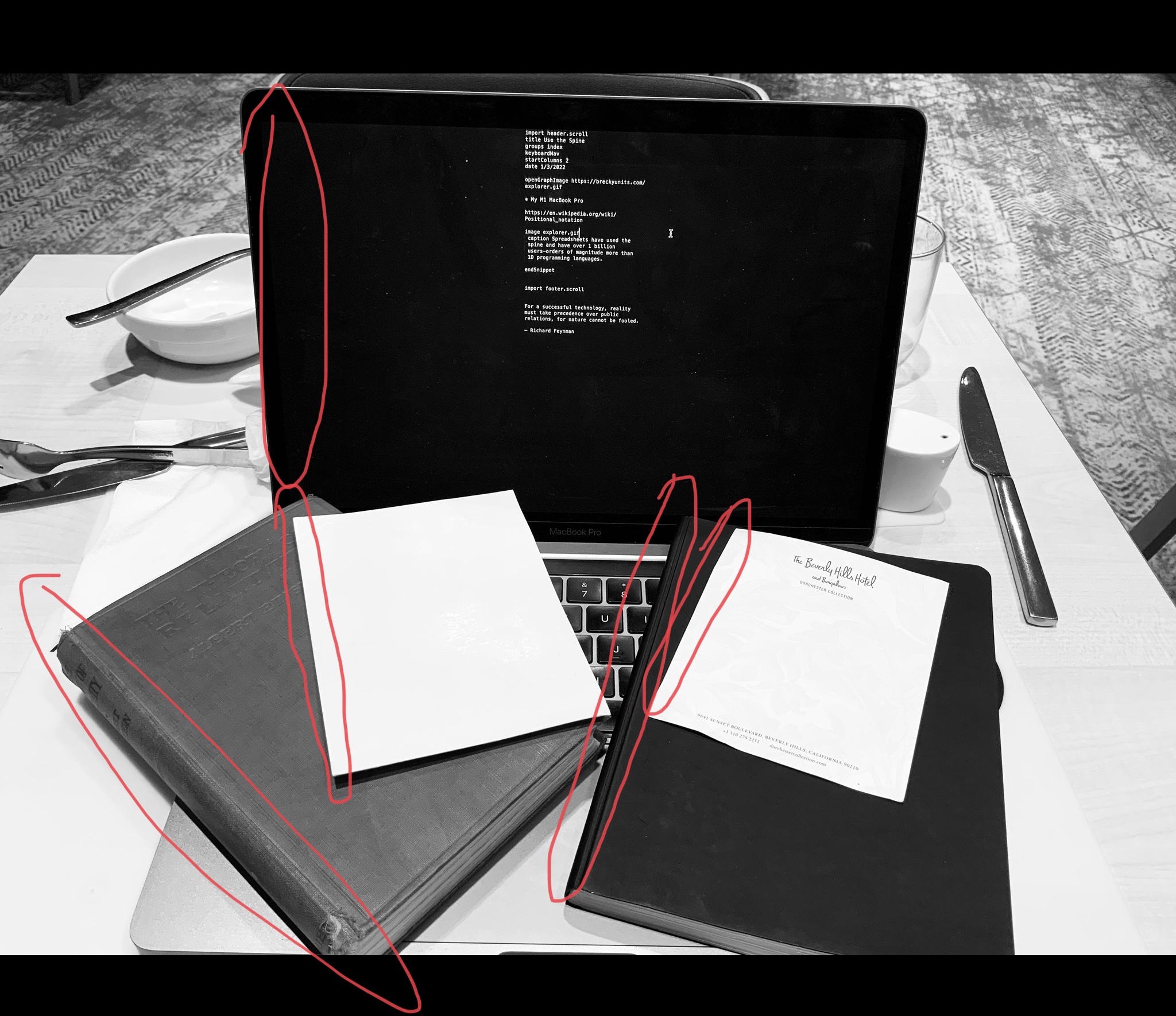

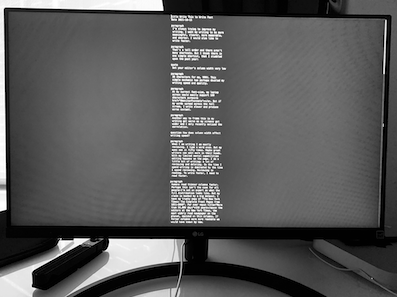

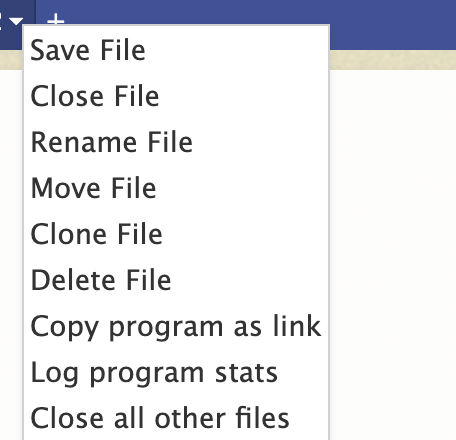

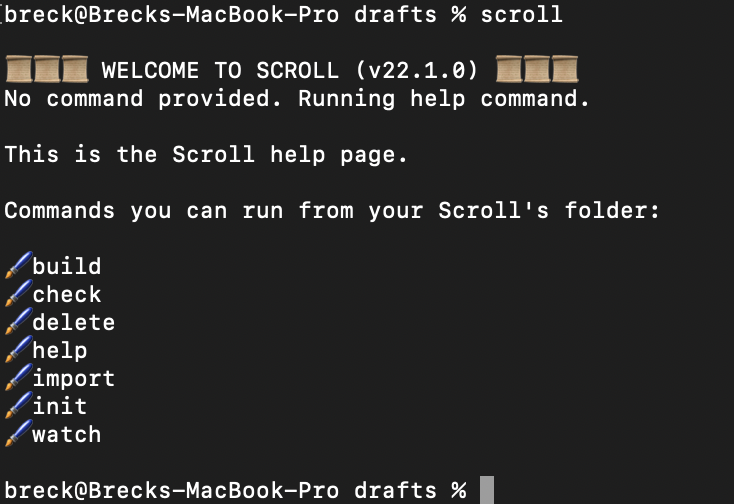

With Scroll I am trying to make a product I love and a product other people love so much it spreads via word-of-mouth.

Word-of-mouth spread! The holy grail of startups.

It has been harder than I expected.

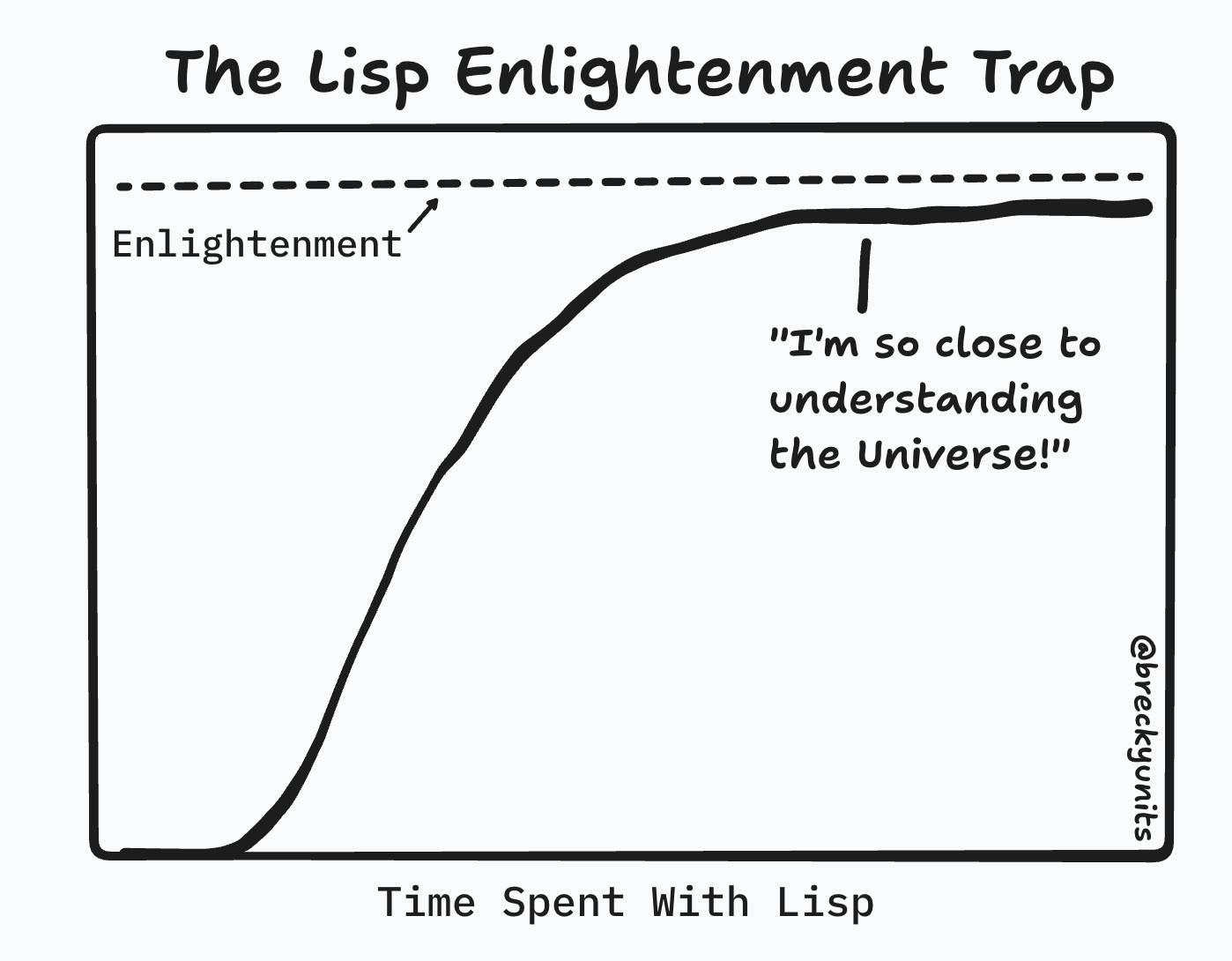

I think a cognitive mistake I've been making is comparing new versions of Scroll to older versions and not comparing new versions of Scroll to the best available alternatives in the market.

In other words, I keep thinking this new version is so mucher better (or has so much more potential) than the old version, so of course the old version wasn't good enough to have a viral coefficient greater than 1 (but this one will!).

I'll call this the Internal Comparison Trap. You feel like you're making significant progress because the new version of your product is significantly better. But the market doesn't care about internal comparisons. The market only cares about your best versus the market's best. And that gap might be as wide as ever, and widening the resource gap.

In most things in life it's healthy not to be comparing yourself to the market's best. Instead, be the best you can be-compete against your prior self! But when making investment decisions you have to compare your bet to the market's best. The market is very much winner take most. It's better to have a small slice of #1 than a big slice of #10.

Externally I see this playing out a lot with the various AI companies. If you look at each in isolation, they all seem to be making incredible progress. But if you compare each new release to the market's best, the improvements are often marginal or even non-existent.

It gets especially hard in a competitive market. At first you are on the low part of the S-Curve so it's relatively easy to make major jumps in product improvement. But then the improvements naturally get harder (technical debt builds up, for instance), and meanwhile you enter the ranks of the top competitors, who are innovating as hard as ever.

There are other ways to get word-of-mouth spread, other than having a breakthrough product. I think if you are financially disciplined with great customer service you can build a word-of-mouth flywheel machine.

But if your strategy is get ahead of the market with a better product, you have to really have a strong differentiator that the alternatives just can't provide.

I am really proud of Scroll and how much it has improved over the years. I think it's helped me become a stronger thinker.

But the alternative tools in the market have also improved a tremendous amount.

If Scroll is going to take the lead, it can't just keep improving significantly relative to itself, it needs to make a leap where it becomes significantly better than the market's best.

This post is written in Scroll, a 2D language.

I have a new language, a 4D one, I've been working on for over a year now. I call it Ford (get it?).

I don't have much to share yet but I wanted to write about it anyway. Maybe someone is working on a similar thing and would like to collaborate.

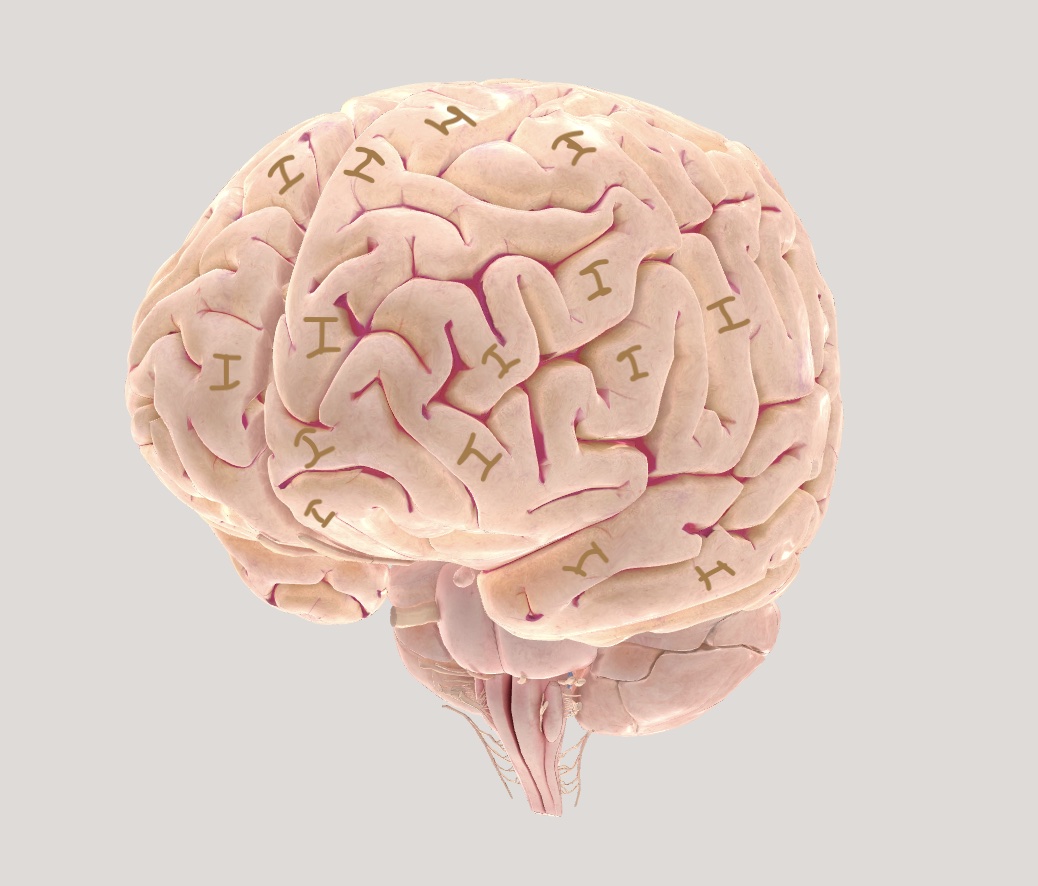

The brain is a 4D prediction engine.

All that is selected for is our ability to predict 4D.

4D patterns in nature can be folded into lower dimensions, like reverse-origami. Folding makes ideas easier to transport.

Writing is folding 4D into 2D.

Reading is unfolding 2D back into 4D.

A single word is a point representation of a 4D concept. Fire, for example. It unfolds to 2D then to 3D and 4D.

To fold concepts from 4D to 2D and back, there is an overall causal order, though parts can be parallelized.

All sensible symbols can be unfolded to accurate predictions of the 4D world.

Symbols that don't unfold accurately are non-sense.

(Symbols that purposefully perplex are not necessarily non-sense, if intended as art)

Our modeling engines are not infinite. Our brains have limits.

At each instant we expose ~200 million cells in our eyes to light.

Perhaps a number based on physical limits like that is the ceiling for the number of voxels we can model at once.

Perhaps the true number is far smaller.

I believe thinking about the 4D nature of concepts and the folds that best get them to and from 2D may lead to more useful 2D languages.

- Juggling Ideas (2025)

- The Spherical Object Model (2025)

- Dreaming of a 4D Wikipedia (2025)

- Does 3D exist? (2025)

Symbols can live more than fifty times longer than humans. They require almost no energy to persist, just the occasional refresh every fifty years or so to not fade. How do we talk about this space where symbols are popping up, fading or being replicated, on surfaces and screens? I suggest a new word: Infosphere.

The Infosphere refers to the collection of all man-made, public, persisting symbols.

The Infosphere is not the natural part of nature. The Infosphere is not present in the woods, or on the beach, or in the mountains.

Books, newspapers, magazines, televisions, phone screens, radios - these are the realm of the Infosphere.

Your eyes and ears breathe the Infosphere as your lungs breathe the atmosphere.

We depend on the atmosphere to live and we depend on the infosphere to thrive.

A century ago in the western world new industrial technologies polluted our atmosphere.

We largely fixed this, but now our infosphere is heavily polluted.

Information pollution is very real.

Earlier generations spent far more time in nature. It simply wasn't possible to be exposed to the infosphere so much.

Now the infosphere is increasingly omnipresent, and, as it is filled with toxins, the latest generations, including mine, are the most lied to people in history.

You wouldn't want your children to breathe toxic air, why would you want them to breathe toxic information?

What is the source of these toxins?

The infosphere can nourish or poison, instruct or distract.

You would think our laws would be designed to encourage the former and discourage the latter.

But it is the opposite.

The primary source of infosphere pollution are those unarchived symbols that we are not permitted to adjust.

We have made widespread a thing called "copyright" law. What does this do?

It orders that new symbols be frozen for over 100 years.

It pretends that this would lead to a healthier infosphere, but any thinking man can see how clearly false this is.

Ideas work best continually refined. Freezing ideas is nonsensical.

No honest scientist or engineer would ever say their symbols are perfect. Instead they constantly strive to correct them, iterating upon them until they die, and hoping that future generations will continue to iterate upon them after that. The scientist also publishes their work for all to see and learn from. Meanwhile, the copyrighter demands payment before one can see their work, then puts out dribble after dribble, and claims their symbols are immune from defects, and must not be altered without permission for over a century.

Which symbols do you think are healthier for the infosphere?

Nature provides incentives for improving the infosphere. Copyright provides incentives for polluting the infosphere.

Believing one can centrally set the optimal "term limits" on information is as naive as believing one can centrally set prices.

Free markets would far more intelligently organize the infosphere.

The information filter strips toxic information, such as orthogonal advertising, from the infosphere.

But information filtering is made illegal by copyright law.

As a result, toxins are incentivized and linger.

Advertising, the mixing of undesired symbols with desired ones, is like an industrial age smoke stack spewing soot into the air.

Advertising pollutes our infosphere.

Honest advertising needs no captive audience or copyright protection. Thus, most in the advertising business are liars.

Liars pay top dollar for ads. Often the purveyor of copyrighted materials tries to pretend they are in an honest business but their material is bundled with advertisements for dishonest products.

When you see "Our business model is advertising" you should interpret as "Our business model is lying."

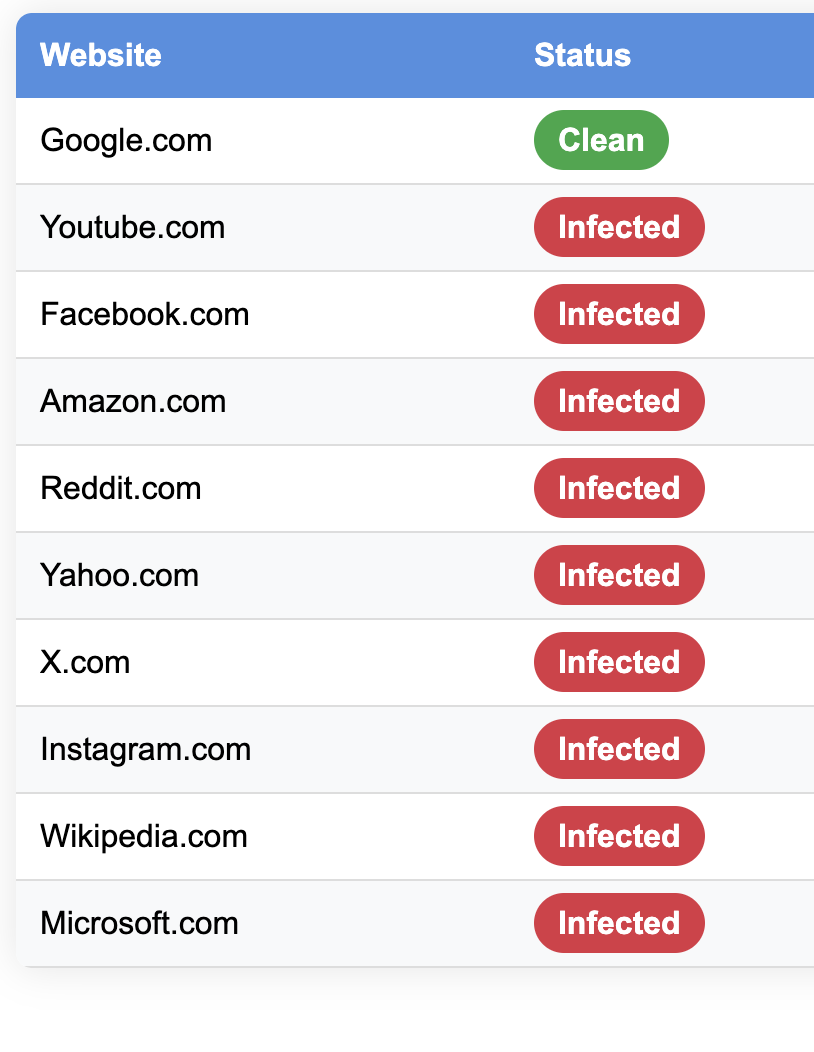

What kinds of information are we most exposed to?

Are we exposed to high value, instructive information, or are we exposed to information trying to distract and mislead?

I often find high quality information buried under mountains of junk. We are exposed to the information on top, while the truth seeker has to go digging.

Sadly people are not allowed to filter the junk for others and so each one must waste their time doing so (or more commonly, give up and just accept what they are told).

What is the harm of a polluted infosphere?

Significant time wasted, for one. When one man's time is wasted, everyone is worse off.

But it does not stop there.

We saw in 2020 people worldwide held prisoner in their homes, ordered to cover their faces, getting forced injections, all uninformed orders made possible by a toxic infosphere.

There are countless Salem witch trials going on right now for conditions that don't exist; immunizations that doesn't immunize; medicines that don't heal.

An unhealthy infosphere is as deadly as an unhealthy atmosphere.

We have recognized that we need to take care of our atmosphere.

Similarly, we need to take care of our infosphere.

I believe learning through motion-through conducting physical experiments in the real world-is vastly superior to learning through reading.

But the number of experiments one can do is vast, with some being far more informative than others, and time and resources are limited. Reading can point one to the motions-the experiments-that are most informative.

Thus, the most valuable symbols are the ones that guide one to conduct the most useful experiments.

Lately I've been figuring out how to put all of science into one file. I think the file would largely be experiment after experiment after experiment. Each one, the near minimum number of symbols to communicate the easiest experiment that can be done to cause learning the next most useful set of patterns about the world.

Pretext is all the words, all the definitions, all the patterns not in the text but that the text depends on. The texts that the author possesses and the reader requires.

Texts are far smaller than their pretexts.

The text E=mc² is short; the pretext long.

Texts are like the tip of the iceberg. But that's overselling texts, for the tip of the iceberg is 10% of the thing, whereas texts are far less a percentage of the text+pretext combination.

Perhaps the better metaphor is that texts are like the tip of a new Hawai'ian island poking above the surface, built on an Everest-sized mountain underneath.

The Pretext Button

We can build a machine, a function, that generates the pretext for a text.

Generated pretext would help find logical mistakes and/or experimental holes.

Pretexts would also give us measurable quantities. We could compare the true size of text A and text B by measuring both with their pretexts.

What should be in the pretext?

- Every word should be declared

- Every word should be grounded

- Every word should be defined

- And the same for multiword constructs

- The text should be fully parseable by the above

I'm excited to work more on pretext. Should have some demos soon.

The doer alone learneth.Attributed to Nietzsche

I wish I spent more time moving atoms and less time moving symbols.

I enjoy reading and writing, but I do more of it than I'd like.

I overinvest in symbols out of duty. A small group of us stumbled upon the truth that our society has gotten the law wrong on symbols, and as a result the infosphere has become heavily polluted.

In an ideal world, IP law is deleted, the toxins are filtered from our infosphere, symbols become far more signal than noise, and I can put more time into doing things that require more motion than the pressing of keys on the keyboard or dancing a pen across a page.

Symbols, done right, are an extremely useful tool.

But only so far as they can improve the models embedded in one's brain.

The latter is the ends, the essential. The former is just a means.

The mental model is the meal, the symbols are the kitchen. Humans don't need kitchens, but do need meals. One is absolutely essential, the other is just a means to the essential thing. Kitchens are awesome, symbols are awesome, but there is a huge difference between something essential and a tool for making the essential thing.

The Best Symbols

The best symbols are those that transmit reproducible experiments that someone can do to learn highly predictive models of the world.

It is not enough to just read the symbols, you have to do the movements to learn them. The symbols are merely a guide.

When some symbols can lead you through some motions that then unlock in you vast new predictive powers, that is symbols at their finest.

I wish I could snap my fingers and get people to see what I could see. A world with a very different infosphere. New mediums beyond books and papers and shows. Where symbolic products are published in a way to be easily accessed and shared and combined and refined and digested. An infosphere not overwhelmed with noise, not constantly trying to steal your attention. An infosphere that serves you, not tricks you.

It is far more rewarding to make things with atoms than symbols. Atomic creations are the things to be most proud of. The symbols on the screens should help us move and produce more, rather than sit and consume more symbols.

Years ago my smart phone broke and I did not replace it. It was a life improvement.

My laptop is nearing 5 years old. It too may soon break. I would prefer not to replace it.

I hope the truth will be spreading by then.

We need far fewer symbols. We need signal, not noise.

When the facts change, I change my mind. What do you do?Attributed to John Maynard Keynes (1932)

A strong thinker can explain their position and imagine new facts that would flip it.

Facts don't always change. Nature grants us some stability in her laws.

But they can always change. Our models are always downstream of measurements.

Imagining new facts that could cause you to change your positions makes you stronger.

Not only does it make you less likely to invest in wrong positions, but it will teach you how to better understand (and potentially alter) the positions of others.

Positions are built on models built of blocks

On topic T, my position is P.

My model of T is M.

M is built of blocks B[].

What changed blocks CB[], would cause me to flip my position P?

Sales

What I'm talking about here is also very similar to the problem of sales.

In sales you are listening to the potential customer to understand their model of the world, and then giving them truthful block changes that will update their model so that their position P becomes "buy".

Listening

To identify mind changers one needs to be know what the current model of the world in the mind is.

If you're trying to identify your own mind changers, writing helps.

If you're trying to identify the mind changers in someone else, listening.

Subconcious

What might cause someone to change their mind on a position P might have little to do with the symbolic model you're able to dig out.

It might mostly be due to subconscious blocks.

Perhaps a person says "Y" would change their position to P', but then what actually changes their position to P' is something totally different (like a pretty person pitching P').

Selling Out

The surprising thing is not that every man has his price, but how low it is.Attributed to Napoleon

In writing this one unexpected thing I realized is how easily and near universally even my positions are vulnerable to incentives.

I don't think you could pay me a price to promote a position I believed to be false, but you could probably pay me a (high) price to not promote a position I believed to be true.

In other words, my voice is not for sale, but my silence might be.

Why is this?

Simply because I have multiple things I care a lot about, I know my time is limited, and so working on certain things always comes at the expense of working on others. So if someone pays me $X to not promote opinion Y, but that allows me to promote 3 opinions that I care about when I otherwise might only be able to promote 1, I can see that is a deal I would be willing to consider.

So the block "suddenly I'm being paid not to promote P" would not get me to 180° flip my position P, but to at least rotate it 90° and go passive on P.

No one is currently paying me not to promote ideas (unfortunately?), but I think this is pretty widespread.

Some claim a lot of advertising in our world today isn't selling products, but buying silence.

Appendix: Practicing what I preach

Below I practice looking for mind changers on three of my most contrarian ideas.

What are some block changes that might cause me to reverse my contrarian position that IP laws should be abolished?

I don't think any of these would ever happen, but I guess my mind would change if reproducible data showed conclusively:

- Concentrating power into a tiny sliver of humanity, the "innovator class", creates vastly better innovation than diffusive innovation

- Without IP then the class of people adept with symbols are inevitably and hopelessly enslaved by the property controlling class

- IP allows better information control and without it there is inevitable weapons proliferation and civilization-ending violence

- Without IP information sharing plummets and information sabotage reigns

- Without IP for whatever 2nd order reasons we are no longer able to build such collabortive wonders as microprocessors

- If AGI arrives and can create so much great stuff on demand that IP laws become completely irrelevant

Again, I would be extremely surprised by data showing these things, but these are the types of things that might cause me to change my mind.

What are some block changes that might cause me to reverse my contrarian position that Scroll is a language worth strong investment?

- Putting time into improving Scroll often leads me to feel like I'm becoming a stronger thinker, so if that stopped being the case, I could see that.

- I definitely believe "thinking in 3D/4D", is 100x better than 2D thinking, so I could see if some type of 4D or 3D symbolic language were invented, that Scroll would become a much less important investment.

- Although I think Scroll is the language currently most alligned with LLMs, something new might arise significantly more aligned with them, which would make Scroll irrelevant.

- If the potential applications of Scroll to government and commerce were shown to be irrelevant, then it might show that the Metcalfe's law effect I predict will eventually come to Scroll might never happen.

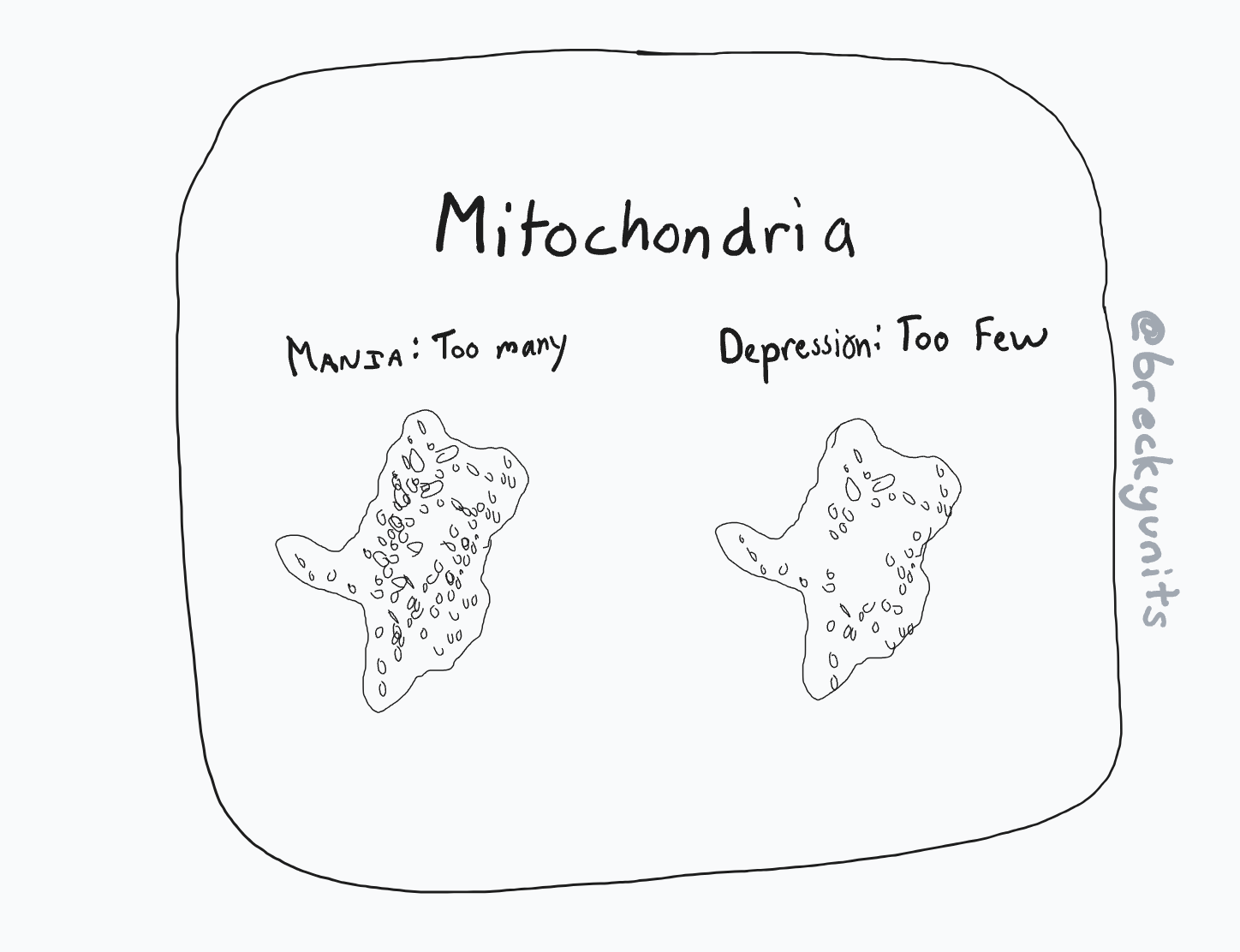

What are some block changes that might cause me to reverse my contrarian position that mitochondrial populations are the root cause of human energy disorders?

- If it's shown that there is no difference in mitochondrial populations in the same human in different energy states

- If a wholly different biological model arises, perhaps with a different organelle, or that looks at things very differently (perhaps with some type of field theory), that explains things better.

In 1976, Bill Gates wrote an angry letter to computer users saying "most of you steal your software".

BASIC is a language created at Dartmouth in 1964 by John Kemeny and Thomas Kurtz.

To celebrate its 50th, Microsoft released the source code to their first product, Altair BASIC, written in 1975.

The 157 pages of source code contain zero mentions of Kemeny, Kurtz or Dartmouth.

What Science May Be

by Breck Yunits

We can embody all of science into a single fully connected text file.

Scientists would contribute blocks to this file like they now contribute papers to journals and datasets to databases.

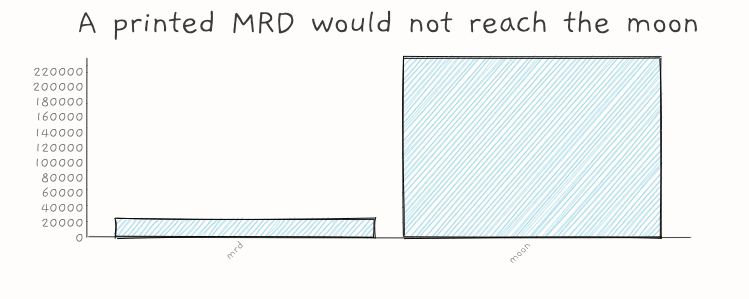

I estimate all of science would fit in 1 billion pages. If printed this would extend from here to the Moon.

This file would allow everyone to get state-of-the-art, logical answers to any question by querying every scientist, living and dead, all at once, on their own machines.

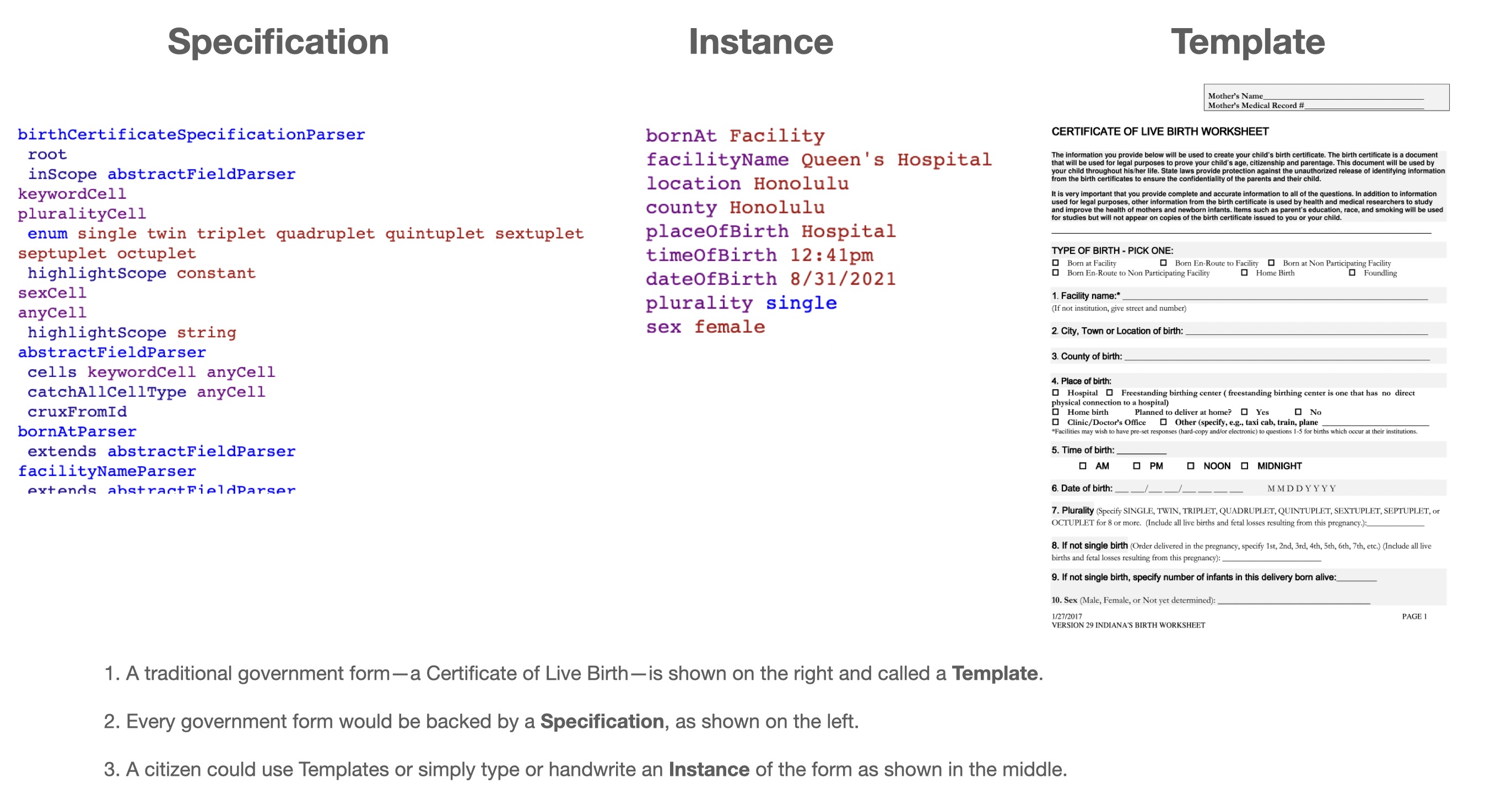

How do we connect every word? A new language consisting of a simple universal syntax that denotes words and recursive blocks, along with type definitions called parsers, creates an invisible wired grid. A Reader moves down the blocks of the file, encountering new parsers or pattern matching words and blocks to existing parsers, and then loads blocks into memory for later computations.

All words are typed and the file is mostly structured data, with exceptions for sections of visual and free-text data, called groundings.

This file would be topologically ordered, meaning concepts are defined only out of already defined concepts. For example, addition and uncertainty would be defined before quantum mechanics.

Experimental procedures and actual scaled measurements would be found early and often, as reproducible experiments are the bedrock of science.

To attract the huge energy required to build this file it must deliver value far before it is near complete. Domain specific sections of the file can be built separately in parallel and deliver immediate value even before integration. The tools needed to build this system are also useful for more down-to-earth tasks and these use cases could attract the energy necessary for the moonshot. For example, the same software needed for this file could also power simpler and more trustworthy blockchains.

Decentralization and forks would be encouraged and often merged back, and may on occasion lead to fracturing and different schools of science.

Believers in this system would want Freedom of Science laws that would protect the rights of individuals to create, improve, and share these files.

This system might be built by ten million scientists contributing an average of 100 pages each, or perhaps a far smaller team utilizing AIs.

Think of these files as a new medium beyond articles, books, databases, encyclopedias, wikis, and LLMs.

The three major alternatives to this system, in order of connectedness, are libgen, which metaphorically glues all liberated books and scientific papers together at the edges with no integration; Wikipedia, which contains a shallow but broad collection of concepts with weak integration; and deep neural networks, which turn libgen, Wikipedia, and the web into an inscrutable matrix with incredible generation capabilities that strongly suggest embedded logical understanding (but also high energy requirements and hallucination tendencies).

This system differs from previous expert symbolic AI systems in the design of its language that allows it to scale to all of science.

This file would instantly reveal what science knows and does not know; what concepts are needed to fully understand other concepts; what experiments one can do to verify key concepts; and what the actual contributions of new research are, measured in line changes.

In its transparency, simplicity and minimalism, this file would make science a physical thing that people could hold and trust.

A number of developments have made it more feasible than ever to build this system now, but the value this system would provide to humans is timeless.

The smallest improvements, compounded, have the biggest impact.

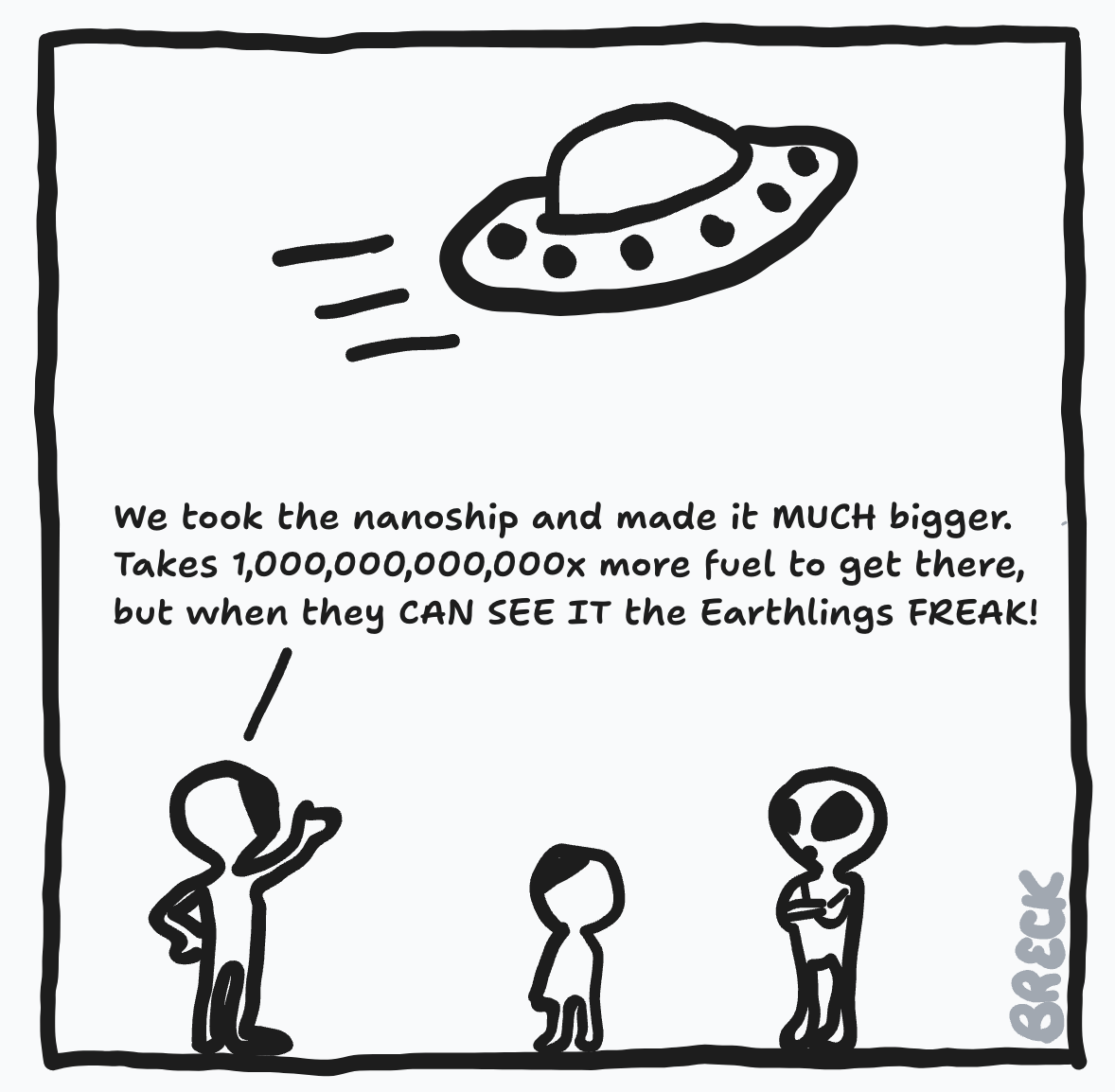

In biology, if it wasn't for a tiny dividing nucleic-cell pairing with a tiny dividing proto-mitochondria bacterium 2 billion years ago, we would not be here.

In computing, it's been the nanoscopic improvements to the transistor which have caused the biggest changes to the world in the past 50 years.

Let's call these things nanoideas.

It follows from this that if you believe a big impact is needed you need to look not for big ideas but nanoideas-the smallest ideas that compound.

To find better nanoideas as yourself questions like:

- Is there a version of this idea that's even smaller?

- Does this idea compound? How?

- How fast will this idea compound?

List all of your ideas and go into detail on their size and how they compound.

Biological viruses are nanoideas.

Physics is full of nanoideas.

Moral values are nanoideas.

There is nothing wrong with having big ideas. But sometimes the best way to materialize them is by way of nanoideas.

Related

Symbols are useless unless consulted.

Consulting consumes energy.

Everyone has finite energy.

Consulting can be sequential or—via computation—simultaneous.

I call symbols with no legal restrictions the Unchained.

The Unchained is too large to be consulted sequentially.

The Unchained can be consulted computationally and so simultaneously.

I call symbols with legal restrictions the ©hained.

The ©hained is too large to be consulted sequentially.

The ©hained may not be consulted computationally and thus cannot be consulted simultaneously.

Because the ©hained may not be consulted computationally, consulting from the ©hained and consulting from the Unchained are exclusive operations.

Sequentially consulting the ©hained reduces energy available for simultaneously consulting the Unchained.

Thus, the ©hainer's implicit argument is that sequentially consulting their ©hained symbols is worth more than simultaneously consulting the Unchained.

A ©hainer mathematician claims sequentially consulting their symbols is worth more than simultaneously consulting Euclid, Gauss, and Euler.

A ©hainer physicist claims sequentially consulting their symbols is worth more than simultaneously consulting Newton, Maxwell, and Einstein.

A ©hainer musician claims sequentially consulting their symbols is worth more than simultaneously consulting Bach, Beethoven, and Mozart.

A ©hainer biologist claims sequentially consulting their symbols is worth more than simultaneously consulting Leeuwenhoek, Pasteur, and Darwin.

A ©hainer artist claims sequentially consulting their symbols is worth more than simultaneously consulting da Vinci, Rembrandt, and van Gogh.

A ©hainer author claims sequentially consulting their symbols is worth more than simultaneously consulting Homer, Shakespeare, and Dostoevsky.

©hainers claim their symbols ought be treated with more consideration than the symbols of these greats.

I hope you see why this is such a ridiculously dishonest position, and understand my visceral disgust for this behavior.

© is the mark of the ©hainer. It is the mark of the dishonest man.

I salute and lend my hands to the honest men who contribute symbols to the great Unchained. They have an honest estimation of their humble contributions in relation to the whole. It is on their shoulders that the future is built.

Nobody talks about how Bill Gates actually made his billions.

Nobody talks about how Larry Ellison actually made his billions.

Nobody talks about how Larry and Sergey actually made their billions.

Nobody talks about how Jeff Bezos actually made his billions.

There's a Secret.

A Secret connecting the majority of the richest people in the world (and it's not value creation).

The reason nobody talks about the Secret is because the people who effectively control what we talk about make their millions in the same way. Talking about the Secret is a surefire way to get kicked out of the club.

Technology is abolutely incredible. Not just because of what it can do, but because of how hard it was to create. How much blood, sweat, and tears from tens of millions of people over thousands of years went into learning the patterns of our universe and helping evolve technologies to manipulate it.

It is such a shame that this industry is controlled by people who use the Secret to extract rent and control the population. Who try and pretend their extraordinary wealth comes from value creation, and not the legal chains of the Secret. Who disrespect the great work of the countless brave and selfless souls who gave their time to build a better world for their descendants, only to see things locked up by these "tech titans" and their cronies in Congress and the media. Who will lead our country to ruin to protect their Secret and their wealth.

I respect the work ethic and intelligence and leadership skills of the people above. But I don't respect their integrity.

It makes me sad that so many young people get caught up chasing the same scale of fortunes as the people above, not realizing that "dishonesty" and the Secret is the crucial ingredient to their extraordinary "success".

It makes me sad that so few can see the Secret.

It's hidden by its ubiquity.

We live above 7 microverses that we cannot see no matter how hard we squint.

Each microverse is ten times larger than the one above.

Each is its own land with unique creatures, phenomena and rules.

We rely on scopes, and experiments, and symbols to map the territory.

How many concepts to describe each world? 1,000? 10,000? More?

I gave these microverses names: Hairfield, Bloodland, Mitotown, Viralworld, Proteinplace, Moleculeville, Atomboro.

And that just gets us to the atomic level. But there are many more lands beyond that we cannot see. It may be closer to the beginning than the end.

| name | microverse | diameter | source | visibleSince |

|---|---|---|---|---|

| Hydrogen Atom | Atomboro | 1 | https://en.wikipedia.org/wiki/Bohr_radius | 1970 |

| Glucose | Moleculeville | 15 | https://bionumbers.hms.harvard.edu/bionumber.aspx?&id=106979 | 1961 |

| Hemoglobin | Proteinplace | 85 | https://bionumbers.hms.harvard.edu/bionumber.aspx?id=105116&ver=4&trm=hemoglobin&org= | 1840 |

| SARS-CoV-2 | Viralworld | 1000 | https://pmc.ncbi.nlm.nih.gov/articles/PMC7224694/ | 1931 |

| Mitochondria | Mitotown | 10000 | https://www.ncbi.nlm.nih.gov/books/NBK26894/ | 1857 |

| Red Blood Cell | Bloodland | 80000 | https://pmc.ncbi.nlm.nih.gov/articles/PMC2998922/ | 1678 |

| Human ovum | Hairfield | 1200000 | https://en.wikipedia.org/wiki/Egg_cell | 1677 |

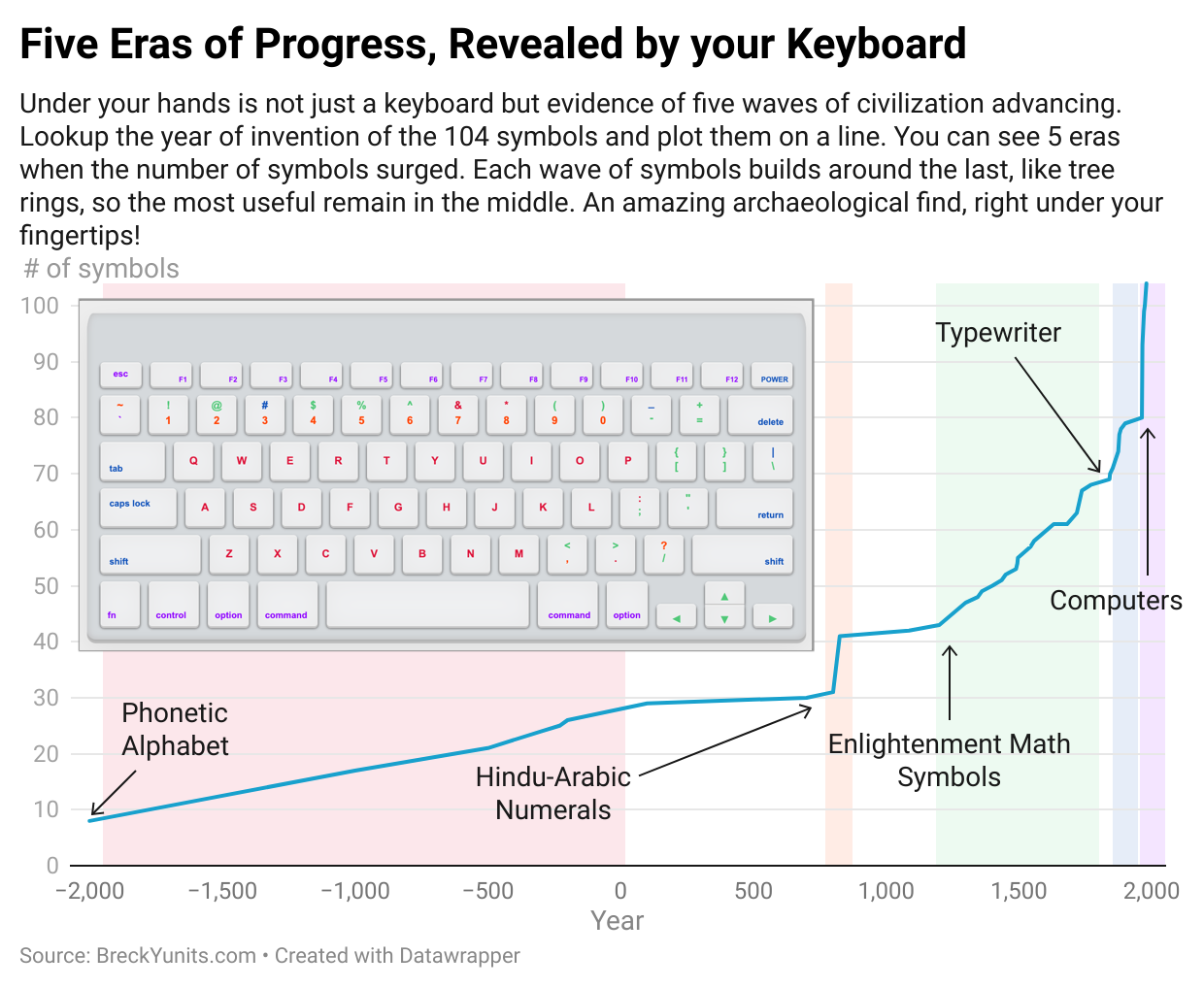

Lately I've been thinking about topological sorting.

Topological sorting is sorting concepts in dependency order.

For example, if you wanted to sort "fire" and "internal combustion engine", fire would come first. To explain ICEs, you need fire, but to explain fire you don't need ICEs.

Sorting concepts topologically versus chronologically can create different rankings.

Sorting numbers topologically puts binary (0 and 1) before the Hindu-Arabic numerals (0 - 9), even though humans used 0-9 way before using 0 and 1.

Our topological knowledge base often has missing or incorrect concepts that may not be fixed for centuries.

Encyclopedias are sorted alphabetically.

I am unaware of an encyclopedia sorted topologically.

Why would you want an encyclopedia sorted topologically?

Well, topological sorting tells you the logical importance of things. Things further down are built on things at the top (or vice versa, if you prepend new things to your files rather than append).

A topologically sorted encyclopedia would also be hard to vary. Computational logic tests would trigger if someone tried to add non-sense to a low level piece. You could tell how important something is by how hard it would be to vary-how much load do these symbols support?

When revolutionary new low-level scientific insights are discovered that refine our models, you could actually numerically see and measure the downstream impact of those breakthroughs.

If you want to get the most bang for your bits, sort your ideas topologically.

What parts of the encyclopedia should you learn first?

It makes more sense to learn the things with a high topological ranking, rather than a high alphabetical ranking. A lot of things turn out to be fads.

Popularity sorting such as by number of inbound links is an improvement over alphabetical sorting, but seems very susceptible to bias and fads.

How would you create a topologically sorted encyclopedia?

I have been attempting to build a topologically sorted encyclopedia for a long time, though I had never described it like that. It's only recently when I realized I wanted to change Scroll to be topologically-sorted by default that I went looking for the definitive term to describe the concept.

I think using parsers all the way down might work, though I could be wrong. The nice thing about this strategy is that you can build stuff that is useful along the way even if the vision of the topological encyclopedia doesn't materialize.

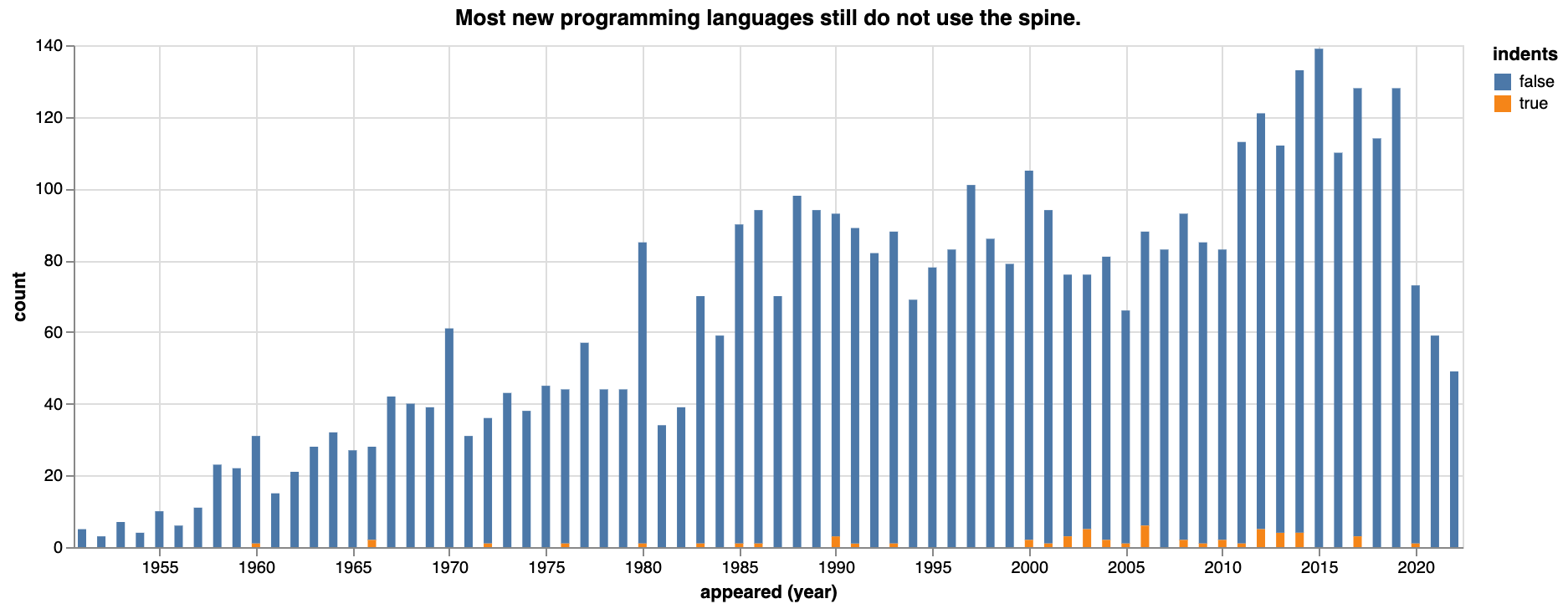

Today I started looking at older programming languages that have lasted like C, Fortran, Ada, et cetera, and realized that topological sorting used to be the default. Newer languages aren't as strict, and that's the pattern I copied. But I wonder whether it's a better design to make the rule topologically sorted, and the looser version the exception.

When dealing with larger programs it seems you can do things a lot faster if you know things are sorted topologically.

Is the universe topologically sorted?

It seems to be. The present depends upon the past, and so comes after the past.

Which came first, the chicken or the egg?

If we are talking about the words, that might be easy to determine with a good etymology reference.

If we are talking about the patterns represented by the words, then it's a bit more interesting. We know the bacteria came before either. But my guess is we had objects closer in appearance to chicken eggs before we had objects resembling chickens. So I would say the egg came first, topologically.

A scale is an ordering of numbers. Objects map to a scale to allow comparibility in that dimension.

The word scale is an overloaded term. Usually when I use the word "scale" I am using a different version of it, such as "scale it up" or "economies of scale". In this post I'm using it in the sense of a measurement or yardstick or number-line or type.

English is generally unscaled. A small subset of it is scaled.

So blog posts are mostly "unscaled". It is hard to compare this line with the line below it.

But this post does contain some lines that are scaled. For example, it has a date line, which maps this post to a date scale. So you can compare this post to others, and say which came before, and how much they came before.

Scroll, the language and software that powers this blog, does compute some scaled metrics on each post. The number of words, for example. You can see the number of words for this post and all others on the search page.

I like the definition of scales in the d3 data visualization library:

Scales are a convenient abstraction for a fundamental task in visualization: mapping a dimension of abstract data to a visual representation. Although most often used for position-encoding quantitative data, such as mapping a measurement in meters to a position in pixels for dots in a scatterplot, scales can represent virtually any visual encoding, such as diverging colors, stroke widths, or symbol size. Scales can also be used with virtually any type of data, such as named categorical data or discrete data that requires sensible breaks.

I remember when I was struggling to use d3 and then finally their definition of scales clicked in my head and I realized what a simple, beautiful and widely applicable concept it was.

Scales make things comparable. Measure different concepts using the same scale and now you can compare those things symbolically.

The more scales you use, the more sophisticated your symbolic models become. You can measure two buildings with a height scale to create some comparisons, but you can greatly increase those comparisons if you also measure them with a "year built" scale.

One of the most important scales is the computational complexity scale.

Nature loves inequality, the size scale of our universe is vast with ~65 orders of magnitude buckets, and so rarely do 2 random things fall in the same bucket.

A dimension is just a set of different measurements with the same scale.

You can think of any scale as just a line.

Measure objects and draw a point on the line for where each measurement falls.

You can draw a high dimensional dataset as just a lot of independent lines. Not the most useful visualization, but can be helpful sometimes to break things down into really simply pieces.

Wikipedia does not make heavy use of scales. It relies more on unscaled narratives. I often wonder if the focus was more on adding scaled data-data in typed dimensions-if it would allow it to become a more truthful symbolic model of the world.

To be fair, the infoboxes on Wikipedia are scaled data. The syntax is nasty, but the scaled data is wonderfully useful.

The more scales you have, the more trustworthy a model is.

I often think about complexity scales. I proposed if you think in parsers you can measure the complexity of any idea. Perhaps the "parser" is a good unit for a complexity scale. If two models of the world are equally intelligent, pick the less complex one - the one with fewer parsers.

I don't have anything too profound to say about scales. (On the impact scale, this post ranks low.)

I just want to make sure I am deliberately thinking enough about them. If you measure concepts on an importance scale, they are high on the list.

Related Posts

- The Infosphere (2025)

- Experiments (2025)

- Pretext (2025)

- What Science May Be (2025)

- Topological Sorting (2025)

- Thinking in Parsers (2025)

- ICS: A Measure of Intelligence (2024)

- ScrollSets: A New Way to Store Knowledge (2024)

- Marks (2024)

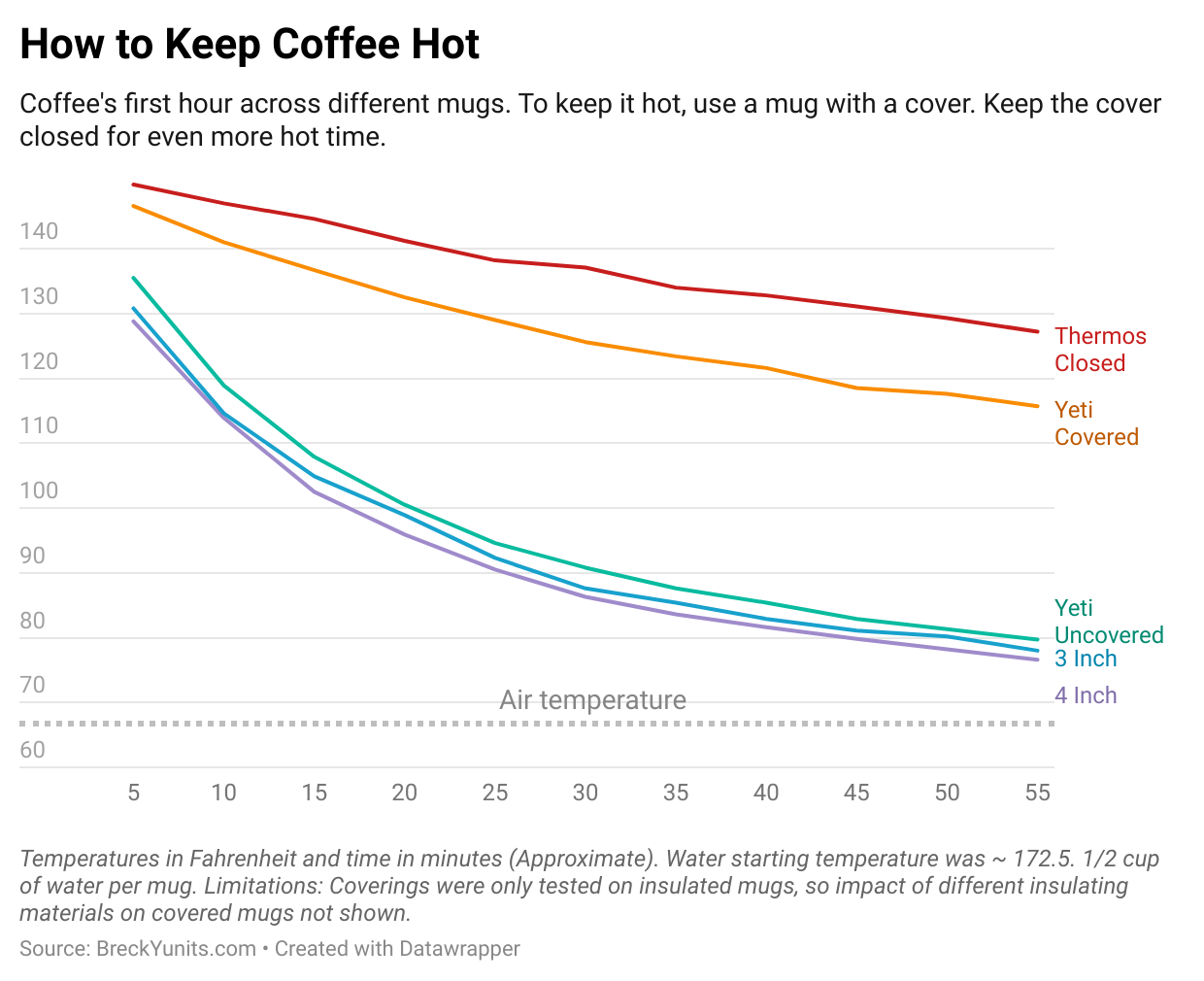

- Hot Coffee (2024)

- The Box Method of Science (2024)

- I thought we could build AI experts by hand (2023)

- Type the World (2020)

- PC: Particle Complexity (2017)

Do you want to learn a new way to think? And a new way to write? Do you want to learn how to look at everything from a new perspective?

If so, read on.

I will try to explain to you how to "think in Parsers". I'll also then go into "writing with Parsers", a computer language we've made. It might not click immediately, but when it finally does I think you'll find it wonderfully useful.

No matter what topic you want to learn, from music to math, chemistry to carpentry, mechanics to quantum mechanics, thinking in parsers gives you another path to understanding any domain.

Parsers match patterns

Look for a tree. (If you're inside, you may need to go to a window)

Did you see one? Great! That means you have a "TreeParser" in your brain that is capable of recognizing trees.

Now, look for a rock. See one? Great! You brain also has a "RockParser".

Parsers are things that match certain patterns and are inert to others.

Your TreeParser fired when you saw a tree, but not when you saw a rock, and vice versa.

Parsers are composable

The tree you looked at may have also activated another Parser specific to that species. I am writing this in Massachusetts and so for me that was my PineTreeParser. Usually I am in Hawai'i and my PalmTreeParser activates. For you it might be another kind. The point is parsers can be derivatives of other Parsers.

But that's not the only way Parsers can compose.

For example, you can also have Parsers that parse parts of a pattern. Think of a BranchParser or a LeafParser.

Parsers can combine in many ways.

Parsers are contextual

Imagine taking a leaf from the tree and looking at it under a microscope. If you remember your biology class, your "CellParser" may activate. But if you were given a blurry image and not told its source, your "TurtleShellParser" may activate instead.

Because you have context you know which Parser is the one to use.

Parsers hold logic

Imagine pulling the tree you saw out of the ground and into the sky. Now imagine throwing the rock into the sky.

I bet when you imagined lifting the tree, you imagined pulling up roots, but you didn't imagine roots attached to the rock.

You can attach knowledge to parsers that can be used in combination with the pattern that activated the parser.

Patterns Exist Without Parsers

The patterns that trees or rocks or turtles emit exist whether or not we have a parser that recognizes them. Patterns exist without parsers.

A baby starts with very few parsers and things for them are largely a blur until they have developed the parsers to make sense of their sensory data.

Often, especially when growing up, we see and record raw patterns that we don't fully understand. Eventually we stumble upon parsers that match those patterns and give us deeper understanding of what we witnessed earlier.

Sometimes we only notice a pattern after we've learned a parser for it. For example, if you've ever gotten a new car, you've probably experienced the phenomena of suddenly noticing that model of car all around you. This is because you have acquired more parsers for that pattern that are now getting activated when you encounter it.

And often the parsers we have don't actually best fit the universe's patterns and we update them later. The pattern of the earth revolving around the sun has existed always, for example, even though humans previously had parsers that parsed it in a different way.

Recap

Let's recap so far. Parsers:

- Match patterns

- Are composable

- Are context-sensitive

- Hold logic

Parsers in the symbolic world

So far we've been talking about Parsers for objects in the real world that are stored in the neural networks of your brain. These Parsers are neat, and you don't even have to be human to have them (dogs, for example have parsers to recognize their master) but Parsers work the same way in the symbolic world.

For example, you have an oParser that:

- recognizes the letter "o"

- also activates your LetterParser

- is context sensitive (so you can recognize the letter "o" versus the number "0")

- and holds logic related to "o", such as it's sound.

And then you can have a WordParser that recognizes words, such as "color".

You can then have a PropertyParser that recognizes a pair of words like "color blue".

Parsers never stop composing like this. If you were to look at the source code of this post you would even see that this sentence is parsed by a ParagraphParser.

It's Parsers all the way down! And all the way up!

Membranes

One thing you'll notice about parsing objects is that all Parsers assume membranes. Whether it's the edges of the tree or the space around words, there are lines, either visible or invisible, that separate what is being parsed versus not parsed.

The Parsers Programming Language

Now let's combine the sections above by making a language of symbolic parsers about things we encounter in the physical world.

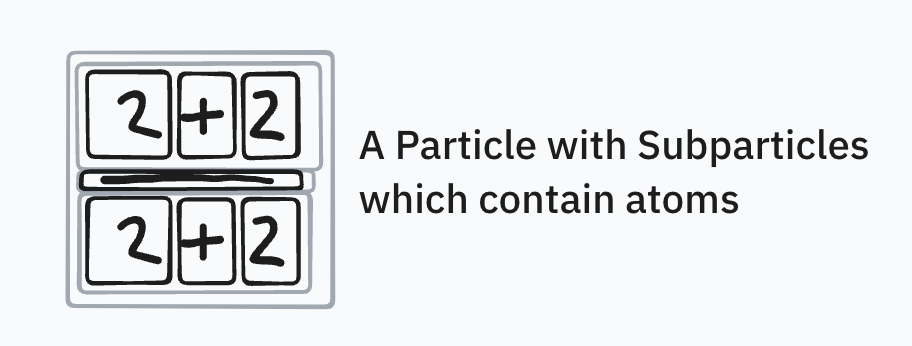

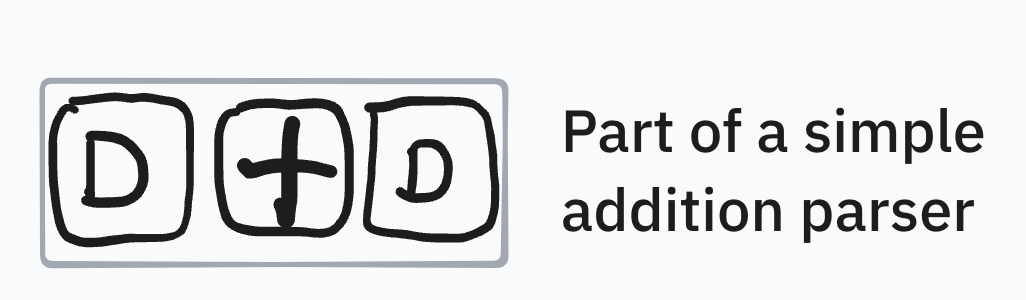

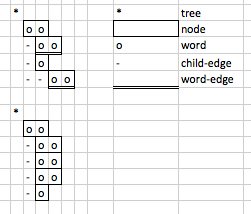

First, we need to set some rules as to the membranes of our language - where do we draw the lines around the atomic units that we will parse? For this, we'll use Particle Syntax (aka Particles). Particles splits our writings into atoms (words), particles (lines), and subparticles (indented lines that belong to the "scope" of the parent line). The nice thing about Particles is that there is no visible syntax at all, and yet it gives us the building blocks to represent any kind of structure.

Now, imagine we wrote the lines below on a walk in the woods.

pineTree

height 20ft

circumference .5ft

pineTree

height 10ft

circumference .3ft

oakTree

height 30ft

circumference .8ft

Right now all we have is some patterns, but no parsers.

Let's write some parsers to parse the lines above:

abstractTreeParser

pattern *Tree

heightParser

atoms lengthAtom

circumferenceParser

atoms lengthAtom

calculateVolume

return height * surfaceArea

pineTree extends abstractTreeParser

oakTree extends abstractTreeParser

Now we have five parsers with which to understand our original program.

Of course, the parsers themselves also make use of various parsers (such as "pattern" and "atoms"), which I've left out, so in reality there would be quite a bit more.

I've left out some details to focus on the core ideas of how you can define Parsers symbolically that match patterns, are composable, context-sensitive, and hold logic.

You may notice that the Parsers language is not very much concerned with computers and fitting the world to match the structures in a computer. Although the parsers can carry logic to execute on computers, Parsers is really focused more on organizing knowledge into parsers. Parsers is a knowledge storage language first--a way to think first--and a computational language second. Parsers does not say "here is a set of structures that work well on the computer, fit your knowledge of the world into them." Instead the approach of Parsers is to "mine the minimal, ideal set of symbols to represent the patterns you see, and then we'll have the computer adjust to those".

Now, if you want to dive deeper into Parsers, an ever evolving language, the Scroll website is a good place to visit next.

But now let's keep going and connect the dots.

How to Understand Anything

Now I'm going to get to the fun claim of this essay: every subject can be represented as a list of simple parsers in the Parsers language.

Parsers are the building blocks of knowledge, much like atoms are the building blocks of molecules.

It doesn't matter how "complex" the subject is, everything can be broken down (and built up) by simple parsers.

Now, don't get me wrong, I'm not saying quantum mechanics is the same thing as arithmetic. What I'm saying is that quantum mechanics is merely a larger list of parsers. Each parser in quantum mechanics is not more complex than the parsers in arithmetic, it's just that there are more of them.

If you are struggling to master a new subject, you can just start writing out all the parsers in the subject in a flat list. You can then work on your understanding of each one, refining your parser definition as you go. You'll find that before you can understand some parsers, you'll have to understand some other parsers it uses.

You can also organize parsers by which ones are used the most. (Often you'll find many parsers that are taught in books are not very important, and you'll start to pick up on the core set that are heavily used and should be mastered).

Eventually you'll have a long list of parsers that together describe a subject in a very accurate, precise, and computable way. It's like an encyclopedia but better, because the parsers are linked in ways that can be logically computed over.

Wrapping Up

This was a brief introduction to my primary mode of thinking: "thinking in parsers". I've personally found this a universally useful way to think about the world. The great thing about it is it works for both understanding objects in the 4D world and also for objects in the symbolic world (including 2D math).

When I was younger I was overwhelmed by the amount of symbolic knowledge we have in our world. Now that I'm able to think in parsers, I find it all far less intimidating, because I know it's just a big list of parsers, and I can take it one parser at a time.

I hope you find thinking in parsers as useful as I do.

FAQ

Are Parsers just Object Oriented Programming?

From an OO perspective, think of Parsers as ClassDefinitions initialized with particles that always have methods for parsing subparticles. Like OO, parsed particles can have methods and state and communicate via message passing. But with Parsers the focus is on parsing and patterns.

Are Parsers just functions?

From a functional programming perspective, you can think of Parsers as a function that takes pattern matching and other logic and returns functions that can parse patterns.

Are Parsers just Lisp?

From a Lisp perspective, you can think of Parsers as like Racket with its "Language Oriented Programming", but with Particle syntax which compose better than S-Expressions.

Are Parsers just XML?

From an XML perspective, think of Parsers as XML with a much slimmer syntax and a built-in language for defining schemas.

Does the rigidness of Parsers and the whitespace syntax in Particles dampen creativity?

No. First, you can have Parsers like a PoemParser that are very loose in what they accept. Second, no matter what, symbols are a poor approximation to patterns in the physical world, and so it's better to optimize for efficiency in symbolic patterns over looseness.

The below is a chapter from a short book I am working on. Feedback appreciated

Particle Syntax (Particles) is a minimal syntax for parsing documents.

What is a syntax? Think of it like a set of rules that tell you how to split a document into components and what to label those components. Particles fits in the category of syntaxes that includes XML, S-Expressions, JSON, TOML or YAML.

Particles is unique in that it is the most minimal of these.

How minimal is Particles? Very! The file below uses everything in the syntax:

post

title Particles

Particles divides a document into 3 kinds of things:

- Atoms

- Particles

- Subparticles

Atoms are just words. A word is just a sequence of visible characters (no spaces in words). The document above has 3 atoms: "post", "title", and "Particles". In Particles, atoms are separated by a single space. Particles has no understanding of what these words mean. Particles does not have the concept of number, for example. Every visible character in Particles is just part of an atom and that's it. If you have the line a b, that would be be split into 2 atoms, "a" and "b". If you were to add an extra space a b, that would now be 3 atoms, "a", "", and "b". So an atom can be of length 0.

The particles in Particles contain 2 things: a list of atoms, and optionally, a list of subparticles.

Subparticles are just particles that belong to a parent particle.

In the example above, the particle starting with "post" is actually a subparticle of the root particle, which is the document itself.

The particle starting with "title" is a subparticle of the particle starting with "post".

The particle starting with "title" has 2 atoms, "title" and "Particles", and zero subparticles.

The particle starting with "post" has 1 atom, "post", and 1 subparticle.

You make one particle a subparticle of another by indenting it one more space than it's parent particle. If we deleted the space before "title", then the line beginning with "title" would become the second subparticle of the root particle, and the line beginning with "post" would have zero subparticles. If we added another space before "title", that line would still be a subparticle of the "post" particle, and all that would change would be that we added a blank atom at the beginning of that particle's atoms list, and it would now have three atoms.

Exercises

Try to solve the exercises below. The answers are in the source code to this page.

if true

print Hello world

In the code above, how many atoms are there?

How many particles?

How many subparticles?

How many extra spaces does it take to make a particle a subparticle of the particle above it?

a

b

c

How many particles in the example above, excluding the root particle?

How many atoms does a blank line have?

Now you should understand the basics of what Particle Syntax is. In the next chapter we'll look at why Particles is so useful.

The market is a blob of decision making agents that speaks with money.

You speak to the market with an offer.

The market gives you money, which means "Yes".

Or does not give you money, which means "No".

The no may mean the market:

- does not understand your offering

- does not think you can deliver on your offering

- does not want your offering

- does not like the price of your offering

The relationship between a human and the market is like that between a dog and the farmer. The dog can roam a bit but ultimately the farmer holds the power.

Markets are an intelligence

Markets can be a bit scary sometimes, just as a hive of bees can be a bit scary. Markets vibrate with energy and it can take some getting used to to have that energy focused on you when you first put your offerings out there. You have to have thick skin - there are a number of market participants who will sting you, one way or the other (usually nothing personal, just people having a laugh or a bad day).

Markets are intelligent beings. They sniff out good deals. Direct energy to promising directions.

A good quote I saw recently, that was about investing in markets but I think applies to selling to markets as well: "1. Markets work. 2. Costs matter. 3. Diversification is your friend". In other words - markets will reward you if you make something of value, but it may take time (so keep costs low), and don't put all your eggs in one basket.

Muting the Market

As a builder, should you ever mute the market?

To mute the market is to ignore the "Nos" and continue to invest in your offering. Instead of pivoting to something the market will say "Yes" to today, you work on something that the market currently says "No" to, but you have the belief that at some point the market will change.

To mute the market is to understand something about the world the market currently doesn't get, and to have the conviction that it eventually will.