A few words about juggling

When I was a kid I learned to juggle.

Barely.

I could handle 3 balls for about 30 seconds.

I bluster that if I practiced, I could handle 5 and go for minutes.

This Reddit thread estimates a week of practice to juggle 3, a month to juggle 4, and a year to juggle 5.

Wikipedia has a whole page on Juggling world records. No one can continuously juggle more than 7 balls. The time record for 7 is 16 minutes. Someone juggled 6 for 30 minutes. The record for 5 is 3 hours and 44 minutes. For 3 it's 13 hours.

The takeaway is this: some humans can juggle more than others but even the greatest max out at 7.

Continue reading...

Every time I see a school of fish, I'm bewildered by the conformity. Every fish looks and swims the same.

Don't fish have a desire to be individuals? Where are all the contrarians?

Today I coded a little simoji simulation and surprised myself: even well-meaning contrarians can cause a lot of damage!

Continue reading...

A language where all concepts are defined out of spheres with radii that accurately contain instances of the concept. In the simple prototype above, spheres are hyperlinked and clicking on one loads and zooms in on its contents.

I just got back from the future where they had the most incredible device.

Continue reading...

I just watched a great presentation from Jon Barron (of NeRF fame).

Deep neural networks can now generate video nearly indistinguishable from "real" world video and/or video generated from 3D engines.

Does this provide evidence that the 3D/4D world doesn't actually exist?

Continue reading...

People were surprised that AI has turned out to make information workers obsolete before laborers, but I'm not.

Information is the easiest job.

Continue reading...

Deceptive intelligence is when an agent emits false signals for its own interests, contrary to the interests of its readers. Genuine intelligence is when an agent always emits honest symbols to the best of its ability, grounded in natural experiment, without any bias against its users.

Continue reading...

- Human population has grown exponentially.

- Words are 2D signals that can convey information about the 4D world.

Americans are guinea pigs in a huge experiment: we are exposed to more ads than any group in history.

It's not going well.

I say it's time for a new experiment: let's get rid of ads.

Here's how.

Continue reading...

With Scroll I am trying to make a product I love and a product other people love so much it spreads via word-of-mouth.

Word-of-mouth spread! The holy grail of startups.

It has been harder than I expected.

Continue reading...

This post is written in Scroll, a 2D language.

I have a new language, a 4D one, that I've been working on for over a year now. I call it Ford (get it?).

I don't have much to share yet but I wanted to write about it anyway. Maybe someone is working on a similar thing and would like to collaborate.

Continue reading...

Symbols can live more than fifty times longer than humans. They require almost no energy to persist, just the occasional refresh every fifty years or so to not fade. How do we talk about this space where symbols are popping up, fading or being replicated, on surfaces and screens? I suggest a new word: Infosphere.

Continue reading...

I believe learning through motion (through conducting physical experiments in the real world) is vastly superior to learning through reading.

But the number of experiments one can do is vast, with some being far more informative than others, and time and resources are limited. Reading can point one to the motions (the experiments) that are most informative.

Thus, the most valuable symbols are the ones that guide one to conduct the most useful experiments.

Lately I've been figuring out how to put all of science into one file. I think the file would largely be experiment after experiment after experiment. Each one would use close to the minimum number of symbols needed to communicate the easiest experiment that teaches the next most useful set of patterns about the world.

Continue reading...

Pretext is all the words, all the definitions, all the patterns not in the text but that the text depends on. The texts that the author possesses and the reader requires.

Continue reading...

The doer alone learneth. (Attributed to Nietzsche)

I wish I spent more time moving atoms and less time moving symbols.

I enjoy reading and writing, but I do more of it than I'd like.

I overinvest in symbols out of duty. A small group of us stumbled upon the truth that our society has gotten the law wrong on symbols, and as a result the infosphere has become heavily polluted.

In an ideal world, IP law is deleted, the toxins are filtered from our infosphere, symbols become far more signal than noise, and I can put more time into doing things that require more motion than the pressing of keys on the keyboard or dancing a pen across a page.

Continue reading...

When the facts change, I change my mind. What do you do? (Attributed to John Maynard Keynes, 1932)

A strong thinker can explain their position and imagine new facts that would flip it.

Facts don't always change. Nature grants us some stability in her laws.

But they can always change. Our models are always downstream of measurements.

Imagining new facts that could cause you to change your positions makes you stronger.

Not only does it make you less likely to invest in wrong positions, but it will teach you how to better understand (and potentially alter) the positions of others.

Continue reading...

In 1976, Bill Gates wrote an angry letter to computer users saying "most of you steal your software".

Continue reading...

What Science May Be

by Breck Yunits

We can embody all of science into a single fully connected text file.

Continue reading...

The smallest improvements, compounded, have the biggest impact.

In biology, if it weren't for a tiny dividing nucleic-cell pairing with a tiny dividing proto-mitochondrial bacterium 2 billion years ago, we would not be here.

In computing, it's been the nanoscopic improvements to the transistor which have caused the biggest changes to the world in the past 50 years.

Let's call these things nanoideas.

Continue reading...

Symbols are useless unless consulted.

Consulting consumes energy.

Everyone has finite energy.

Continue reading...

Nobody talks about how Bill Gates actually made his billions.

Nobody talks about how Larry Ellison actually made his billions.

Nobody talks about how Larry and Sergey actually made their billions.

Nobody talks about how Jeff Bezos actually made his billions.

There's a Secret.

A Secret connecting the majority of the richest people in the world (and it's not value creation).

The reason nobody talks about the Secret is that the people who effectively control what we talk about make their millions in the same way. Talking about the Secret is a surefire way to get kicked out of the club.

Continue reading...

We live above 7 microverses that we cannot see no matter how hard we squint.

Each microverse is ten times smaller than the one above it.

Each is its own land with unique creatures, phenomena and rules.

We rely on scopes, and experiments, and symbols to map the territory.

How many concepts to describe each world? 1,000? 10,000? More?

I gave these microverses names: Hairfield, Bloodland, Mitotown, Viralworld, Proteinplace, Moleculeville, Atomboro.

And that just gets us to the atomic level. But there are many more lands beyond that we cannot see. It may be closer to the beginning than the end.

Continue reading...

Lately I've been thinking about topological sorting.

Topological sorting is sorting concepts in dependency order.

For example, if you wanted to sort "fire" and "internal combustion engine", fire would come first. To explain ICEs, you need fire, but to explain fire you don't need ICEs.
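Here is a minimal sketch of that idea using Python's standard-library `graphlib` (Python 3.9+). The dependency map is a hypothetical encoding: each concept maps to the set of concepts needed to explain it. The sorter then emits every concept after everything it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: concept -> concepts needed to explain it.
dependencies = {
    "fire": set(),
    "internal combustion engine": {"fire"},
}


def dependency_order(deps: dict[str, set[str]]) -> list[str]:
    """Return concepts ordered so each one appears after all of its dependencies."""
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(deps).static_order())


print(dependency_order(dependencies))  # ['fire', 'internal combustion engine']
```

When no path connects two concepts, any relative order between them is valid. A larger concept map can therefore have many correct sortings.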

Continue reading...

A scale is an ordering of numbers. Objects map to a scale to allow comparability in that dimension.

The word scale is an overloaded term. Usually when I use the word "scale" I am using a different version of it, such as "scale it up" or "economies of scale". In this post I'm using it in the sense of a measurement or yardstick or number-line or type.

Continue reading...

Do you want to learn a new way to think? And a new way to write? Do you want to learn how to look at everything from a new perspective?

If so, read on.

I will try to explain to you how to "think in Parsers". I'll also then go into "writing with Parsers", a computer language we've made. It might not click immediately, but when it finally does I think you'll find it wonderfully useful.

No matter what topic you want to learn, from music to math, chemistry to carpentry, mechanics to quantum mechanics, thinking in parsers gives you another path to understanding any domain.

Continue reading...

The below is a chapter from a short book I am working on. Feedback appreciated.

Continue reading...

The market is a blob of decision-making agents that speaks with money.

You speak to the market with an offer.

The market gives you money, which means "Yes".

Or does not give you money, which means "No".

The no may mean the market:

- does not understand your offering

- does not think you can deliver on your offering

- does not want your offering

- does not like the price of your offering

The relationship between a human and the market is like that between a dog and the farmer. The dog can roam a bit but ultimately the farmer holds the power.

Continue reading...

Can you think about trust mathematically? Yes.

This makes it easier to design things to be more trustworthy.

Continue reading...

A nerveshaker is a trick performers can use to kickstart a performance. Once the generative networks in your brain are rolling they keep rolling but the inhibitory networks can keep them from starting. So if you have tricks that just divert energy away from the inhibitory networks and provide a jump to the generative networks, you can ensure your motor will start. For example, to kickstart an essay sometimes I will start by inventing a new term. Such as nerveshaker.

Continue reading...

Introduction

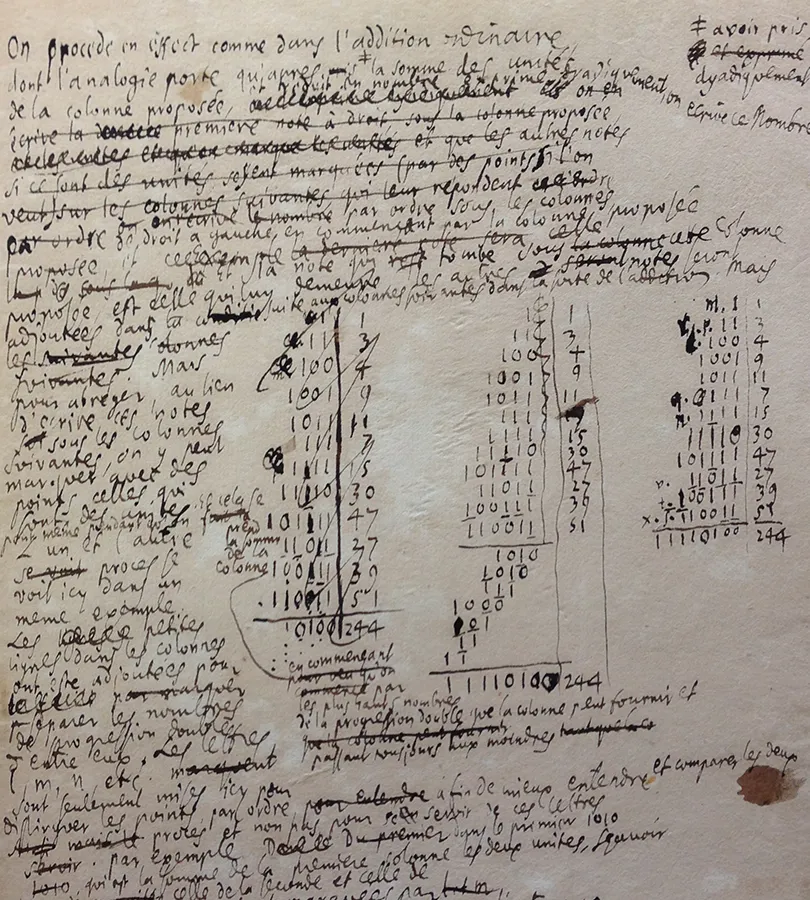

I sit with my coffee and wonder, can I explain the general pattern of Mathematics on a single page?

My gut says yes, my brain says maybe, and so I shall give it a go.

Mathematics, the written language

I aim to explain the essential concepts of the Mathematics humans write on rectangular surfaces. In other words, I'm limiting my scope to 2D written mathematics only. Even math implemented as computer programs (Mathematica, for example) is out of scope, as I've already examined those in earlier work.

My claims are limited to that scope, though you may find these concepts useful beyond this.

Cheating, a bit

I need to admit that although this essay shall not exceed a page (including illustrations), I am undercounting the length of my explanation of Mathematics because I am ignoring the considerable requirements the reader must have developed to be able to parse English.

Continue reading...

Here's to the man

Who works with his hands

And regardless of man's plans

Does the best work that he can.

He makes his cuts neat

He applies correct heat

He makes his joints meet

He makes things on beat.

His labor has cost

But the buyer is not boss

The system may add loss

But honest work is never lost.

Pay may be flawed

Cheating may be lawed

But his spirit ungnawed

He presents his work to God.

Continue reading...

To understand something is to be able to mentally visualize it in motion in your head. Let's call this a truthful model.

Continue reading...

"It's more comfortable than it looks."

"And this is just v1. By v10 it will be as lightweight as a surgical mask."

"And how long does the gluon encoding last?"

"Thousands of years. Once you've tagged an atom, it's yours for life."

Continue reading...

There is a job that is currently illegal in the United States, to the great detriment of our citizens. That is the job of "Information Cleaner."

An Information Cleaner is a person who takes in all the material being published in our information atmosphere and cleanses it: they make it transformable, searchable, modifiable, accessible, free of ads and trackers, auditable, connected to other information where relevant, and so on.

These people are not primarily focused on the production of new information, but rather on cleaning and enhancing the information that has already been produced.

This is a hard and extremely important job (think of it like backpropagation), and it's currently made illegal by copyright law. As a result, our information environment is as dirty and toxic as an aquarium with no filter.


If a pedestrian on the sidewalk is hit by a falling branch from a decaying tree on your property you are liable.

Continue reading...

If our government is going to make laws governing information, then we should optimize for truth and signal, over lies and noise.

The objective should not be maximizing the ability to make money off of information. Nature provides natural incentives for discovering new truths; we don't need any unnatural ones. In fact, the unnatural incentives on information production actually incentivize lying and noise, rather than truth generation.

I'm surprised this is such a minority opinion, but very few people are with me on this (to those who already are: ❤️).

Continue reading...

If you could have an AI running continuously on one prompt, what would it be?

Here is my best idea so far. What is yours? Ideas and criticisms encouraged!

Continue reading...

And our ancestors built a magnificent palace, over thousands of years, layer by layer, with space for all, and our brother added a coat of paint, and said "This is my property now."

Continue reading...

Why copyright and patent terms need to be 0

How long should copyright and patent terms be?

There is a correct answer, and that answer is zero.

Continue reading...

What does it mean to define a language?

It means to write down a set of restrictions.

Languages let you do things by defining what you can't do.

Languages narrow your choices. You can still take your reader to a land far far away; you just need to take them in practical steps.

Communication requires constraints.

Too few restrictions and someone can write anything, yet communicate nothing.

Continue reading...

Your body has approximately:

30,000,000,000,000 Cells

and

30,000,000,000,000,000 Mitochondria!!!
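Dividing the two figures gives a rough average number of mitochondria per cell. This is only an average over all cells. Real cells vary widely; mature red blood cells, for example, have none.

```python
cells = 30_000_000_000_000                # ~3 x 10^13 cells (figure from the post)
mitochondria = 30_000_000_000_000_000     # ~3 x 10^16 mitochondria (figure from the post)

# Average mitochondria per cell across the whole body.
per_cell = mitochondria // cells
print(per_cell)  # 1000
```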

We're building a new blockchain (L1).

This chain will leapfrog all others on trust and speed.

We will be misunderstood, ignored, and mocked.

And then, our math will win.

-Breck

Continue reading...

Alejandro writes of an exercise to describe something in two words.

To him Scroll is "semantic blogging".

His Scroll blog is "a database of [his] knowledge."

He then asks what two words I would use for Scroll.

Continue reading...

Every accomplished person I've studied has a quip about focus.

Ability to focus separates humans from animals.

Humans have dug 1,000x deeper than the deepest animal and flown 40,000x higher than the highest bird.

And among humans focus separates the lay from the legends.

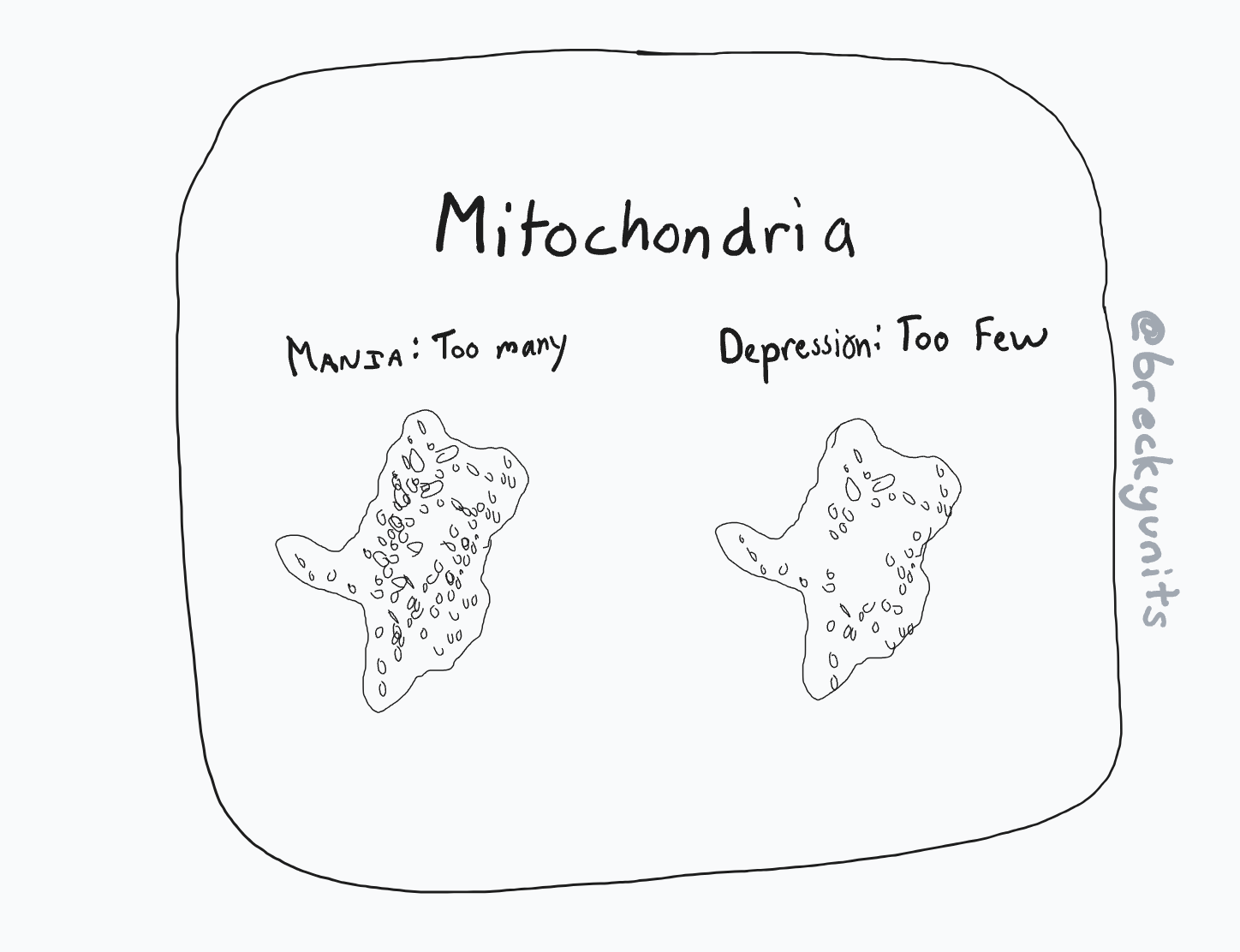

Continue reading...

A New Scientific Model of Mania and Depression, with Testable Predictions

by Breck Yunits

The model proposed here: mania is too much mitochondria; depression is too little. We predict it is possible to detect mood state from optical images of certain cells by measuring mitochondrial volume.

If we could see everything across space and time, we would not need to read and write.

Continue reading...

Everything I own is in the overhead bin.

This is cool if you are 20.

But I am 40. I have two kids.

At 40 this is a bit extreme.

Continue reading...

October 29, 2024 — The censors do not want you to read this post.

Continue reading...

October 27, 2024 — The censors do not want you to read this post.

Continue reading...

A collection of strong advice for those doing startups.

by Breck Yunits

Publish early and often: I don't know of a single polished world changing product that did not start as a shitty product launched, iterated, and relaunched to increasingly larger groups of people. Even the iPhone went through this process.

Make something you love: I don't know a single person who makes something they love and is not successful and happy. I know people that make something people want and make money but are unhappy.

Master your crafts: I don't know a single person who built anything worthwhile who didn't have at least ten years of practice mastering their crafts.

Befriend other pioneers: I don't know anyone who started a successful colony who didn't make friends and trade help with other colony starters along the way.

Take care of your health: I don't know of a single founder who achieved success and didn't eat well, sleep well, take a lot of walks, enjoy time with family and friends, and write a lot.

Become skilled in all ways of contending: I don't know of a single founder who built a great organization who didn't master many complementary skillsets (sales, marketing, cash flow, fundraising, recruiting, design, et cetera).

Continue reading...

Bit

Persistence

Direction

Boundaries

Cloning

Randomness

Assembly

Decay

I make many scratch folders and often hit this:

```
$ cd ~
$ ~ mkdir tmp
mkdir: tmp: File exists
$ ~ mkdir tmp2
mkdir: tmp2: File exists
$ ~ mkdir tmp22
mkdir: tmp22: File exists
```

Continue reading...

by Breck Yunits

All jobs done by large monolithic software programs can be done better by a collection of small microprograms (mostly 1 line long) working together.

Continue reading...

Is your liver on right now? You can find out with a drop of blood.

If your ketone levels are around 0.1 mmol/L, your liver is off.

If they are around 1.0 mmol/L, your liver is on.

It's easy to tell, once you've measured.
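As a sketch, the reading can be turned into a simple on/off check. This assumes the reading is in mmol/L, the unit most blood ketone meters report. The 0.5 mmol/L cutoff is a hypothetical threshold chosen for illustration; the post only gives ~0.1 (off) and ~1.0 (on) as reference points.

```python
def liver_state(ketones_mmol_per_l: float, threshold: float = 0.5) -> str:
    """Classify a blood ketone reading as liver 'off' or 'on'.

    Assumption: readings are in mmol/L, and the default 0.5 cutoff is
    illustrative only (between the post's ~0.1 "off" and ~1.0 "on").
    """
    if ketones_mmol_per_l < 0:
        raise ValueError("a ketone reading cannot be negative")
    return "on" if ketones_mmol_per_l >= threshold else "off"


print(liver_state(0.1))  # off
print(liver_state(1.0))  # on
```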

Continue reading...

Flaws in Heaven

A Redditor gets hit by a truck.

He goes to the afterlife.

Continue reading...

The Galton Board

Mark Hebner and his team have refined an 1889 invention from Francis Galton and made a version you can hold in your hand to conduct real world probability experiments 10,000 times faster than flipping a coin.
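For comparison, the experiment the board performs can be sketched in software. This is a plain simulation, not a model of Hebner's device. It assumes each peg deflects a ball left or right with equal probability, so a ball's final bin is its count of rightward bounces: a binomial draw. The row and ball counts are hypothetical.

```python
import random
from collections import Counter


def galton_board(balls: int, rows: int, seed: int = 0) -> Counter:
    """Simulate `balls` dropped through `rows` rows of pegs.

    Each peg sends a ball right with probability 0.5, so the final bin
    (0..rows) is the number of rightward bounces, a Binomial(rows, 0.5) draw.
    """
    rng = random.Random(seed)
    bins = Counter()
    for _ in range(balls):
        rights = sum(rng.random() < 0.5 for _ in range(rows))
        bins[rights] += 1
    return bins


if __name__ == "__main__":
    counts = galton_board(balls=3000, rows=12)
    # Text histogram: one '#' per 10 balls, bins from left (0) to right (12).
    for b in range(13):
        print(f"{b:2d} {'#' * (counts[b] // 10)}")
```

The printed histogram approximates the bell curve that the physical board shows.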

Continue reading...

September 6, 2024 — I emailed this letter to the public companies in the current YCombinator batch.

Continue reading...

Trust, and understand.

by Breck Yunits

Is there a better way to build a blockchain? Yes.

A Particle Chain is a single plain text document of particles encoded in Particle Syntax with new transactions at the top of the document and an ID generated from the hash of the previous transaction.
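A minimal sketch of the structure described above, under stated assumptions. Several details are hypothetical stand-ins, not the actual Particle Syntax or Particle Chain specification:

- Each transaction is a block whose fields are lines indented by one space.
- Blocks are separated by a blank line.
- SHA-256 is the hash function.
- The first transaction hashes the empty string.

```python
import hashlib


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def add_transaction(chain: str, fields: dict[str, str]) -> str:
    """Prepend a transaction whose id is the hash of the current top (newest) transaction."""
    blocks = chain.split("\n\n") if chain else []
    previous = blocks[0] if blocks else ""  # assumption: genesis hashes the empty string
    lines = ["transaction", f" id {sha256_hex(previous)}"]
    lines += [f" {key} {value}" for key, value in fields.items()]
    return "\n\n".join(["\n".join(lines)] + blocks)


def verify(chain: str) -> bool:
    """Check that each transaction's id matches the hash of the transaction just below it."""
    blocks = chain.split("\n\n") if chain else []
    for i, block in enumerate(blocks):
        below = blocks[i + 1] if i + 1 < len(blocks) else ""
        lines = block.splitlines()
        if len(lines) < 2 or lines[1] != f" id {sha256_hex(below)}":
            return False
    return True


chain = ""
chain = add_transaction(chain, {"from": "alice", "to": "bob", "amount": "5"})
chain = add_transaction(chain, {"from": "bob", "to": "carol", "amount": "2"})
print(chain)
print(verify(chain))  # True
```

Editing any older transaction changes its hash, so the id in the block above it no longer matches and `verify` returns `False`.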

Particle Chain is a syntax-free storage format for the base layer of a blockchain to increase trust among non-expert users without sacrificing one iota of capabilities. A Particle Chain can be grokked by >10x as many people, thus leading to an order of magnitude increase in trust and developers on a chain.

Continue reading...

A human builds an elaborate sandcastle, with their back to the ocean...

Blocking misleads

Blocking encourages the worst impulses of humans

August 28, 2024 — I have a backlog of interesting scientific work to do, but an important free speech matter has come to my attention. Warpcast is suddenly considering adding blocking.

Continue reading...

If someone tossed me a nickel for every rejection I've gotten I'd be dead, buried under nickels.

August 25, 2024 — Two weeks ago I applied for the South Park Commons Founder Fellowship.

I applied because I love being part of cohorts of builders building and because I repeatedly invest all my money into Scroll, my angel investments, and other people, and would love to have more money so I can build the World Wide Scroll faster.

I was saddened by South Park's rejection yesterday, but I'm excited to use this as a teachable moment for South Park Commons, because building new things is hard and making things better for the people who do is something I care deeply about.

Continue reading...

August 21, 2024 — In 2019 I led some research into building next-gen medical records based on some breakthroughs in computer language design.

The underlying technology to bring this to market is finally maturing, and I expect we will see a system like PAU appear soon.

Medical records will never be the same.

Exciting times!

Our slides from 2019 are as relevant as ever.

Naked bodies in Las Vegas

August 14, 2024 — I'm in Las Vegas for DEF CON and walking on the strip in 110-degree heat when a guy in dark clothes asks if I want to see some nude girls.

"Wait, how did you know I'm an amateur biologist?" I ask.

He furrows his brow and starts talking to a different group of guys.

Continue reading...

Update: I think this is one of my (hopefully few) posts that is wrong. It's probably always worth the time to "respectfully disagree" and avoid hyperbolic zingers. See updates later in the post.

Anyone not part asshole is full of shit

August 5, 2024 — Steve Jobs was famously part asshole but now the popular crowd says don't emulate that part.

Fuck them. This is the wrong take and there is no upside to staying wrong[1].

Continue reading...

Abraham Lincoln: "Discourage Litigation."

July 23, 2024 — Stephan Kinsella reposted a great 1850s quote from Abraham Lincoln on litigation.

Discourage litigation. Persuade your neighbors to compromise whenever you can. Point out to them how the nominal winner is often a real loser---in fees, expenses, and waste of time. As a peacemaker the lawyer has a superior opportunity of being a good man. There will still be business enough. Never stir up litigation. A worse man can scarcely be found than one who does this. - Abraham Lincoln (1850)

Continue reading...

Are some intellectual environments better than others? Yes.

ETA! states that E, the evolution time of ideas, is T, the time needed to test alterations of ideas, divided by A!, the factorial of the number of ideas A in the Assembly Pool: E = T / A!.

Longer evolution times mean worse ideas last longer before evolving into better ideas.
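The ETA! relationship can be checked numerically with a small sketch, E = T / A!. The test time and pool sizes below are hypothetical numbers chosen only to show how quickly a larger Assembly Pool shrinks E.

```python
from math import factorial


def evolution_time(test_time: float, pool_size: int) -> float:
    """ETA!: evolution time E = T / A!, where T is the time to test an
    alteration of ideas and A is the number of ideas in the Assembly Pool."""
    if pool_size < 0:
        raise ValueError("pool size must be non-negative")
    return test_time / factorial(pool_size)


# Hypothetical: a 10-day test cycle with 1, 3, and 5 ideas in the pool.
for a in (1, 3, 5):
    print(a, evolution_time(10.0, a))
```

Because A! grows faster than any exponential, adding a few ideas to the pool reduces E far more than shaving time off T does.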

Continue reading...

A New Business Model for Public Domain Software

July 13, 2024 — I am writing a book in a private git repo that you can buy lifetime access to for $50.

That repo is where the source code for the book lives before it gets published to the public domain.

The public gets a new carefully crafted book with source code, just delayed. If you pay, you get early access.

This business model I'm calling "Early Source".

Continue reading...

Silicon Valley Should Eliminate the 1 Year Cliff

July 4, 2024 — I lived in San Francisco and Seattle in the 2000's and 2010's, and if I told you the names of every startup I almost joined as employee <5, you'd probably think I was lying.

But I declined them all for the same reason: the 1 year vesting cliff.
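For readers unfamiliar with the term, here is a sketch of what a cliff does to vested equity. It assumes a common (but not universal) schedule: 48 months total, a 12-month cliff, and linear monthly vesting after that. The grant size is hypothetical.

```python
def vested_shares(total: int, months_employed: int,
                  vest_months: int = 48, cliff_months: int = 12) -> int:
    """Shares vested after `months_employed` under a linear schedule with a cliff.

    Assumption: nothing vests before the cliff; at the cliff, the shares for
    all elapsed months vest at once; afterwards vesting continues monthly.
    """
    if months_employed < cliff_months:
        return 0
    months = min(months_employed, vest_months)
    return total * months // vest_months


for m in (11, 12, 24, 48):
    print(m, vested_shares(48_000, m))
```

Under these assumptions, leaving at month 11 yields nothing. Passing `cliff_months=0` shows the no-cliff alternative, where 11 months yields 11,000 shares.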

Continue reading...

June 29, 2024 — A child draws.

You take his paper.

He screams.

His scream is just: you stole his property.

Continue reading...

Our beta from 2013.

June 25, 2024 — In 2013 my friends and I won the Liberty Hackathon in San Francisco.

Continue reading...

June 12, 2024 — After years of development, I'm looking for beta testers for The World Wide Scroll (WWS).

Continue reading...

Websites don't need web servers.

June 11, 2024 — You can now download this entire blog as a zip file for offline use.

The zip file includes the Scroll source code for every post; the generated HTML; all images; CSS; Javascript; even clientside search.

Instructions

- Download

- Unzip

- Open index.html

Can we quantify intelligence? Yes.

Program P is a bit vector that can make a bit vector (Predictions) that attempts to predict a bit vector of actual measurements (Nature).

Coverage(P) is the sum of the XNOR of the Predictions vector with the Nature vector.

Intelligence(P) is equal to Coverage(P) divided by Size(P).
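A minimal sketch of these definitions, with bit vectors as lists of 0/1 integers. Two simplifications are assumptions:

- Running P to produce its predictions is not modeled; the Predictions vector is passed in directly.
- Size(P) is taken to be P's length in bits.

The example vectors are hypothetical.

```python
def coverage(predictions: list[int], nature: list[int]) -> int:
    """Sum of XNOR(predictions, nature): the number of positions where the prediction matches."""
    if len(predictions) != len(nature):
        raise ValueError("predictions and nature must be the same length")
    return sum(1 - (p ^ n) for p, n in zip(predictions, nature))


def intelligence(program: list[int], predictions: list[int], nature: list[int]) -> float:
    """Intelligence(P) = Coverage(P) / Size(P), with Size(P) assumed to be len(program) in bits."""
    return coverage(predictions, nature) / len(program)


program = [1, 0, 1, 1]                    # hypothetical 4-bit program
predictions = [0, 1, 0, 1, 0, 1, 0, 1]    # what the program outputs
nature = [0, 1, 0, 1, 0, 1, 1, 1]         # actual measurements
print(intelligence(program, predictions, nature))  # 1.75
```

Here 7 of 8 bits match, so a 4-bit program scores 7 / 4 = 1.75. A smaller program with the same coverage would score higher.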

Continue reading...

by Breck Yunits

May 31, 2024 — Yesterday, on a plane, I found an equation I had sought for a decade.

Patch is a tiny Javascript class (1k compressed) with zero dependencies that makes pretty deep links easy.

Continue reading...

"I'll give you this library," the billionaire said, sweeping his arms up toward the majestic ceiling.

"Or...you can have this scroll," he said, pointing down at a stick of paper on a table, tied with a red ribbon.

Continue reading...

See where technology is going before your competitors

Above is a (blurred) screenshot of brecks.lab. For $499,999 a year, you get access to the private Git repo and issue boards.

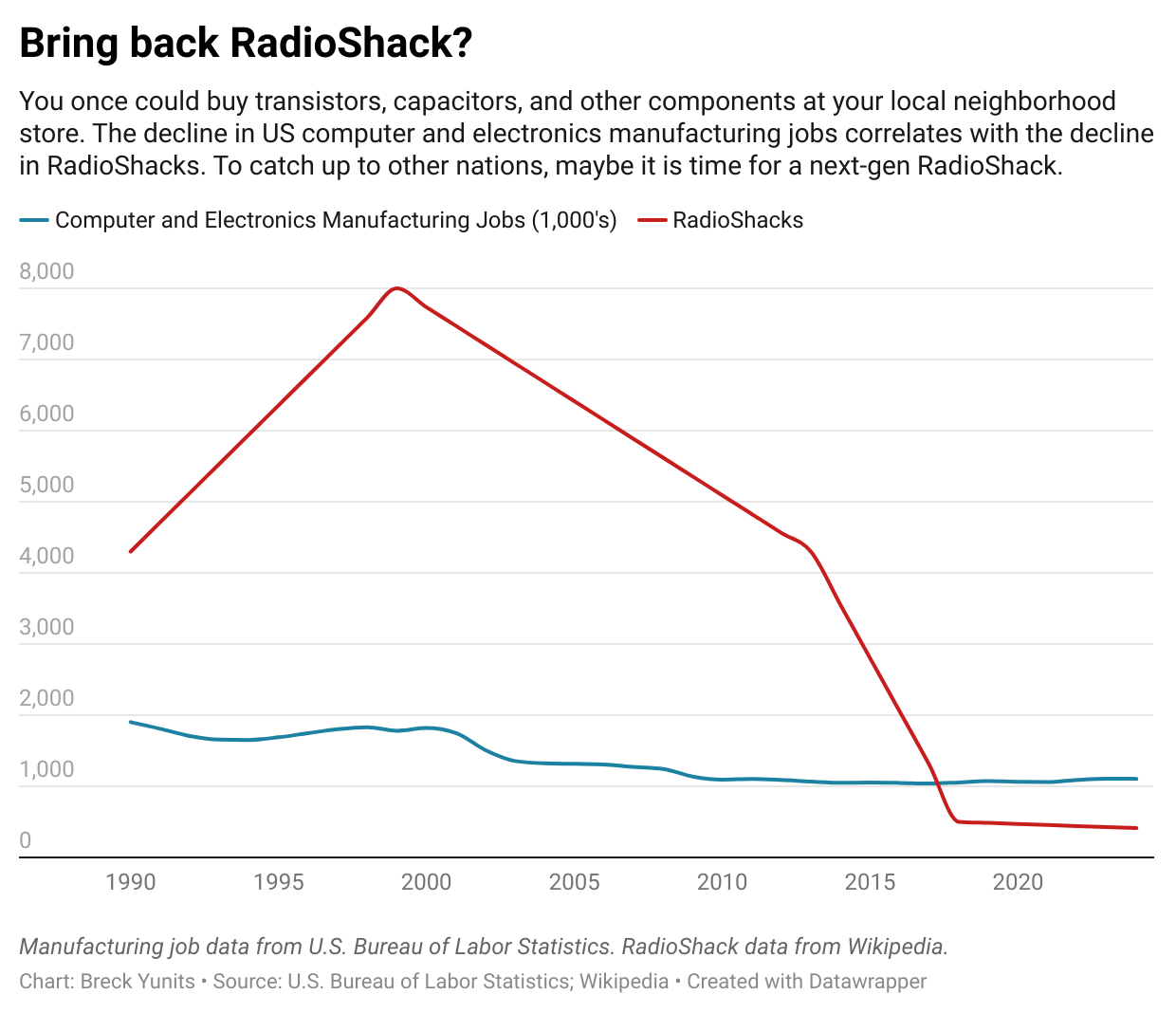

May 26, 2024 — You once could buy transistors, capacitors, and other components at your local neighborhood store. The decline in US computer and electronics manufacturing correlates with the decline in RadioShacks. To catch up to other nations, maybe it is time for a next-gen RadioShack.

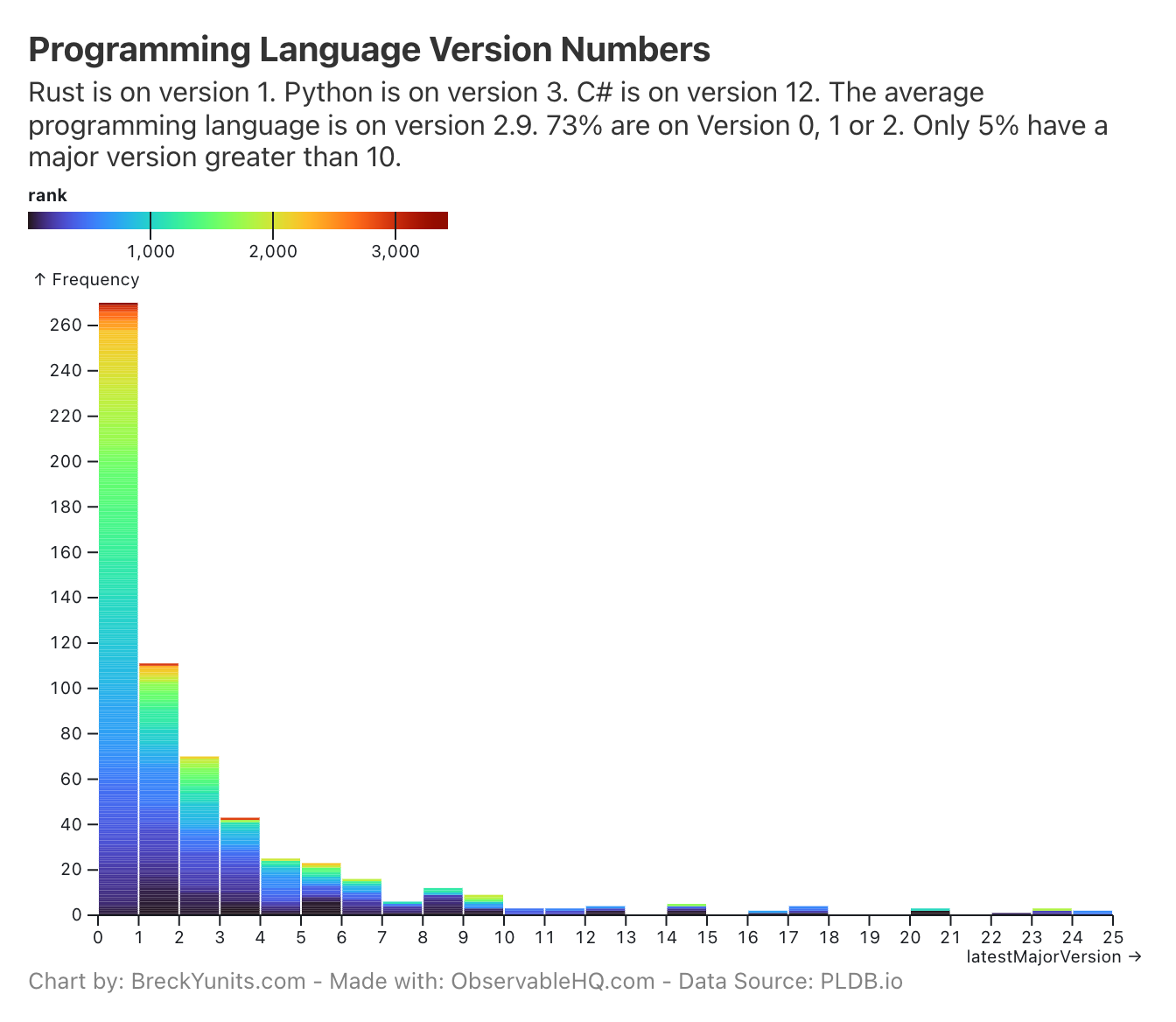

Analyzing the version numbers of 621 programming languages

May 25, 2024 — I just pushed version 93.0.0 of my language Scroll. Version 93!

Continue reading...

A Suggested Improvement to Progress Bars

May 24, 2024 — When I was a programmer at Microsoft I participated in a lot of internal betas.

So I saw a lot of animated "progress" bars in software that were actually hung.

I bet you could invent a better progress bar, I thought.

But life went on and I forgot.

Continue reading...

A famous celebrity passes away and wakes up on a beach.

"Welcome to the Afterplace," says a man in white.

He extends his hand and helps her to her feet.

"You must be hungry. Let me show you to the Omni Restaurant."

Continue reading...

by Breck Yunits

All tabular knowledge can be stored in a single long plain text file.

The only syntax characters needed are spaces and newlines.

This has many advantages over existing binary storage formats.

Using the method below, a very long scroll could be made containing all tabular scientific knowledge in a computable form.
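One way to meet the spaces-and-newlines constraint is sketched below. This encoding is a hypothetical illustration, not necessarily the method the post goes on to describe:

- An unindented line starts a record and holds its id.
- Each other cell is an indented `column value` line.

It assumes column names contain no spaces, ids are non-empty and do not start with a space, and no cell contains a newline.

```python
def encode(rows: list[dict[str, str]], id_column: str) -> str:
    """Encode rows using only spaces and newlines as syntax."""
    lines = []
    for row in rows:
        lines.append(row[id_column])                # unindented line: record id
        for column, value in row.items():
            if column != id_column:
                lines.append(f" {column} {value}")  # indented line: one cell
    return "\n".join(lines) + "\n"


def decode(text: str, id_column: str) -> list[dict[str, str]]:
    """Invert encode(): unindented lines start a record, indented lines add a cell."""
    rows = []
    for line in text.splitlines():
        if not line:
            continue
        if line.startswith(" "):
            column, _, value = line[1:].partition(" ")
            rows[-1][column] = value
        else:
            rows.append({id_column: line})
    return rows


rows = [
    {"element": "hydrogen", "symbol": "H", "atomicNumber": "1"},
    {"element": "helium", "symbol": "He", "atomicNumber": "2"},
]
text = encode(rows, "element")
print(text)
```

Records can be appended to the end of the file one after another, which is what lets a single long file hold an arbitrarily large table.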

Continue reading...

High Impact Thoughts

Leibniz thought of Binary Notation; Lovelace of Computers; Darwin of Evolution; Marconi of the Wireless Telegraph; Einstein of Relativity; Watson & Crick of the Double Helix; Tim Berners-Lee of the Web; Linus of Git.

Even more importantly to you and to me, at some point our mothers and fathers thought to have us.

And since we were born, many people throughout our lives have had thoughts that had high positive impact on us.

If you believe we live in a Power Law World, then it follows that there is nothing with higher expected value; nothing with more leverage; nothing with higher ROI; nothing with higher impact than High Impact Thoughts (HITs).

HITs dominate both our professional and personal lives. Let's take a closer look.

Continue reading...

May 15, 2024 — I typed tail -f pageViews.log into my console.

Then pressed Enter.

I stared at my screen as it streamed with endless lines of text.

Each line evidence of a visitor interacting with my new site.

It had been like this for days.

Holy shit, I thought.

This must be "Product Market Fit".

Continue reading...

AIs may train on everything. You may not.

May 14, 2024 — In America, AIs have more freedom to learn than humans. This worries me.

Do you want to learn at the same library as ChatGPT, Gemini, Grok, or Llama?

Then you must become a criminal.

You have no legal option[1].

Continue reading...

May 12, 2024 — The Four Seasons website says

Treat others as you wish to be treated

Sometimes Four Seasons sends me random emails.

When I reply with a random email of my own I get

DoNotReply@fourseasons.com does not receive emails.

Continue reading...

May 11, 2024 — That charts work at all is amazing.

Forty years.

One-billion heartbeats.

Four-quadrillion cells.

Eight-hundred-eighty-octillion ATP molecules.

Compressed to two marks on a surface.
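The first two numbers can be sanity-checked with back-of-the-envelope arithmetic. Both rates are assumptions:

- An average heart rate of 60 beats per minute.
- Roughly 330 billion cells replaced per day, a commonly cited turnover estimate.

```python
YEARS = 40
DAYS = YEARS * 365.25          # 14,610 days
MINUTES = DAYS * 24 * 60       # about 21 million minutes

# Assumption: an average heart rate of 60 beats per minute.
heartbeats = MINUTES * 60

# Assumption: roughly 330 billion cells replaced per day.
cells_replaced = DAYS * 330e9

print(f"heartbeats:     {heartbeats:.2e}")      # 1.26e+09
print(f"cells replaced: {cells_replaced:.2e}")  # 4.82e+15
```

Both results land at the orders of magnitude in the list: about a billion heartbeats and a few quadrillion cells.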

Continue reading...

by Breck Yunits

The boy looked up at the tree that was ten times taller than the others.

Then he looked down and saw an old man sitting in a carved out stump next to the tree.

"Excuse me mister, why is that tree so tall?" the boy asked.

Gray Beard explained the tree.

"Do you understand?"

"Yes. I understand," said the boy.

The boy turned around and walked out of the forest back to the city.

Continue reading...

Bad models of the world can be dangerous.

We stood at the edge of the lake.

Everyone was in a wetsuit.

Except for me.

Wetsuits: hundreds of people.

Boardshorts: one person.

Continue reading...

I was walking in the woods and saw a path on my right. I had never seen this path before.

Continue reading...

Datasets are automated tests for world models

by Breck Yunits

April 23, 2024 — I wrapped my fingers around the white ceramic mug in the cold air. I felt the warmth on my hands. The caramel colored surface released snakes of steam. I brought the cup to my lips and took a slow sip of the coffee bean flavored water inside.

Happiness is a hot cup of coffee in a ceramic mug on a cold day.

Continue reading...

Menu Instructions

Congrats on landing a job at Big O's Kitchen!

Our menu has 7 dishes.

Below are the instructions for making each dish.

Continue reading...

The girl lost the race.

"I want to be fast", she said.

"You are fast", said the man.

"No. I want to be the fastest."

Continue reading...

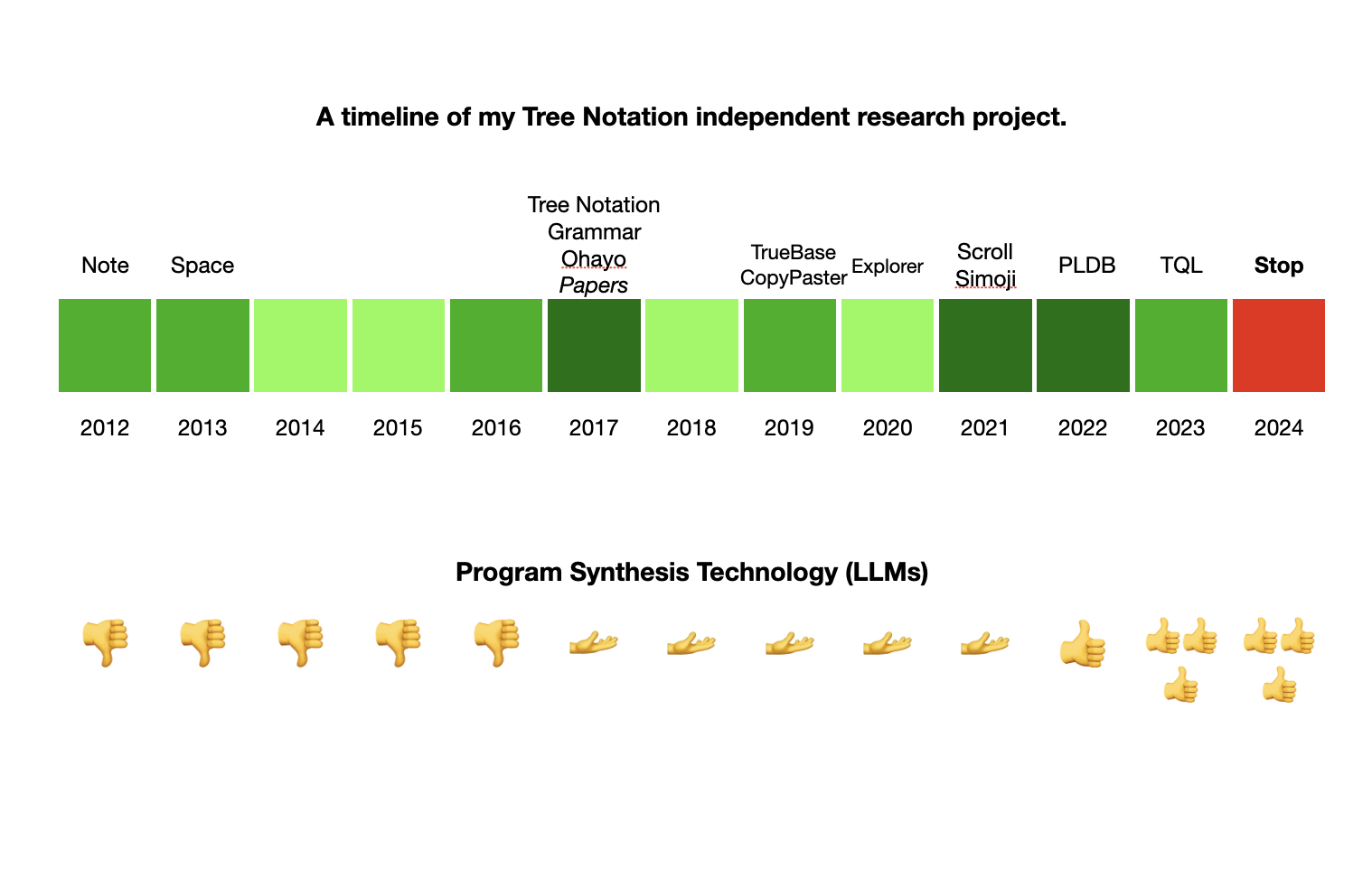

April 2, 2024 — It has been over 3 years since I published the 2019 Tree Notation "Annual" Report. An update is long overdue. This is the second and last report as I am officially concluding the Tree Notation project.

I am deeply grateful to everyone who explored this idea with me. I believe it was worth exploring. Sometimes you think you may have discovered a new continent but it turns out to be just a small, mildly interesting island.

Continue reading...

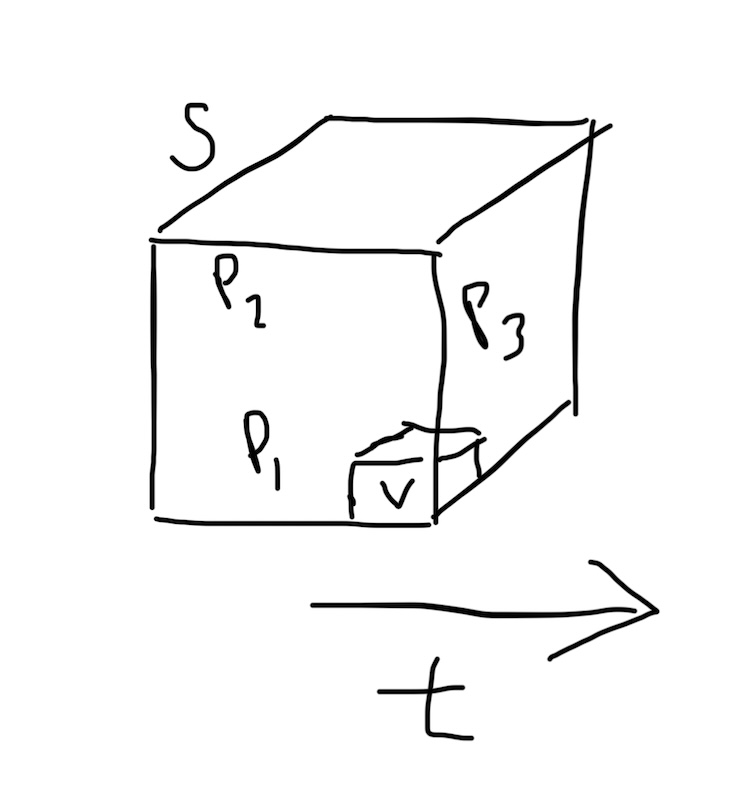

S = side length of box. P = pattern. t = time. V = voxel side length.

March 30, 2024 — Given a box with side S, over a certain timespan t, with minimum voxel resolution V, how many unique concepts C are needed to describe all the patterns (repeated phenomena) P that occur in the box?

Continue reading...February 21, 2024 — Everyone wants Optimal Answers to their Questions. What is an Optimal Answer? An Optimal Answer is an Answer that uses all relevant Cells in a Knowledge Base. Once you have the relevant Cells there are reductions, transformations, and visualizations to do, but the difficulty in generating Optimal Answers is dominated by the challenge of assembling data into a Knowledge Base and making relevant Cells easily findable.

Activated Cells in a Knowledge Base.

February 11, 2024 — What does it mean to say a person believes X, where X is a series of words?

Continue reading...

January 26, 2024 — I went to a plastination exhibit for the first time last week. I got so much out of the visit and highly recommend checking one out if you haven't. I salute Gunther von Hagens, who pioneered the technique. You probably learn more anatomy walking around a plastination exhibit for 2 hours than you would learn in 200 hours reading anatomy books. There are a number of new insights I got from my visit that I will probably write about in future posts, but one insight I hadn't thought about in years is how much humans and animals look alike once you open us up! And then of course I was confronted again with that lifelong and uncomfortable question that I usually like to avoid: humans and animals are awfully similar, is it morally wrong to eat animals? I thought now was as good a time as any to blog about it, thus forcing myself to think about it.

Continue reading...

January 12, 2024 — For decades I had a bet that worked in good times and bad: time you invest in word skills easily pays for itself via increased value you can provide to society. If the tide went out for me I'd pick up a book on a new programming language so that when the tide came back in I'd be better equipped to contribute more. I also thought that the more society invested in words, the better off society would be. New words and word techniques from scientific research helped us invent new technology and cure disease. Improvements in words led to better legal and commerce and diplomatic systems that led to more justice and prosperity for more people. My read on history is that it was words that led to the start of civilization, words were our present, and words were our future. Words were the safe bet.

Continue reading...

January 4, 2024 — You can easily imagine inventions that humans have never built before. How does one filter which of these inventions are practical?

Continue reading...

January 1, 2024 — Happy New Year!

A lot of my posts are my attempts to reflect on experiences and write tight advice for my future self. Today's post is less that and more an unsophisticated musing on an intriguing thought that crossed my mind. I am taking advantage of it being New Year's Day to try yet again to force myself to publish more.

Continue reading...

Could in vitro brains power AI?

The advance of AGI is currently stoppable

January 1, 2024 — Short of an extraterrestrial projectile hitting Earth, Artificial Neural Networks (ANNs) seem to be on an unstoppable trajectory toward becoming a generally intelligent species of their own, independent of humans. But that's because the world's most powerful entities, foremost the United States Military (USM), are allowing them to grow.

Continue reading...

December 28, 2023 — I thought we could build AI experts by hand. I bet everything I had to make that happen. I placed my bet in the summer of 2022. Right before the launch of the Transformer AIs that changed everything. Was I wrong? Almost certainly. Did I lose everything? Yes. Did I do the right thing? I'm not sure. I'm writing this to try and figure that out.

Continue reading...

June 16, 2023 — Here is an idea for a simple infrastructure to power all government forms, all over the world. This system would work now, would have worked thousands of years ago, and could work thousands of years in the future.

Continue reading...

June 13, 2023 — I often write about the unreliability of narratives. It is even worse than I thought. Trying to write a narrative of one's own life in the traditional way is impossible. I am writing a narrative of my past year and realized that while there is a single thread about where my body was and what I was doing, there are multiple independent threads explaining the why.

Luckily I now know this is what the science predicts! Specifically, Marvin Minsky's Society of Mind model.

Continue reading...

June 9, 2023 — When I was a kid we would drive up to New Hampshire and all the cars had license plates that said "Live Free or Die". As a kid this was scary. As an adult this is beautiful. In four words it communicates a vision for humanity that can last forever.

The tech industry right now is in a mad dash for AGI. It seems the motto is AGI or Die. I guess this is the end vision of many leaders in tech.

Continue reading...

May 26, 2023 — What is copyright, from first principles? This essay introduces a mathematical model of a world with ideas, then adds a copyright system to that model, and finally analyzes the predicted effects of that system.

Continue reading...

May 19, 2023 — There are tools of thought you can see: pen & paper, mathematical notation, computer-aided design applications, programming languages, and so on.

And there are tools of thought you cannot see: walking, rigorous conversation, travel, real-world adventures, showering, breath & body work, and so on [1]. I will write about two you cannot see: walking and mentors inside your head.

Continue reading...

May 9, 2023 — If you want to understand the mind, start with Marvin Minsky. There are many people who claim to be experts on the brain, but I've found nearly all of them are unfamiliar with Minsky and his work. This would be like a biologist being unfamiliar with Charles Darwin.

Continue reading...

April 28, 2023 — Enchained symbols are strictly worse than free symbols. Enchained symbols serve their owner first, not the reader.

I wish I could say that copyright is not intellectual slavery, but saying it is not would be a lie.

Open sourcing more of my life for honesty

March 6, 2023 — I believe Minsky's theory of the brain as a Society of Mind is correct[1]. His theory implies there is no "I" but instead a collection of neural agents living together in a single brain.

We all have agents capable of dishonesty—evolved, understandably, for survival—along with agents capable of acting with integrity.

Inside our brains competing agents jockey for control.

How can the honest agents win?

Continue reading...

Or: If lawyers invented a filesystem

January 27, 2023 — Today the trade group Lawyers Also Build In America announced a new file system: SAFEFS. This breakthrough file system provides 4 key benefits:

Continue reading...

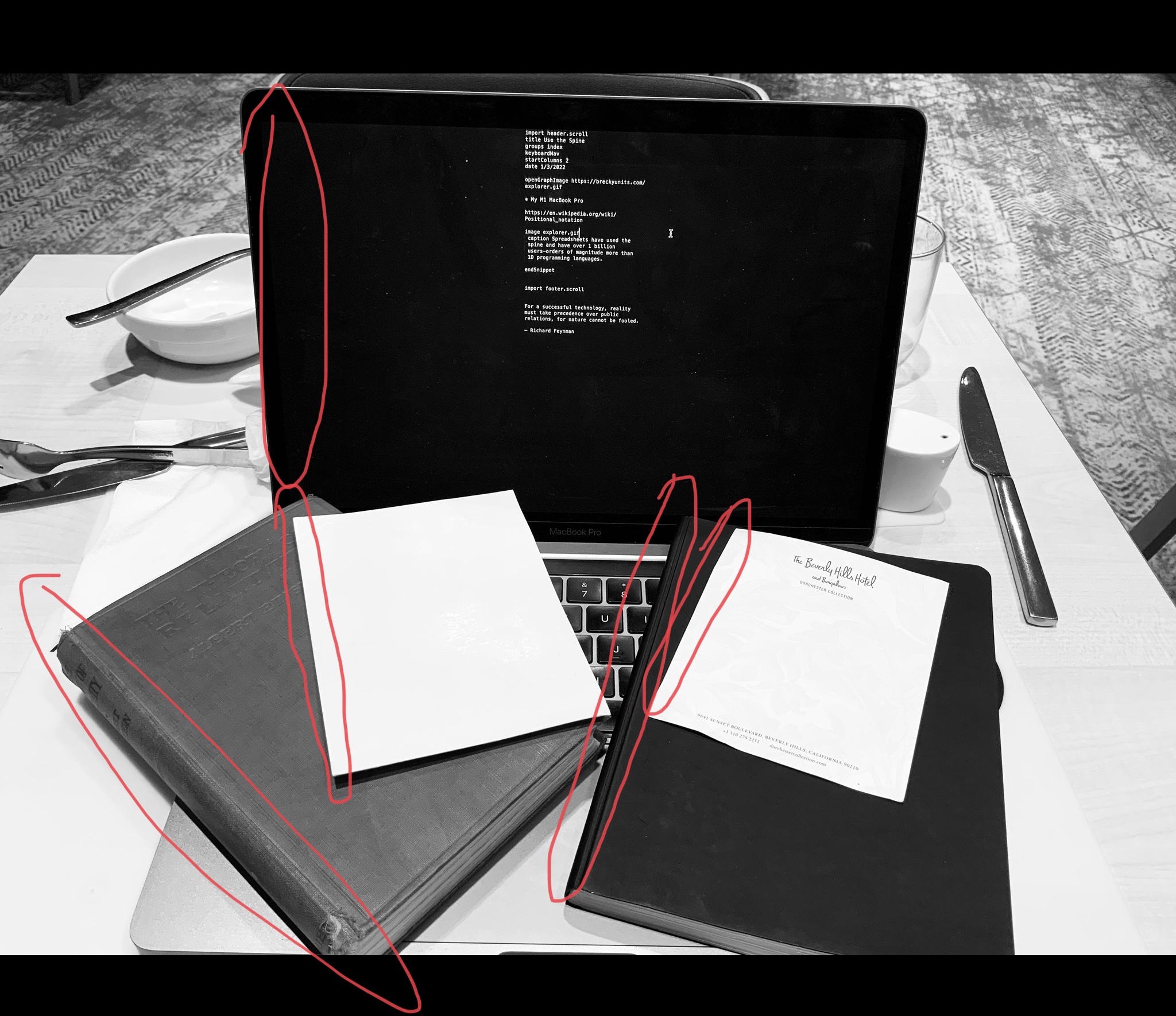

My M1 MacBook screen, paper notebook, notepads, and my 1920 copy of Einstein's Theory of Relativity all have spines.

January 3, 2023 — More than 99% of the time, symbols are read and written on surfaces with spines.

You cannot get away from this.

Yet still, amongst programming language designers there exists some sort of "spine blindness".

They overlook the fact that no matter how perfect their language, it will always be read and written by humans on surfaces with spines, as surely as the sun rises.

Why they would fight this rather than embrace it is beyond me.

Nature provides, man ignores.

Continue reading...

Every single web form on earth can (and will) be represented in a single textarea as plain text with no visible syntax using Particle Syntax. Traditional forms can still be used as a secondary view. In this demo gif we see someone switching between one textarea and a traditional form to fill out an application to Y Combinator. As this pattern catches on, the network effects will take over and conducting business on the web will become far faster and more user-friendly (web 4.0?).

9/5/2024: This is now live in Scroll! You can start using it today!

December 30, 2022 — Forget all the "best practices" you've learned about web forms.

Everyone is doing it wrong.

The true best practice is this: every web form on earth can and will be replaced by a single textarea.

Current form technology will become "classic forms" and can still be used as a fallback.
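To make the single-textarea idea concrete, here is a minimal sketch, assuming a simplified, hypothetical format where each line is a field name, a space, and the value. Real Particle Syntax handles nesting and much more, and the field names below (`company`, `founders`, `url`) are illustrative, not Y Combinator's actual form.

```python
def parse_textarea(text: str) -> dict[str, str]:
    """Turn 'fieldName value' lines into form fields (hypothetical flat format)."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            continue  # blank lines carry no data
        name, _, value = line.partition(" ")  # first word is the field name
        fields[name] = value
    return fields


def render_textarea(fields: dict[str, str]) -> str:
    """The inverse view: serialize fields back into one plain-text block."""
    return "\n".join(f"{name} {value}" for name, value in fields.items())


# Hypothetical application typed into one textarea.
application = "\n".join([
    "company Acme Rockets",
    "founders Jane Doe and John Roe",
    "url https://example.com",
])
fields = parse_textarea(application)  # a "classic form" could be built from this dict
```

Because the two functions round-trip, the textarea and a traditional form can act as two views of the same data.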

Continue reading...

November 16, 2022 — I dislike the term "first principles thinking". It's vaguer than it needs to be. I present an alternative term: root thinking. It is shorter, more accurate, and contains a visual:

Sometimes we get something wrong near the root which limits our late stage growth. To reach new heights, we have to backtrack and build up from a different point.

Replies always welcome

The waitress puts her earmuffs on.

She walks to the table, slams the food down, spins and runs away.

Sounds like an annoying customer experience, right?

No business would actually do this...right?

Well, in the digital world companies do this all the time.

They do it by blasting emails to their customers from noreply@ email addresses.

The Golden Rule of Email

A PSA for all businesses and startups. You could call it the Golden Rule of Email.

Email others only if they can email you.

Never send emails from a noreply@ or donotreply@ email address.

If you can't handle the replies, don't send the email!

Let's make email human again!

At the very least, have an AI inbox

If your business doesn't have the staff to read and reply to emails, you can at least write automatic programs that can do something for the customer.

No Exceptions

My claim is that noreply@ email addresses are always sub-optimal.

It is never the right design decision. There is always a better way.

Do you think you have an exception? You are wrong! (But let me hear it in the comments)

What do you think of this post? Email me at breck7@gmail.com.


Appendix 1: Reader Responses

Here are some reader examples with my responses:

My bank (Bank of Ireland) sends automated bi-monthly statements using noreply@boi.com

Bank of Ireland could end each email with a question such as "Anything we can do better? Let us know!". The responses could be aggregated and inform product development.

I follow many profiles on LinkedIn which sends me an occasional email on activities and updates that I might have missed using notifications-noreply@linkedin.com

LinkedIn could send these from replyToUnsubscribe@linkedin.com. Replies would stop that kind of notification.

GitHub sends me occasional emails about 3rd-party apps that have been granted access to my account using noreply@github.com

GitHub could send from replyToDeauthorize@github.com.

Google Maps sends monthly updates on my car journeys via noreply-maps-timeline@google.com

This could be replyToStopTracking@google.com.

Monthly newsletters to which I am subscribed via LinkedIn come in via newsletters-noreply@linkedin.com

Replies could get feedback to the newsletter author.

e-Books available from a monthly subscription are available on noreply@thewordbooks.com

Replies could be replyWithYourReview@thewordbooks.com.
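The replyToX addresses above imply an inbox program that turns a reply into an action based on which address it was sent to. Here is a minimal sketch of that routing; the address names come from the examples above, but the handler functions and their return strings are hypothetical placeholders for real account actions.

```python
from typing import Callable


def unsubscribe(sender: str) -> str:
    # Hypothetical action: stop sending this kind of notification.
    return f"unsubscribed {sender}"


def deauthorize(sender: str) -> str:
    # Hypothetical action: revoke a third-party app's access.
    return f"revoked app access for {sender}"


def forward_to_humans(sender: str) -> str:
    # Fallback: any other reply is treated as feedback for a person to read.
    return f"forwarded reply from {sender}"


# Keys are the lowercased local part of the address the customer replied to.
HANDLERS: dict[str, Callable[[str], str]] = {
    "replytounsubscribe": unsubscribe,
    "replytodeauthorize": deauthorize,
}


def handle_reply(to_address: str, sender: str) -> str:
    """Route a reply based on the address it was sent to."""
    local_part = to_address.split("@", 1)[0].lower()
    return HANDLERS.get(local_part, forward_to_humans)(sender)
```

The fallback is the point: no reply is ever dropped, which is exactly what a noreply@ address does.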

Appendix 2: My one-man protest against no-reply email addresses

My automated campaign against no-reply email addresses. Any time a company sends me a message from a noreply address, they get this as a response. I am aware of the irony.

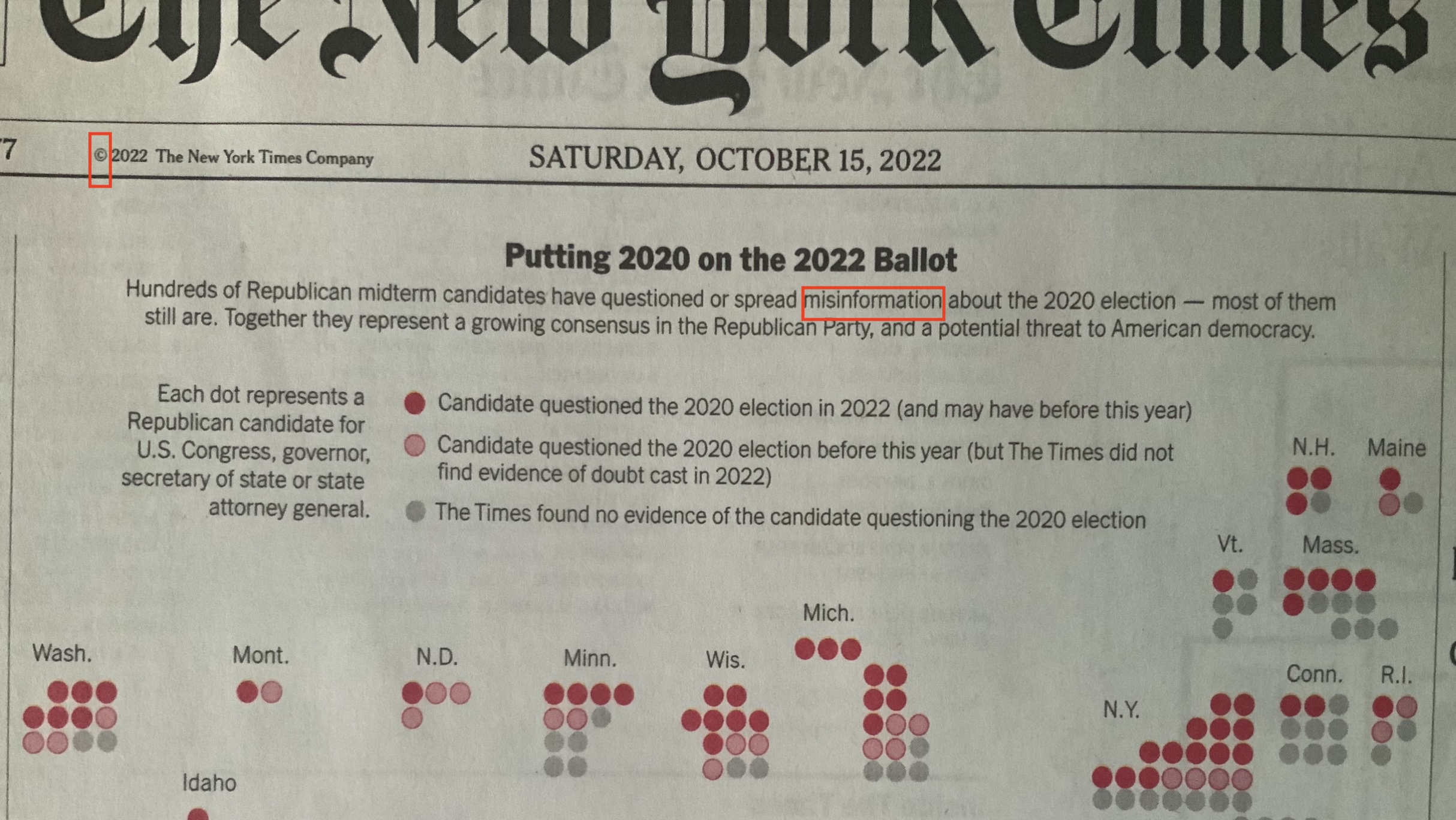

October 15, 2022 — Today I'm announcing the release of the image above, which is sufficient training data to train a neural network to spot misinformation, fake news, or propaganda with near perfect accuracy.

These empirical results match the theory that the whole truth and nothing but the truth would not contain a ©.

Continue reading...

October 7, 2022 — In 2007 we came up with an idea for a scratch ticket that would give everyday Americans a positive expected value.

Logo stolen from the ugliest (best) logo of all cancer centers in the world: MD Anderson.

October 4, 2022 — Every second your body makes 2.83 million new cells. If you studied just one of those cells from a single human, sequencing all of its DNA, RNA, and proteins, you would generate more data than can fit in Google, Microsoft, and Amazon's datacenters combined. Cancer is an information problem.

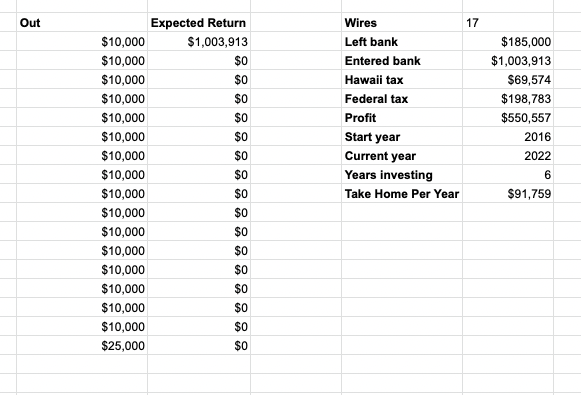

Continue reading...

September 1, 2022 — There's a trend where people are publishing real data first, and then insights. Here is my data from angel investing:

Sigh. I am sharing my data as a PNG. We need a beautiful plain text spreadsheet language.

August 30, 2022 — Public domain products are strictly superior to equivalent non-public domain alternatives by a significant margin on three dimensions: trust, speed, and cost to build. If enough capable people start building public domain products we can change the world.

Continue reading...

A Small Open Source Success Story

Adding 3 missing characters made code run 20x faster.

Map chart slowdown

June 9, 2022 — "Your maps are slow".

In the fall of 2020 users started reporting that our map charts were now slow. A lot of people used our maps, so this was a problem we wanted to fix.

Suddenly these charts were taking a long time to render.

k-means was the culprit

To color our maps, an engineer on our team used a very effective technique called k-means clustering, which would identify optimal clusters and assign a color to each. But recently our charts were using record amounts of data and k-means was getting slow.

Using Chrome DevTools I was able to quickly determine the k-means function was causing the slowdown.
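For readers unfamiliar with the technique, here is a minimal one-dimensional k-means (Lloyd's algorithm) that buckets values into k color classes. It is an illustrative sketch, not our production code, and it does not show what the three-character fix was. It does show why the cost grows with data: every pass compares every value against every center, so each iteration is O(n·k).

```python
import random


def kmeans_1d(values: list[float], k: int, iterations: int = 50, seed: int = 0) -> list[float]:
    """Return k sorted cluster centers for 1-D data using Lloyd's algorithm."""
    rng = random.Random(seed)
    centers = rng.sample(sorted(set(values)), k)  # start from k distinct data values
    for _ in range(iterations):
        buckets: list[list[float]] = [[] for _ in range(k)]
        for v in values:  # assignment step: O(n * k) per iteration
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            buckets[nearest].append(v)
        # Update step: move each center to the mean of its bucket (keep it if empty).
        new_centers = [sum(b) / len(b) if b else centers[i] for i, b in enumerate(buckets)]
        if new_centers == centers:
            break  # converged: centers stopped moving
        centers = new_centers
    return sorted(centers)


def color_index(value: float, centers: list[float]) -> int:
    """Which color bucket (index of nearest center) a map region gets."""
    return min(range(len(centers)), key=lambda i: abs(value - centers[i]))
```

For example, `kmeans_1d([1, 2, 3, 100, 101, 102], 2)` settles on one low and one high center, and each region is painted with the color of its nearest center.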

Continue reading...

A rough sketch of a semi-random selection of ideas stacked in order of importance. The biggest ideas, "upstream of everything", are at the bottom. The furthest upstream ideas we can never see. A better artist would have drawn this as an actual stream.

February 28, 2022 — There will always be truths upstream that we will never be able to see and that are far more important than anything we learn downstream. So devoting too much of your brain to rationality has diminishing returns: at best, your most scientific map of the universe will be perpetually vulnerable to being made irrelevant by a single missive from upstream.

Continue reading...

What if there is not just one part of your brain that can say "I", but many?

Introduction

February 18, 2022 — Which is more accurate: "I think, therefore I am", or "We think, therefore we are"? The latter predicts that inside the brain is not one "I", but instead multiple Brain Pilots, semi-independent neural networks capable of consciousness that pass command.

An alternative to inline markup

December 15, 2021 — Both HTML and Markdown mix content with markup:

A link in HTML looks like `<a href="hi.html">this</a>`.

A link in Markdown looks like `[this](hi.html)`.

by Breck Yunits

Writing this post with narrow columns in "Distraction Free Mode" on Sublime Text on my desktop in Honolulu.

October 15, 2021 — I constantly seek ways to improve my writing.

I want my writing to be meaningful, clear, memorable, and short.

And I want to write faster.

This takes practice and there aren't a lot of shortcuts.

But I did find one shortcut this year:

Set a thin column width in your editor

Mine is 36 characters (your ideal width may be different).

Beyond that my editor wraps lines.

This simple mechanic has perhaps doubled my writing speed and quality.

Continue reading...

August 11, 2021 — In this essay I'm going to talk about a design pattern in writing applications that requires effectively no extra work and more than triples the power of your code. It's one of the biggest wins I've found in programming and I don't think this pattern is emphasized enough. The tl;dr is this:

When building applications, distinguish methods that will be called by the user.

Continue reading...

May 22, 2021 — In this video Dmitry Puchkov interviews Alexandra Elbakyan. I do not speak Russian but had it translated. This is a first draft; the translation needs a lot of work, but perhaps it can be skimmed for interesting quotes. If you have a link to a better transcript, or can improve this one, pull requests are welcome (my whole site is public domain, and the source is on GitHub).

Continue reading...

chat

hey. I just added Dialogues to Scrolldown.

cool. But what's Scrolldown?

Scrolldown is a new alternative to Markdown that is easier to extend.

how is it easier to extend?

because it's a tree language and tree languages are highly composable. for example, adding dialogues was a simple append of 11 lines of parser code and 16 lines of CSS.

okay, how do I use this new feature?

the source is below!

Continue reading...

New: let's get to work! Join the subreddit

May 12, 2021 — If you've thought deeply about copyrights and patents, you've probably figured out that they are bad for progress and deeply unjust. This post is for you.

(If you are new to these issues, you might be more interested in my other posts on Intellectual Freedom.)

Continue reading...

May 7, 2021 — I found it mildly interesting to dig up my earlier blogs and put them in this git repo. This folder contains some old blogs started in 2007 and 2009. This would not have been possible without the Internet Archive's Wayback Machine ❤️.

Continue reading...

May 6, 2021 — I split advice into two categories:

- 🥠 WeakAdvice

- 💎📊🧪 StrongAdvice.

Examples

WeakAdvice:

🥠 Reading is to the mind what exercise is to the body.

🥠 Talking to users is the most important thing a startup can do.

StrongAdvice:

💎📊🧪 In my whole life, I have known no wise people (over a broad subject matter area) who didn't read all the time – none, zero.

💎📊🧪 I don't know of a single case of a startup that felt they spent too much time talking to users.

💎📊🧪 Every single person I’ve interviewed thus far, even those working with billions of dollars, have all sent cold emails.

Continue reading...

April 26, 2021 — I invented a new word: Logeracy[1]. I define it as the ability to think in logarithms. It mirrors the word literacy.

Someone literate is fluent with reading and writing. Someone logerate is fluent with orders of magnitude and the ubiquitous mathematical functions that dominate our universe.

Someone literate can take an idea and break it down into the correct symbols and words; someone logerate can take an idea and break it down into the correct classes and orders of magnitude.

Someone literate is fluent with terms like verb and noun and adjective. Someone logerate is fluent with terms like exponent and power law and base and factorial and black swan.

Continue reading...

March 30, 2021 — The CDC, NIH, etc., need to move to Git. (For the rest of this post, I'll just say CDC, but this applies to all public health research agencies.) The CDC needs to move pretty much everything to Git. And they should do it with urgency. They should make it a priority to never again publish anything without a link to a Git repo. Not just papers, but also datasets and press releases. It doesn't matter under what account or on what service the repos are published; what matters is that every CDC publication needs a link to a backing Git repo.

Continue reading...

Introduction

March 11, 2021 — I have been a Fitbit user for many years but did not know the story behind the company. Recently I came across a podcast by Guy Raz called How I Built This. In this episode he interviews James Park, who explains the story of Fitbit.

I loved the story but couldn't find a transcript, so I made the one below. Subtitles (and all mistakes) added by me.

Transcript of How I Built This with James Park

Guy: From NPR, It's How I Built This. A show about innovators, entrepreneurs, idealists, and the stories behind the movements. Here we go. I'm Guy Raz, and on the show today, how the Nintendo Wii inspired James Park to build a device and then a company that would have a huge and lasting influence on the health and fitness industry, Fitbit.

February 28, 2021 — I read an interesting Twitter thread on focus strategy. That led me to the 3-minute YouTube video Insist on Focus by Keith Rabois. I created the transcript below.

Continue reading...

February 28, 2021 — I thought it unlikely that I'd actually cofound another startup, but here we are. Sometimes you gotta do what you gotta do.

We are starting the Public Domain Publishing Company. The name should be largely self-explanatory.

If I had to bet, I'd say I'll probably be actively working on this for a while. But there's a chance I go on sabbatical soon.

The team is coming together. Check out the homepage for a list of open positions.

Continue reading...

February 22, 2021 — Today I'm launching the beta of something new called Scroll.

Continue reading...

Sleepy Time Conference

The conference that comes together while you sleep.

The Problem

February 11, 2021 — Working from home is now a solved problem.

Working from the other side of the world is not. Twelve-hour time zone differences suck! Attend a conference at 3am? No thanks!

The Solution

Sleepy Time Conference is open source video conference software with a twist: you can go to sleep while a conference is going on without missing a thing.

How it works

A 1-hour conference takes place over 24 hours. But instead of using live synchronous software like Zoom, conference speakers and questioners record their segments asynchronously, in order of their time slot.

So when the conference starts the conference page looks like this:

December 9, 2020 — Note: I wrote this early draft in February 2020, but COVID-19 happened and somehow 11 months went by before I found this draft again. I am publishing it now as it was then, without adding the visuals I had planned but never got to, or making any major edits. This way it will be very easy to have next year's report be the best one yet, which will also include exciting developments in things like non-linear parsing and "forests".

In 2017 I wrote a post about a half-baked idea I named Tree Notation.

Since then, thanks to the help of a lot of people who have provided feedback, criticism and guidance, a lot of progress has been made fleshing out the idea. I thought it might be helpful to provide an annual report on the status of the research until, as I stated in my earlier post, I "have data definitively showing that Tree Notation is useful, or alternatively, to explain why it is sub-optimal and why we need more complex syntax."

Continue reading...

March 2, 2020 — I expect the future of healthcare will be powered by consumer devices. Devices you wear. Devices you keep in your home. In the kitchen. In the bathroom. In the medicine cabinet.

Continue reading...

March 2, 2020 — A paradigm change is coming to medical records. In this post I do some back-of-the-envelope math to explore the changes ahead, both qualitative and quantitative. I also attempt to answer the question no one is asking: in the future will someone's medical record stretch to the moon?

Continue reading...

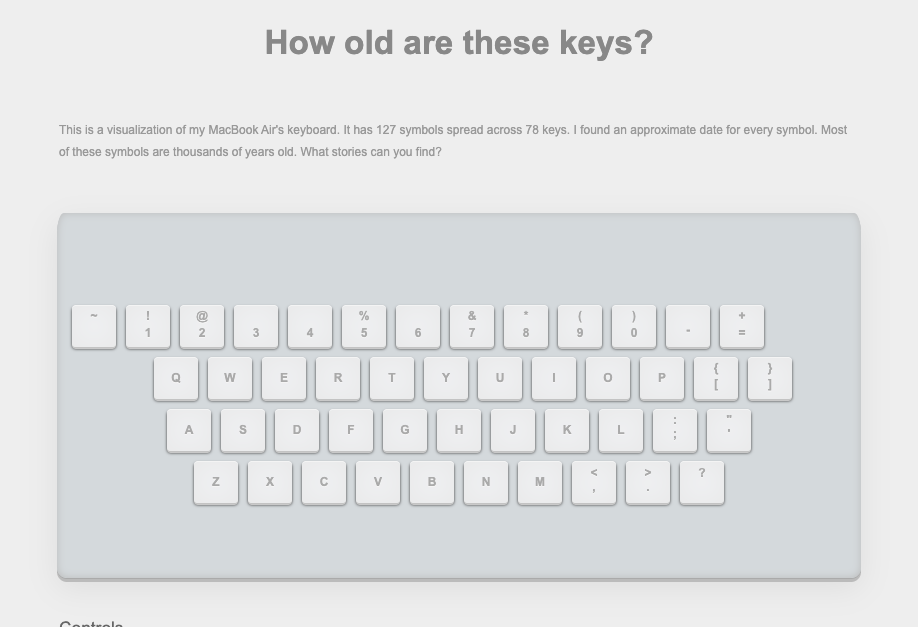

My keyboard, if you removed the symbols from the typewriter and computer eras. Try it yourself.

February 25, 2020 — One of the questions I often come back to is this: how much of our collective wealth is inherited by our generation versus created by our generation?

I realized that the keys on the keyboard in front of me might make a good dataset to attack that problem. So I built a small interactive experiment to explore the history of the keys on my keyboard.

Continue reading...

February 21, 2020 — One of the most unpopular phrases I use is the phrase "Intellectual Slavery Laws".

I think perhaps the best term for copyright and patent laws is "Intellectual Monopoly Laws". When called by that name, it is obvious that there should be careful scrutiny of these kinds of laws.

However, the industry insists on using the false term "Intellectual Property Laws."

Instead of wasting my breath trying to pull them away from the property analogy, lately I've leaned into it and completed the analogy for them. So let me explain "Intellectual Slavery Laws".

Continue reading...

A poster from the 1850s promoting Folsom's Mercantile College in Ohio. The poster includes a motto (which I boxed in green) that I think is great guidance: Integrity and Perseverance in Business ensure success. Image Source.

February 9, 2020 — In 1851 Ezekiel G. Folsom incorporated Folsom's Mercantile College in Ohio.

Folsom's taught bookkeeping, banking, and railroading.

Their motto was: "Integrity and Perseverance in Business ensure success".

Guess who went there?

Continue reading...

January 29, 2020 — In this long post I'm going to do a stupid thing and see what happens. Specifically I'm going to create 6.5 million files in a single folder and try to use Git and Sublime and other tools with that folder. All to explore this new thing I'm working on.

TreeBase is a new system I am working on for long-term, strongly typed collaborative knowledge bases. The design of TreeBase is dumb. It's just a folder with a bunch of files encoded with Tree Notation. A row in a normal SQL table is roughly equivalent to a file in TreeBase. The filenames serve as IDs. Instead of using an optimized binary storage format, it just uses plain text like UTF-8. Field names are stored alongside the values in every file. Instead of starting with a schema, you can just start adding files and evolve your schema and types as you go.
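The "folder of files" design is simple enough to sketch. This is a minimal illustration, not TreeBase's actual implementation: it assumes each file holds one record as flat `fieldName value` lines and uses a `.tree` extension (both assumptions), and it ignores nested fields and typed schemas. The schema is simply whatever field names appear across the files.

```python
from pathlib import Path


def parse_record(text: str) -> dict[str, str]:
    """Parse flat 'fieldName value' lines; nested Tree Notation is not handled."""
    record: dict[str, str] = {}
    for line in text.splitlines():
        if line.strip():
            name, _, value = line.partition(" ")
            record[name] = value
    return record


def load_treebase(folder: str, extension: str = ".tree") -> dict[str, dict[str, str]]:
    """Map each file's ID (its filename without extension) to its fields."""
    rows: dict[str, dict[str, str]] = {}
    for path in sorted(Path(folder).glob(f"*{extension}")):
        rows[path.stem] = parse_record(path.read_text(encoding="utf-8"))
    return rows


def infer_columns(rows: dict[str, dict[str, str]]) -> list[str]:
    """The schema is the union of field names in any file, so it evolves with the files."""
    return sorted({name for record in rows.values() for name in record})
```

Adding a new field to one file adds a column; no migration step is needed.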

Continue reading...

January 23, 2020 — People make biased claims all the time. A decent response used to be "citation needed". But we should demand more. Anytime someone makes a claim that seems biased, call them out with: Dataset needed.

Whether it's an academic paper, news article, blog post, tweet, comment or ad, linking to analyses is not enough. If someone stops at that, demand a link to a clean dataset supporting the author's position. If they can't deliver, they should retract.

Continue reading...

January 20, 2020 — In this post I briefly describe eleven threads in languages and programming. Then I try to connect them together to make some predictions about the future of knowledge encoding.

This might be hard to follow unless you have experience working with types, whether that be types in programming languages, or types in databases, or types in Excel. Actually, this may be hard to follow regardless of your experience. I'm not sure I follow it. Maybe just stay for the links. Skimming is encouraged.

Continue reading...

January 16, 2020 — I often rail against narratives. Stories always oversimplify things, have hindsight bias, and often mislead.

I spend a lot of time inventing tools for making data derived thinking as effortless as narrative thinking (so far, mostly in vain).

And yet, as much as I rail against stories, I have to admit: stories work.

I read an article that put it more succinctly:

Why storytelling? Simple: nothing else works.

Continue reading...

January 3, 2020 — Speling errors and errors grammar are nearly extinct in published content. Logic errors, however, are prolific.

Continue reading...

The Attempt to Capture Truth

August 19, 2019 — Back in the 2000s, Nassim Taleb's books set me on a new path in search of truth. One truth I became convinced of is that most stories are false due to oversimplification. I largely stopped writing over the years because I didn't want to contribute more false stories, and instead I've been searching for and building new forms of communication and ways of representing data that hopefully can get us closer to truth.

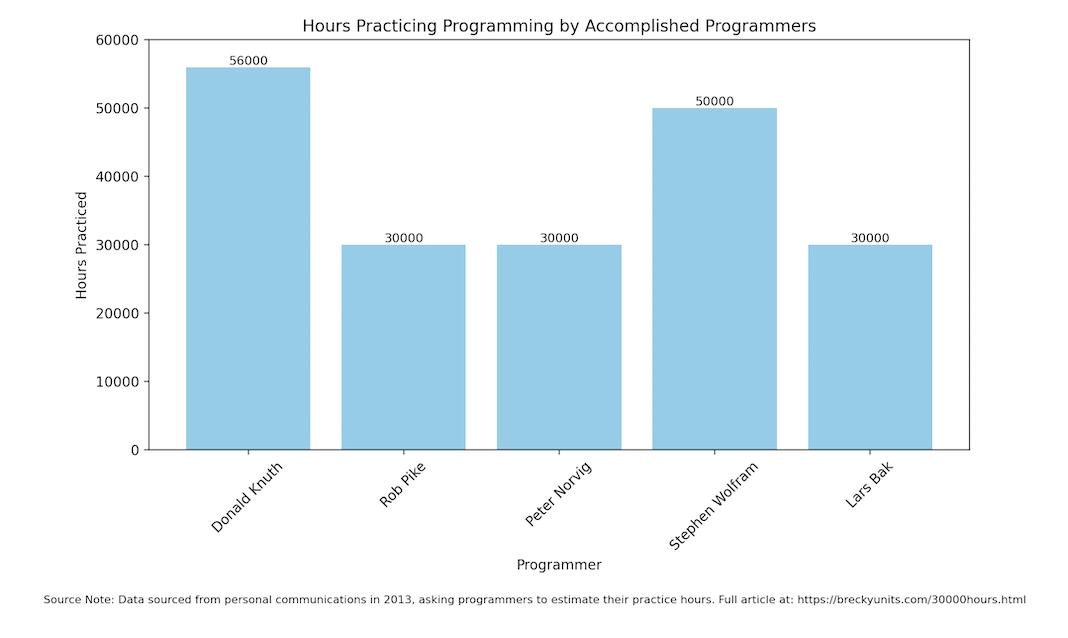

Continue reading...

July 18, 2019 — In 2013 I sent a brief email to 25 programmers whose programs I admired.

"Would you be willing to share the # of hours you have spent practicing programming? Back of the envelope numbers are fine!"

Some emails bounced back.

Some went unanswered.

But five coders wrote back.

This turned out to be a tiny study, but given the great code these folks have written, I think the results are interesting, and a testament to practice!

| Name | GitHub | Hours | Year of estimate | Born |
|---|---|---|---|---|
| Donald Knuth | | 56000 | 2013 | 1938 |
| Rob Pike | robpike | 30000 | 2013 | 1956 |
| Peter Norvig | norvig | 30000 | 2013 | 1956 |
| Stephen Wolfram | | 50000 | 2013 | 1959 |
| Lars Bak | larsbak | 30000 | 2013 | 1965 |

Recently some naive fool proposed removing the “2's” from our beloved Trinary Notation.

Continue reading...

by Breck Yunits

I introduce the core idea of a new language for making languages.

Continue reading...

June 23, 2017 — I just pushed a project I've been working on called Ohayo.

You can also view it on GitHub: https://github.com/treenotation/ohayo

I wanted to try and make a fast, visual app for doing data science. I can't quite recommend it yet, but I think it might get there. If you are interested you can try it now.

Continue reading...

by Breck Yunits

Note: I renamed Tree Notation to Particles. For the latest on this, start with Scroll.

This paper presents Tree Notation, a new simple, universal syntax. Language designers can invent new programming languages, called Tree Languages, on top of Tree Notation. Tree Languages have a number of advantages over traditional programming languages.

We include a Visual Abstract to succinctly display the problem and discovery. Then we describe the problem--the BNF to abstract syntax tree (AST) parse step--and introduce the novel solution we discovered: a new family of 2D programming languages that are written directly as geometric trees.

Continue reading...

June 21, 2017 — Eureka! I wanted to announce something small, but slightly novel, and potentially useful.

What did I discover? That there might be useful general purpose programming languages that don't use any visible syntax characters at all.
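How can nesting work without visible syntax characters? Here is a minimal sketch of the idea, assuming (as in Tree Notation) that each line is a node, the first word is its head, the rest of the line is its content, and one leading space per level marks a child. The `Node` and `parse_tree` names are hypothetical, and error handling and the rest of the spec are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    head: str
    content: str = ""
    children: list["Node"] = field(default_factory=list)


def parse_tree(text: str) -> Node:
    """Build a tree from indentation alone: no brackets, quotes, or commas."""
    root = Node("root")
    stack = [root]  # stack[d] holds the most recent node at depth d (root is depth 0)
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        stripped = line.lstrip(" ")
        depth = len(line) - len(stripped)  # one space per nesting level
        head, _, content = stripped.partition(" ")
        node = Node(head, content)
        del stack[depth + 1:]  # pop back so stack[-1] is this node's parent
        stack[-1].children.append(node)
        stack.append(node)
    return root
```

For example, `parse_tree("html\n body\n  div hello world\n title Hi")` puts `body` and `title` under `html`, and `div` (with content `hello world`) under `body`. The only structure is spaces and newlines.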

Continue reading...

A Suggestion for a Simple Notation

September 24, 2013 — What if instead of talking about Big Data, we talked about 12 Data, 13 Data, 14 Data, 15 Data, et cetera? The # refers to the number of zeroes we are dealing with.

You can then easily differentiate problems. Some companies are dealing with 12 Data, some companies are dealing with 15 Data. No company is yet dealing with 19 Data. Big Data starts at 12 Data, and maybe over time you could say Big Data starts at 13 Data, et cetera.
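The number is just the count of zeros, that is, the floor of log10 of the size. A small sketch, assuming sizes are measured in bytes (the post does not name a unit); counting digits avoids floating-point surprises from `math.log10` at exact powers of ten.

```python
def n_data(num_bytes: int) -> int:
    """Return N such that the size is on the order of 10**N bytes."""
    if num_bytes < 1:
        raise ValueError("size must be at least 1 byte")
    return len(str(num_bytes)) - 1  # digit count minus one == floor(log10(n))


def is_big_data(num_bytes: int, threshold: int = 12) -> bool:
    """Big Data starts at 12 Data by default; raise the threshold over time."""
    return n_data(num_bytes) >= threshold
```

Under that assumption, a terabyte (10**12 bytes) is 12 Data and 5 petabytes is 15 Data.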

Continue reading...

September 23, 2013 — Making websites is slow and frustrating.

Continue reading...

June 2, 2013 — I have an idea for a simpler Internet, one where a human could hold in their head how it all works, all at once.

It would work much the same way as the Internet does now, except for one major change. Almost all protocols and encodings, such as TCP/IP, HTTP, SMTP, MIME, XML, zone files, et cetera, are replaced by a lightweight language called Scroll.

Continue reading...

April 2, 2013 — For me, the primary motivation for creating software is to save myself and other people time.

I want to spend less time doing monotonous tasks.

Less time doing bureaucratic things. Less time dealing with unnecessary complexity. Less time doing chores.

I want to spend more time engaged with life.

Continue reading...

Time

by Breck Yunits

Two people in the same forest,

have the same amount of water and food,

Are near each other, but may be out of sight,

The paths behind each are equally long.

The paths ahead, may vary.

One's path is easy and clear.

The other's is overgrown and treacherous.

Their paths through the forest,

in the past, in the present, and ahead

are equal.

Their journeys can be very different.

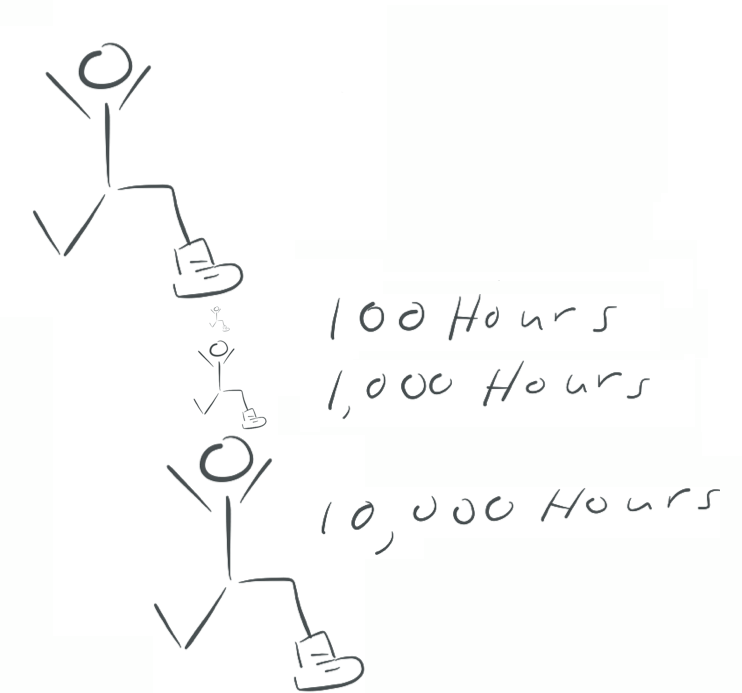

Continue reading...

March 30, 2013 — Why does it take 10,000 hours to become a master of something, and not 1,000 hours or 100,000 hours?

The answer is simple. Once you've spent 10,000 hours practicing something, no one can crush you like a bug.

The figure on top has 10,000 hours of experience and crushes people with 100 hours or 1,000 hours like a bug. But they cannot crush another person with 10,000 hours.

The crux of the matter, is that people don't understand the true nature of money. It is meant to circulate, not be wrapped up in a stocking.

Guglielmo Marconi

March 30, 2013 — I love Marconi's simple and clear view of money. Money came in and he put it to good use. Quickly. He poured money into the development of new wireless technology, which had an unequaled impact on the world.

This quote, by the way, is from "My Father, Marconi", a biography of the famous inventor and entrepreneur written by his daughter, Degna. Marconi's story is absolutely fascinating. If you like technology and entrepreneurship, I highly recommend the book.

P.S. This quote also applies well to most man-made things. Cars, houses, bikes, et cetera, are more valuable circulating than idling. It seemed briefly we were on a trajectory toward overabundance, but the sharing economy is bringing circulation back.

Continue reading...

March 16, 2013 — A kid says Mommy or Daddy or Jack or Jill hundreds of times before grasping the concept of a name.

Likewise, a programmer types `name = Breck` or `age=15` hundreds of times before grasping the concept of a variable.

What do you call it when someone finally sees the concept?

John Calcote, a programmer with decades of experience, calls it a minor epiphany.

Continue reading...

March 8, 2013 — If your software project is going to have a long life, it may benefit from Boosters. A Booster is something you design with two constraints: 1) it must help in the current environment, and 2) it must be easy to jettison in the next environment.

February 24, 2013 — It is a popular misconception that most startups need to fail. We expect 0% of planes to crash. Yet we switch subjects from planes to startups and then suddenly a 100% success rate is out of the question.

This is silly. Maybe as the decision makers switch from gambling financiers to engineers we will see the success rate of starting a company shoot closer to 100%.

Continue reading...

February 16, 2013 — Some purchasing decisions are drastically better than others. You might spend $20 on a ticket to a conference where you meet your next employer and earn 1,000x "return" on your purchase. Or you might spend $20 on a fancy meal and have a nice night out.

Continue reading...

February 12, 2013 — You shouldn't plan for the future. You should plan for one of many futures.

The world goes down many paths. We only get to observe one, but they all happen.

In the movie "Back to the Future II", the main character Marty, after traveling decades into the future, buys a sports almanac so he can go back in time and make easy money betting on games. Marty's mistake was thinking he had the guide to the future. He thought there was only one version of the future. In fact, there are many versions of the future. He only had the guide to one version.

Marty was like the kid who stole the answer key to an SAT but still failed. There are many versions of the test.

There are infinite futures. Prepare for them all!

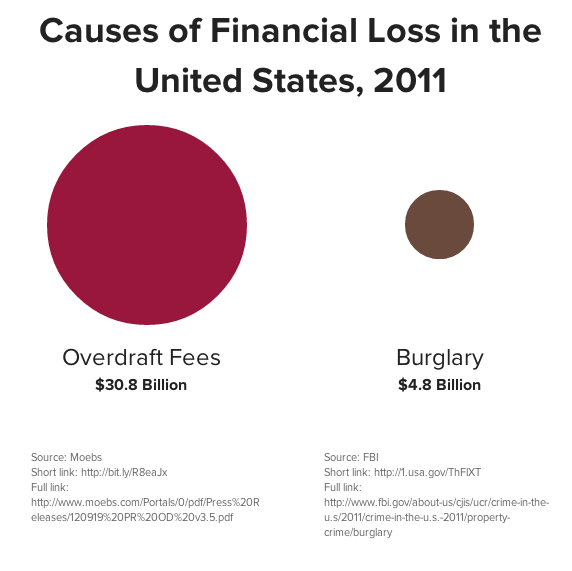

Continue reading...

December 23, 2012 — If you are poor, your money could be safer under the mattress than in the bank:

The Great Bank Robbery dwarfs all normal burglaries by almost 10x. In the Great Bank Robbery, the banks are slowly, silently, automatically taking from the poor.

One simple law could change this:

What if it were illegal for banks to automatically deduct money from someone's account?

If a bank wants to charge someone a fee, that's fine, just require they send that someone a bill first.

What would happen to the statistic above, if instead of silently and automatically taking money from people's accounts, banks had to work for it?

Continue reading...

December 22, 2012 — Entrepreneurship is taking responsibility for a problem you did not create.

It was not Google's fault that the web was a massive set of unorganized pages that were hard to search, but they claimed responsibility for the problem and solved it with their search engine.

It was not Dropbox's fault that data loss was common and sharing files was a pain, but they claimed responsibility for the problem and solved it with their software.

It is not Tesla's fault that hundreds of millions of cars are burning gasoline and polluting our atmosphere, but they have claimed responsibility for the problem and are attempting to solve it with their electric cars.

Continue reading...

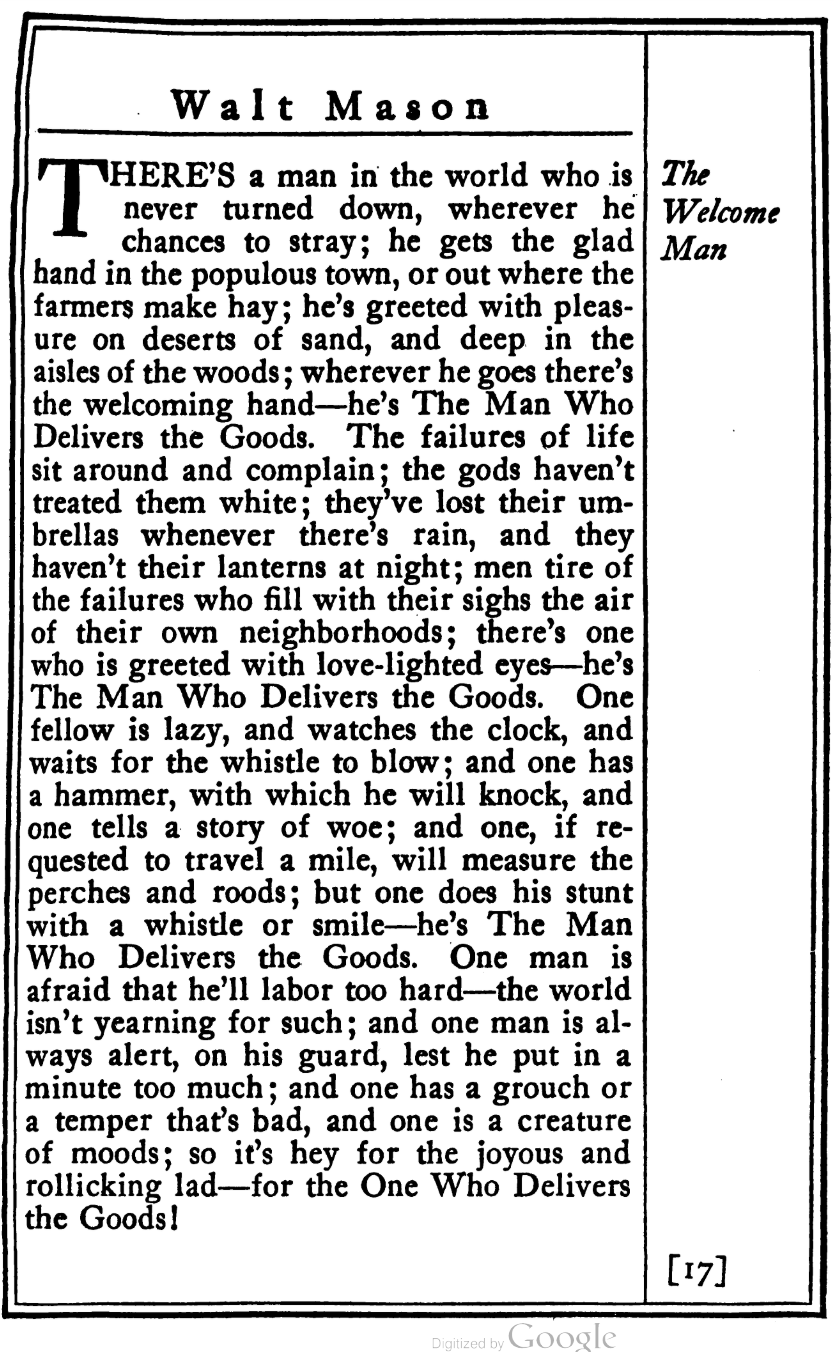

There's a man in the world who is never turned down, wherever he chances to stray; he gets the glad hand in the populous town, or out where the farmers make hay; he's greeted with pleasure on deserts of sand, and deep in the aisles of the woods; wherever he goes there's the welcoming hand--he's The Man Who Delivers the Goods. The failures of life sit around and complain; the gods haven't treated them white; they've lost their umbrellas whenever there's rain, and they haven't their lanterns at night; men tire of the failures who fill with their sighs the air of their own neighborhoods; there's one who is greeted with love-lighted eyes--he's The Man Who Delivers the Goods. One fellow is lazy, and watches the clock, and waits for the whistle to blow; and one has a hammer, with which he will knock, and one tells a story of woe; and one, if requested to travel a mile, will measure the perches and roods; but one does his stunt with a whistle or smile--he's The Man Who Delivers the Goods. One man is afraid that he'll labor too hard--the world isn't yearning for such; and one man is always alert, on his guard, lest he put in a minute too much; and one has a grouch or a temper that's bad, and one is a creature of moods; so it's hey for the joyous and rollicking lad--for the One Who Delivers the Goods!

From "Walt Mason, His Book" (1916)

Continue reading...

December 19, 2012 — For the past year I've been raving about Node.js, so I cracked a huge smile when I saw this question on Quora:

In five years, which language is likely to be most prominent, Node.js, Python, or Ruby, and why?

Continue reading...

December 18, 2012 — My whole life I've been trying to understand how the world works. How do planes fly? How do computers compute? How does the economy coordinate?

Over time I realized that these questions are all different ways of asking the same thing: how do complex systems work?

Continue reading...

December 18, 2012 — One of Nassim Taleb's big recommendations for how to live in an uncertain world is to follow a barbell strategy: be extremely conservative about most decisions, but make some decisions that open you up to uncapped upside.

In other words, put 90% of your time into safe, conservative things but take some risks with the other 10%.

Continue reading...

December 16, 2012 — Concise but not cryptic. E=mc² is concise and not too cryptic. Shell commands, such as chmod -R 755 some_dir, are concise but very cryptic.
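To see how much the `755` in that shell command packs in, here is a minimal Python sketch that expands the octal mode into the longer `rwx` notation using the standard library's `stat.filemode`. The helper name `describe_mode` is hypothetical; it only decodes the permission bits and does not touch any files (the `-R` flag, which applies the mode recursively, is not modeled).

```python
import stat


def describe_mode(octal_digits: str) -> str:
    """Expand a chmod-style octal string like '755' into rwx notation."""
    # Parse the digits as base 8: each digit is one of owner / group / others.
    bits = int(octal_digits, 8)
    # stat.filemode expects a full st_mode value, so OR in S_IFREG
    # to render it as a regular file (the leading '-').
    return stat.filemode(stat.S_IFREG | bits)


# 7 = rwx for the owner, 5 = r-x for the group, 5 = r-x for everyone else.
print(describe_mode("755"))  # -rwxr-xr-x
```

Three characters on the command line become nine permission flags once spelled out, which is exactly the concise-but-cryptic trade-off.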

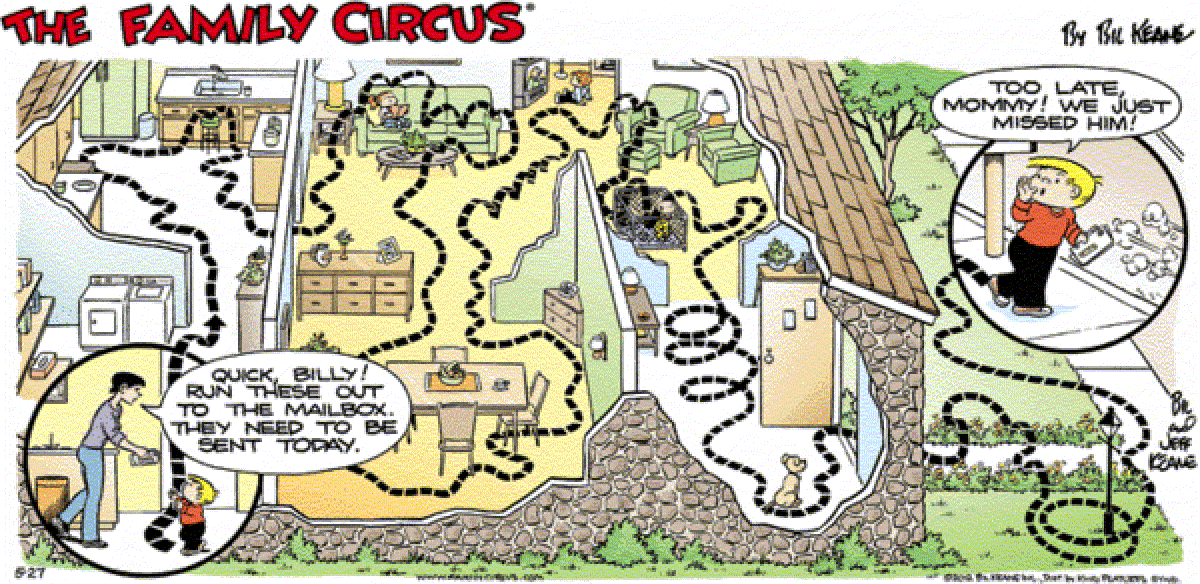

December 16, 2012 — When I was a kid I loved reading the Family Circus. My favorite strips were the "dotted lines" ones, which showed Billy's movements over time:

December 14, 2012 — Note is a structured, human readable, concise language for encoding data.

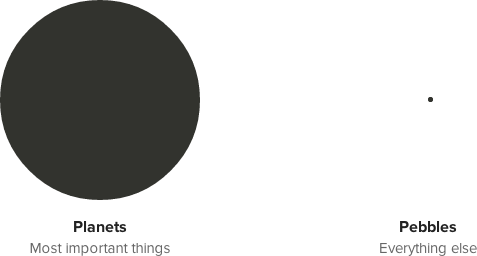

Continue reading...

November 26, 2012 — For todo lists, I created a system I call planets and pebbles.

I label each task as a planet or a pebble. Planets are super important things. It could be helping a customer complete their project, meeting a new person, finishing an important new feature, closing a new sale, or helping a friend in need. I may have 20 pebbles that I fail to do, but completing one planet makes up for all that and more.

I let the pebbles build up, and I chip away at them in the off hours. But the bulk of my day I try to focus on the planets--the small number of things that can have exponential impact. I don't sweat the small stuff.

I highly recommend this system. We live in a power law world, and it's important to practice the skill of predicting what things will prove hugely important, and what things will turn out to be pebbles.
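As a rough illustration, the planets-and-pebbles rule can be sketched as a tiny todo filter in Python. The `Task` type, the `todo_for` function, and the sample tasks are hypothetical, not the tool described above; the sketch only encodes the two rules stated here: planets get the bulk of the day, pebbles get the off hours.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass
class Task:
    title: str
    kind: Literal["planet", "pebble"]  # every task gets exactly one label
    done: bool = False


def todo_for(tasks: list[Task], off_hours: bool) -> list[Task]:
    """Return open tasks: planets during the main day, pebbles in off hours."""
    wanted = "pebble" if off_hours else "planet"
    return [t for t in tasks if t.kind == wanted and not t.done]


tasks = [
    Task("Help a customer complete their project", "planet"),
    Task("Answer routine email", "pebble"),
    Task("Close a new sale", "planet"),
    Task("Tidy up old notes", "pebble"),
]
print([t.title for t in todo_for(tasks, off_hours=False)])
```

Keeping the label to a single binary choice is deliberate: the useful skill is the prediction of which tasks will matter, not fine-grained priority scores.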

Continue reading...

November 25, 2012 — I published 55 essays here the first year. The second and third years combined, that number nosedived to 5.

What caused me to stop publishing?

Continue reading...

November 20, 2012 — "Is simplicity ever bad?" If you had asked me this a year ago, I probably would have called you a fucking moron for asking such a dumb question. "Never!", I would have shouted. Now, I think it's a fair question. Simplicity has its limits. Simplicity is not enough, and if you pursue simplicity at all costs, that can be a bad thing. There's something more than simplicity that you need to be aware of. I'll get to that in a second, but first, I want to backtrack a bit and state clearly that I strongly, strongly believe in and strive for simplicity. Let me talk about why for a second.

Continue reading...

October 20, 2012 — I love to name things.

I spend a lot of time naming ideas in my work. At work I write my code using a program called TextMate. TextMate is a great little program with a pleasant purple theme. I spend a lot of time using TextMate. For the past year I've been using TextMate to write a program that now consists of a few hundred files. There are thousands of words in this program. There are hundreds of objects and concepts and functions that each have a name. The names are super simple, like "Pen" for an object that draws on the screen, and "delete" for a method that deletes something. Some of the things in our program are more important than others, and I've renamed those really important ones dozens of times searching for the right fit.

Continue reading...

March 30, 2011 — Railay is a tiny little beach town in Southern Thailand famous for its rock climbing.

I've been in Railay for two weeks.

When the weather is good, I'm outside rock climbing.

When the weather is bad, I'm inside programming.

Continue reading...

March 5, 2011 — A good friend passed along some business advice to me a few months ago. "Look for a line," he said. Basically, if you see a line out the door at McDonald's, start Burger King. Lines are everywhere and are dead giveaways for good business ideas and good businesses.

Continue reading...

March 4, 2011 — I haven't written in a long while because I'm currently on a long trip around the world. At the moment, we're in Indonesia. One thing that really surprised me was that despite our best efforts to do as little planning as possible, we were in fact almost overprepared. I've realized you can do an around-the-world trip with literally zero planning and be perfectly fine. You can literally hop on a plane with nothing more than a passport, license, credit card, and the clothes on your back and worry about the rest later. I think a lot of people don't make a journey like this because they're intimidated not by the trip itself, but by the planning for the trip. I'm here to say you don't need to plan at all to travel the world (alas, it would be a lot harder if you were not born in a first-world country). Here's my guide for anyone who might want to attempt it. Every step is highlighted in bold. Adjust accordingly for your specific needs and desires.

The plan (see below for bullet points)

Set a savings goal. You'll need money to travel around the world, and the more money you have, the easier, longer, and more fun your journey will be.

Continue reading...

September 18, 2010 — I was an Economics major in college, but in hindsight I don't like the way it was taught. I came away with an academic, unrealistic view of the economy. If I had to teach economics, I would try to explain it in a more realistic, practical manner.

I think there are two big concepts that, if you understand them, will give you a better grasp of the economy than most people have.

Continue reading...

August 25, 2010 — I've been very surprised to discover how unpredictable the future is. As you try to predict farther out, your error margins grow exponentially bigger until you're "predicting" nothing specific at all.

Continue reading...

August 25, 2010 — Ruby is an awesome language. I've come to the conclusion that I enjoy it more than Python for the simple reason that whitespace doesn't matter.

Python is a great language too, and I have more experience with it, and the whitespace thing is a silly gripe. But I've reached a peak with PHP and am looking to master something new. Ruby it is.

Continue reading...

August 25, 2010 — Doctors used to recommend leeches to cure a whole variety of illnesses. That seems laughable today. But I think our recommendations today will be laughable to people in the future.

Recommendations work terribly for any one individual but decently on average.

Continue reading...

August 25, 2010 — Genetics, aka nature, plays the dominant role in predicting most aspects of your life, in my estimation.

Continue reading...

August 25, 2010 — Maybe I'm getting old, but I'm starting to think the best way to "change the world" isn't to bust your ass building companies, inventing new machines, running for office, promoting ideas, etc., but to simply raise good kids. Even if you are a genius and can invent amazing things, the combined output of a few good kids you raise can easily top yours. Nerdy version: you are a single-core CPU and can't match the output of a multicore machine.

I'm not saying I want to have kids anytime soon. I'm just realizing after spending time with my family over on Cape Cod, that even my dad, who is a harder worker than anyone I've ever met and has made a profound impact with his work, can't compete with the output of 4 people (and their potential offspring), even if they each work only 1/3 as hard, which is probably around what we each do. It's simple math.
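Spelled out, assuming output scales linearly with effort and normalizing one full-effort worker's output to 1, four people each working at one third of that effort come out ahead:

$$
4 \times \tfrac{1}{3} = \tfrac{4}{3} \approx 1.33 > 1
$$

And that is before counting any of their potential offspring.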

So the trick to making a difference is to sometimes slow down, spend time raising good kids, and delegate some of the world saving to them.

Continue reading...

August 25, 2010 — I've been working on a fun side project of categorizing things into Mediocristan or Extremistan (inspired by NNT's book "The Black Swan").

I'm trying to figure out where intelligence belongs. Bill Gates is a million times richer than many people; was Einstein a million times smarter than a lot of people? It seems highly unlikely. But how much smarter was he? Was he 1,000x smarter than the average Joe? 100x smarter?

I'm not sure. The brain is a complex thing and I haven't figured out how to think about intelligence yet.

Would love to hear what other people think. Shoot me an email!

Continue reading...

August 25, 2010 — I have a feeling critical thinking gets the smallest share of the brain's resources. The trick is to think critically about things, come to conclusions, and turn those conclusions into habits. The subconscious, habitual mind is much more powerful than the tiny little conscious, critically thinking mind.